...making Linux just a little more fun!

June 2010 (#175):

- Mailbag

- 2-Cent Tips

- News Bytes, by Deividson Luiz Okopnik and Howard Dyckoff

- NAT routing with a faulty uplink, by Silas Brown

- Away Mission - Upcoming in June: SemTech, USENIX, OPS Camp, by Howard Dyckoff

- OPS Camp and DevOps Days - coming in June, by Howard Dyckoff

- Pixie Chronicles: Part 3 PXE boot, by Henry Grebler

- Maemo vs Android, by Jeff Hoogland

- Hacking RPMs with rpmrebuild, 2nd Edition, by Anderson Silva

- HelpDex, by Shane Collinge

- Ecol, by Javier Malonda

- XKCD, by Randall Munroe

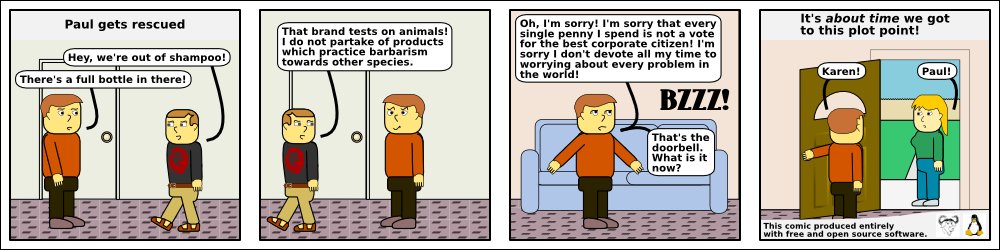

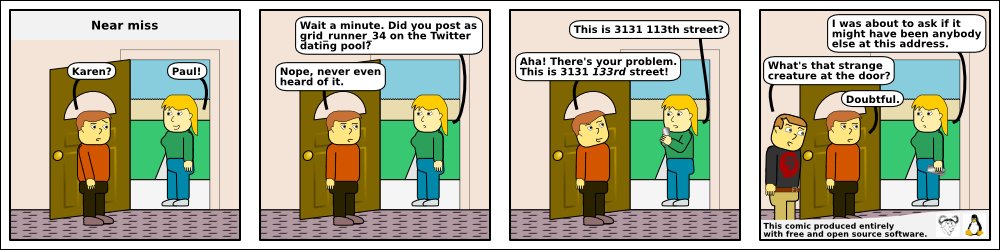

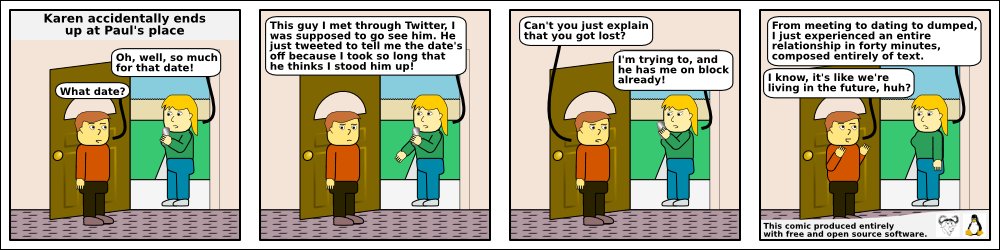

- Doomed to Obscurity, by Pete Trbovich

Mailbag

This month's answers created by:

[ Ben Okopnik, René Pfeiffer, Neil Youngman, Steve Brown ]

...and you, our readers!

Our Mailbag

Testing new anti-spam system, news at 11.0.0.0

Ben Okopnik [ben at linuxgazette.net]

Sun, 9 May 2010 17:41:43 -0400

Hi, all -

I'm currently trying out a new anti-spam regime on my machine; it's a

sea-change from what I've been trying up until now (SpamAssassin, etc.)

I'm tired of "enumerating badness" - i.e., trying to figure out who the

Bad Guys are and block them. Instead, I've hacked up a procmail-based

challenge-and-response system.

The operation of this gadget isn't all that complicated:

0) Copy all emails to a backup mailbox.

1) Archive mail from any of my bots, list-reminders, etc.

2) Deliver mail from any lists I'm on.

3) Dump any blacklisted senders.

4) Deliver any whitelisted ones.

5) Check headers to see if it's actually from me; deliver if so...

6) ...and dump any remaining email purporting to be from me into /dev/null.

7) Mail that doesn't fit the above criteria gets held and the sender is

notified of this. If they respond to this verification message, they

automatically get added to the whitelist. Held email automatically gets

dumped when it's a month old.

So far, over the past few hours since I've implemented this, it seems to

be working fine: zero spam (once I tuned #5/#6 a little more), and the

valid messages seem to be coming through just fine. I'm still watching

it carefully to make sure it doesn't blow up in some odd way, but so

far, so good.

In about a month - depending on where I am and a number of other factors

- I just might write this up.  Having to manually go through and

delete 500-1500 emails per day... I'm just totally over that.


--

* Ben Okopnik * Editor-in-Chief, Linux Gazette * http://LinuxGazette.NET *

[ Thread continues here (16 messages/26.58kB) ]
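The order of checks in the message above (blacklist, then whitelist, then hold) can be sketched in a few lines of shell. This is only an illustration of the decision order, not Ben's procmail recipes; the function name `classify_sender` and the one-address-per-line list files are assumptions made for the example.

```shell
# Hypothetical sketch of the decision order only; not the actual procmail setup.
# Both list files are assumed to hold one address per line.
classify_sender() {
    sender=$1
    whitelist=$2
    blacklist=$3
    # Step 3 comes before step 4: a blacklisted sender is dumped first.
    if grep -qixF -- "$sender" "$blacklist"; then
        echo dump
    elif grep -qixF -- "$sender" "$whitelist"; then
        echo deliver
    else
        # Step 7: hold the message and ask the sender to verify.
        echo hold
    fi
}

# Small demonstration with throwaway lists and made-up addresses.
tmp=$(mktemp -d)
printf '%s\n' 'friend@example.org' > "$tmp/white"
printf '%s\n' 'spammer@example.com' > "$tmp/black"
for s in friend@example.org spammer@example.com stranger@example.net; do
    printf '%s -> %s\n' "$s" "$(classify_sender "$s" "$tmp/white" "$tmp/black")"
done
rm -rf "$tmp"
```

Matching whole lines as fixed strings (`-x -F`) avoids treating the dots in an address as regular-expression wildcards.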

Talkback: Discuss this article with The Answer Gang

Published in Issue 175 of Linux Gazette, June 2010

2-Cent Tips

2-cent tip: A safer 'rm'

Ben Okopnik [ben at linuxgazette.net]

Sun, 16 May 2010 08:49:40 -0400

----- Forwarded message from "Silas S. Brown" <ssb22 at cam.ac.uk> -----

If you've ever tried to delete Emacs backup files with

rm *~

(i.e. remove anything ending with ~), but you

accidentally hit Enter before the ~ and did "rm *",

you might want to put this in your .bashrc and .bash_profile :

function rm () {

  # Refuse only when the arguments are exactly what "*" expands to here.

  if test "a $*" = "a $(echo *)"; then

    echo "If you really meant that, say -f"

  else

    /bin/rm "$@"

  fi

}

That way, typing "rm *" will give you a message

telling you to use the -f flag if you really meant it,

but any other rm command will work.

(The "a" in the test is to ensure that any

options for "rm" are not read as options for "test".)

(It's also possible to alias rm to rm -i, but

that's more annoying as it prompts EVERY time,

which is likely to make you habitually type -f

and that could be a bad thing.)

Silas

----- End forwarded message -----

[ Thread continues here (8 messages/14.88kB) ]
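To see why the comparison in the tip works, it helps to run it where nothing gets deleted. The sketch below pulls the test out into a helper (`is_bare_star` is a made-up name) and tries it in a scratch directory; the file names are invented for the demonstration.

```shell
# Sketch of the check used by the rm wrapper, isolated for experimentation.
# is_bare_star is a hypothetical helper name.
is_bare_star() {
    # "$*" joins the arguments with spaces; "echo *" prints every non-hidden
    # name in the current directory in the same form and order.
    test "a $*" = "a $(echo *)"
}

demo_dir=$(mktemp -d)
(
    cd "$demo_dir" || exit 1
    touch notes.txt notes.txt~ draft.tex~
    # The shell expands the glob before the function ever sees it.
    if is_bare_star *; then echo "rm * would be refused"; fi
    if ! is_bare_star *~; then echo "rm *~ would go ahead"; fi
)
rm -rf "$demo_dir"
```

One consequence of this design: naming every file in the directory by hand looks the same as `*` to the function, so that is refused as well. Adding `-f` changes the argument string, which is why it gets through.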

2-cent Tip: Message splitter

Ben Okopnik [ben at linuxgazette.net]

Sun, 9 May 2010 15:15:29 -0400

Once in a while, I need to split a mailbox (a.k.a. an mbox-formatted

file) into individual messages. This time, I've come up with a solution

that's going to go into my "standard solutions" file; it works well, and

saves the messages in zero-padded numerical filenames, so that they're

even properly sorted in the filesystem. Enjoy!

awk '{if (/^From /) f=sprintf("%03d",++a);print>>f}' mail_file

--

* Ben Okopnik * Editor-in-Chief, Linux Gazette * http://LinuxGazette.NET *
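For anyone who wants to try the splitter before pointing it at a real mailbox, here is a minimal sketch that wraps the same one-liner in a function and runs it on a tiny made-up mbox. The name `split_mbox`, the sample messages, and the choice to write into a separate output directory are assumptions for the example.

```shell
# Sketch: run the one-liner inside a chosen output directory.
# split_mbox is a hypothetical wrapper name.
split_mbox() {
    # $1: mbox file (absolute path), $2: existing output directory
    ( cd "$2" && awk '{if (/^From /) f=sprintf("%03d",++a);print>>f}' "$1" )
}

# Demonstration on a throwaway two-message mbox (sample data is invented).
work=$(mktemp -d)
mkdir "$work/out"
cat > "$work/mail_file" <<'EOF'
From alice@example.org Sun May  9 15:00:00 2010
Subject: first

Hello.

From bob@example.org Sun May  9 15:05:00 2010
Subject: second

Hi again.
EOF

split_mbox "$work/mail_file" "$work/out"
ls "$work/out"
rm -rf "$work"
```

Because the one-liner appends with `>>`, running it twice in the same directory adds to the existing numbered files, so an empty output directory is the safer choice.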

2 cent tip: Screenshot with a pull-down menu

Prof. Partha [profdrpartha at gmail.com]

Tue, 4 May 2010 19:44:24 -0400

You can do it with our good old GIMP. Go to the screen you want,

click and get that drop down menu. Launch GIMP. Then go to File >

Acquire > Screenshot. Set delay to, say, 10 sec. Minimise GIMP and

bring up the screen with your desired pull down menu. GIMP will

make a snapshot of the screen in 10 sec.

I have uploaded a screen shot made this way, just for you to

check out if this is what you want. See

http://www.profpartha.webs.com/snap2.jpg .

Have fun.

--

-------------------------------------------------------------------

Dr. S. Parthasarathy | mailto:profdrpartha at gmail.com

Algologic Research & Solutions |

78 Sancharpuri Colony, Bowenpally | Phone: + 91 - 40 - 2775 1650

Secunderabad 500 011 - INDIA |

WWW-URL:

http://algolog.tripod.com/nupartha.htm

http://www.profpartha.webs.com/

-------------------------------------------------------------------


News Bytes

By Deividson Luiz Okopnik and Howard Dyckoff


Selected and Edited by Deividson Okopnik

Please submit your News Bytes items in

plain text; other formats may be rejected without reading.

[You have been warned!] A one- or two-paragraph summary plus a URL has a

much higher chance of being published than an entire press release. Submit

items to bytes@linuxgazette.net. Deividson can also be reached via twitter.

News in General

Google Gives Web $120 Million Gift


At its third Google I/O developer conference, Google announced that it

was releasing the VP8 video codec as free open-source software. The

VP8 video codec was originally developed by On2, a company that Google

acquired in 2009, and is valued at $120 million.

Google has joined with a broad array of companies (Mozilla,

Brightcove, Skype, Opera and others) and web community members to

support WebM, an open web media format project, which will use VP8.

Several partners expressed strong support for the codec, which will be

royalty free, including Mozilla's VP of engineering Mike Shaver.

WebM provides an open and standard video codec which can compete with

the H.264 proprietary codec that Apple and Microsoft are supporting.

H.264 is not currently open-source and patent licensing and/or

royalties may be required.

The WebM open web media format comprises:

* VP8, a high-quality video codec;

* Vorbis, an already open source and broadly implemented audio codec;

* a container format based on a subset of the Matroska media container.

According to Google, VP8 results in more efficient bandwidth usage

(lower costs for publishers) and high-quality video for end users. A

developer preview is available at http://www.webmproject.org.

See the WebM FAQ at:

http://www.webmproject.org/about/faq/1999.

In other conference announcements, Google released its Wave wiki

application to the public (anyone can now sign up) and also announced a

business version of its App Engine Platform-as-a-Service. New App Engine

capabilities include managing all the apps in an organization from one

place, simple pricing based on users and applications, premium developer

support, a 99.9% uptime service level agreement, and coming later this

year, access to premium features like cloud-based SQL and SSL. The

SpringSource Tool Suite and Google Web Toolkit are being integrated to

support Java apps on the business App Engine.

More information about Google I/O 2010 is available at

http://code.google.com/events/io/.

Citrix XenClient Delivers Isolated Virtual Desktops "To Go"


At its Synergy 2010 conference on virtualization and cloud computing,

Citrix Systems announced the first public release of Citrix XenClient,

a new client-side virtualization solution. Developed in collaboration

with Intel, it allows centrally managed virtual desktops to run

directly on corporate laptops and PCs, even when they are disconnected

from the network.

A major milestone in the industry, XenClient provides high levels of

performance, security, and isolation through its bare metal

architecture and integration with Intel vPro hardware virtualization

technologies. XenClient Express, a free trial and evaluation kit that

lets IT professionals begin experiencing the benefits of desktop

virtualization for their mobile users, is available for immediate

download.

This development comes at a time when parts of the Open Source

community are edging away from Xen as a hypervisor as KVM gains

support. Integration of the Xen Dom0 code with the Linux kernel has

fallen behind schedule while Citrix has been offering more enterprise

class products and support under the XenServer banner and has been

tweaking up its broad partner infrastructure.

Extending virtual desktops to mobile laptop users requires a portable

local VM-based desktop solution that delivers the benefits of

centralized management and security while fulfilling the great user

experience, mobility and flexibility that users expect from a laptop

device. This approach also allows customers to run more than one

virtual desktop on the same laptop - ideal for companies that want to

maintain a secure corporate desktop for each user, while still giving

employees the freedom to run their own personal desktop and

applications on the same device. Current client-side technologies that

run virtual desktops on top of an existing operating system have not

been able to match these requirements. XenClient, a bare metal

hypervisor which is built on the same virtualization technology as

Citrix XenServer, can offer the control and security that IT demands.

Citrix XenClient was demonstrated in the opening day keynote with

Citrix CEO Mark Templeton as well as in the hands-on learning labs and

show floor at Citrix Synergy 2010 in May.

Note that XenClient needs an Intel i5 or i7 chip, not a Core Duo or an

i3; it requires the new vPRO implementation. However, Citrix execs hint

that other chip architectures will be added slowly, perhaps in 2011.

Key Facts and Highlights:

- XenClient Bare Metal Hypervisor - Based on Citrix XenServer

technology, and leveraging Intel virtualization technology,

XenClient enables each virtual machine to run side-by-side

directly on the hardware, rather than hosted within the installed

operating system. IT can deliver secure, locked down corporate

environments while giving users the flexibility to install

personal applications in a separate virtual machine without

compromising the security of either desktop.

- Receiver for XenClient - Citrix Receiver for XenClient is a

lightweight client that lets users create and manage their own

local virtual desktops, or access centrally managed corporate

virtual desktops.

- Synchronizer for XenClient - Laptops with XenClient can connect

to Synchronizer to download centrally-managed virtual desktops.

Synchronizer enables user data to be backed up automatically

through a secure connection over the internet. With Synchronizer,

IT can define security policies for managed laptops, disable lost

or stolen XenClient laptops and restore a user's virtual desktop

on any XenClient based laptop.

- Availability - XenClient Express, which includes the XenClient

bare metal hypervisor, Citrix Receiver for XenClient and

Synchronizer for XenClient, is freely available for public

download. XenClient express is intended for organizations to trial

small deployments within their organization at no charge.

XenClient is expected to become generally available with the next

release of Citrix XenDesktop later in 2010.

The Linux Foundation Announces LinuxCon Program and Schedule


The Linux Foundation (LF) has announced the keynote speakers and the

full conference schedule for this year's LinuxCon taking place in Boston

August 10-12. Hot topics - ranging from KVM to Linux's success on the

desktop to MySQL and MariaDB - are among more than 60 sessions focused on

operations, development and business.

Mini-summits will take place during the two days prior to LinuxCon and

include a recently added Cloud Summit. For more details on the

mini-summits, please visit:

.

The LinuxCon schedule includes in-depth technical content for

developers and operations personnel, as well as business and legal

insight from the industry's leaders. LinuxCon sold out when it

premiered in Portland, Oregon, in 2009.

The final LinuxCon program includes sessions that address enterprise

computing as well as controversial topics, including:

* MySQL author Monty Widenius on why he forked with MariaDB;

* Canonical executive Matt Asay speculating on where the Linux

desktop is succeeding;

* Microsoft's Hank Janssen discussing the physics behind the Hyper-V

drivers for Linux;

* Red Hat's Matthew Garrett sharing lessons learned from recent

Android/Kernel community discussions.

New keynote additions include:

- Intel & Nokia in a joint keynote titled, "Freedom to Innovate: Can

MeeGo's Openness Change the Mobile Industry?";

- Oracle's Wim Coekaerts, Senior Vice President, Linux and

Virtualization Engineering, will take a technical look at Linux at

Oracle;

- Novell's Senior Vice President and General Manager of Open Platform

Solutions, Markus Rex, will speak about the changing nature of IT

workloads on Linux;

- President of Qualcomm's Innovation Center (QuIC) Rob Chandhok, will

talk about the challenges in open source and mobile today in his

keynote.

These keynotes are in addition to the ones announced earlier this

month, which include the Linux Kernel Roundtable keynote, Virgin

America's CIO Ravi Simhambhatla, the Software Freedom Law Center's

Eben Moglen, the GNOME Foundation's Stormy Peters, and Forrester's

Jeffrey Hammond. For more info, see:

.

Additional highlights include 60 sessions dispersed across the

operations, development and business tracks, including:

- How We Made Ubuntu Boot Faster;

- KVM: The Latest from the Core Development Team;

- One Billion Files: Scalability Limits in Linux File Systems.

LinuxCon will also feature half-day, in-depth tutorials that include

How to Work with the Linux Development Community, Building Linux

Device Drivers and Using Git, among others.

For more detail on the program and speakers, please visit:

.

To register, please visit:

http://events.linuxfoundation.org/component/registrationpro/?func=details&did=27.

Conferences and Events

- Agile Development Practices West

June 6-11, 2010, Caesars Palace, Las Vegas

http://www.sqe.com/agiledevpractices/.

- Internet Week New York

June 7-14, 2010, New York, New York

http://internetweekny.com/.

- Southeast LinuxFest

June 11-13, 2010, Spartanburg, SC

http://southeastlinuxfest.org.

- Über Conf 2010

June 14-17, Denver, CO

http://uberconf.com/conference/denver/2010/06/home.

- Enterprise 2.0 Boston

June 15-17, Westin Waterfront, Boston, MA

http://www.e2conf.com/boston/conference.

- BriForum 2010

June 15-17, Hilton Hotel, Chicago, IL

http://briforum.com/index.html.

- Fosscon

June 19, 2010, Rochester, NY

http://Fosscon.org.

- OpsCamp IT Operations Unconference

June 19, 2010, Atlanta, GA, USA

June 26, 2010, Seattle, WA, USA

http://opscamp.org/.

- Semantic Technology Conference

June 21-25, Hilton Union Sq., San Francisco, CA

http://www.semantic-conference.com/.

- O'Reilly Velocity Conference

June 22-24, 2010, Santa Clara, CA

http://en.oreilly.com/velocity.

- USENIX Federated Conferences Week: USENIX ATC, WebApps, FAST-OS, HotCloud, HotStorage '10

June 22-25, Sheraton Boston Hotel, Boston, MA

http://usenix.com/events/confweek10/.

- DevOps Day USA 2010

June 25, Mountain View, CA

http://www.devopsdays.org/2010-us/registration/.

- CiscoLive! 2010

June 27-July 1, Mandalay Bay, Las Vegas, NV

http://www.ciscolive.com/attendees/activities/.

- SANS Digital Forensics and Incident Response Summit

July 8-9 2010, Washington DC

http://www.sans.org/forensics-incident-response-summit-2010/agenda.php.

- GUADEC 2010

July 24-30, 2010, The Hague, Netherlands.

- Black Hat USA

July 24-27, Caesars Palace, Las Vegas, Nev.

http://www.blackhat.com/html/bh-us-10/bh-us-10-home.html.

- First Splunk Worldwide Users' Conference

August 9-11, 2010, San Francisco, CA

http://www.splunk.com/goto/conference.

- LinuxCon 2010

August 10-12, 2010, Renaissance Waterfront, Boston, MA

http://events.linuxfoundation.org/events/linuxcon.

- USENIX Security '10

August 11-13, Washington, DC

http://usenix.com/events/sec10.

- VM World 2010

August 30-Sep 2, San Francisco, CA

http://www.vmworld.com/index.jspa.

COSCUP/GNOME.Asia 2010 now calling for papers


COSCUP is the largest open source conference in Taiwan, and

GNOME.Asia Summit is Asia’s GNOME user and developer conference. The

joint conference will be held in Taipei on August 14 and 15. With a

tagline of "Open Web and Mobile Technologies", it emphasizes the

exciting development in these two areas as well as the GNOME desktop

environment, and leverages the world-leading hardware industry in

Taiwan. The program committee invites open source enthusiasts all

around the world to submit papers via

http://coscup.org/2010/en/programs.

For more details on this joint conference, please visit the websites at

http://coscup.org/

and

http://gnome.asia/.

Distro News

Fedora 13 released with 3D Graphics Drivers


The Fedora Linux distribution is now at version 13, codenamed Goddard.

It brings important platform enhancements and several new desktop

applications plus new open source graphics drivers.

Fedora 12 included experimental 3D support for newer ATI cards in the

free and open source Radeon driver, and now experimental 3D support

has been extended in Fedora 13 to the Nouveau driver for a range of

NVIDIA video cards. Simply install the mesa-dri-drivers-experimental

package to take advantage of this new feature. Support for 3D

acceleration using the radeon driver is no longer considered

experimental with version 13.

Fedora 13 ships with GNOME 2.30, the latest stable version of the

GNOME desktop environment. The new version of GNOME adds a few

noteworthy improvements, such as support for a split-pane view in the

Nautilus file manager and support for Facebook chat in the Empathy

instant messaging client.

Simple Scan is the default scanning utility for Fedora 13. Simple Scan

is an easy-to-use application, designed to let users connect their

scanner and import the image or document in an appropriate format.

The user interface of Anaconda, the Fedora installer, has changed to

handle storage devices and partitioning in an easy and streamlined

manner, with helpful hints in the right places for newbies and

experienced users.

The user account tool has been completely redesigned, and the

accountsdialog and accountsservice test packages are available to make

it easy to configure personal information, make a personal profile

picture or icon, generate a strong passphrase, and set up login

options for your Fedora system.

PolicyKitOne replaces the old deprecated PolicyKit and gives KDE users

a better experience of their applications and desktop in general. The

Fedora 12 KDE Desktop Edition used Gnome Authentication Agent.

PolicyKitOne makes it possible to utilize the native KDE

authentication agent, KAuth, in Fedora 13.

Fedora continues its leadership in virtualization technologies with

improvements to KVM such as Stable PCI Addresses and Virt Shared

Network Interface technologies. Having stable PCI addresses will

enable virtual guests to retain PCI addresses' space on a host

machine. The shared network interface technology enables virtual

machines to use the same physical network interface cards (NICs) as

the host operating system. Fedora 13 also enhances performance of

virtualization via VHostNet acceleration of KVM networking.

Fedora now offers the latest version 4 NFS protocol for better

performance, and, in conjunction with recent kernel modifications,

includes IPv6 support for NFS as well. Also new, NetworkManager adds

mobile broadband enhancements to show signal strength; support for

old-style dial-up networking (DUN) over Bluetooth; and command line

support in addition to the improved graphical user interface. Python

3 with enhanced Python gdb debugging support is also included.

Fedora spins are alternate versions of Fedora tailored for various

types of users via hand-picked application sets or customizations.

Fedora 13 includes four completely new spins in addition to the

several already available, including Fedora Security Lab, Design

Suite, Sugar on a Stick, and Moblin spin.

Download versions of Fedora 13 from:

http://fedoraproject.org/get-fedora.

Lucid Puppy 5.0 out of the kennel


A major new version of Puppy Linux, Puppy 5.0, was released in May.

Puppy 5.0, code named "Lupu" and also referred to as "Lucid Puppy", is

built from Ubuntu Lucid Lynx binary packages. It is typically Puppy,

lean and fast, friendly and fun, with some new features. Puppy 5.0

features Quickpet, with many Linux productivity and entertainment

programs, configured and tested, available with one-click. Puppy 5

also introduces choice in browsers: pick one or all and choose the

default.

Lupu boots directly to the desktop and has tools to personalize Puppy.

Language and Locale are easy to set. Kauler's Simple Network Setup is

another of those easy config tools. Updating to keep up with bugfixes

is another one-click wonder. Previous Puppies were all prepared

primarily by Kauler but Puppy 5.0 was a product of the Puppy community

with Mick Amadio, chief developer, and Larry Short, coordinator.

The official announcement and release notes:

http://distro.ibiblio.org/pub/linux/distributions/puppylinux/puppy-5.0/release-500.htm.

The list of packages:

http://www.diddywahdiddy.net/Puppy500/LP5-Release/Lucid_Pup_Packages.

Software and Product News

Oracle Launches Sun Netra 6000 for Telcos


Adding to Sun's original carrier-grade servers, Oracle has announced

the Sun Netra 6000 for the communications industry. The introduction

of the Netra 6000 extends Oracle's portfolio for the communications

industry from carrier-grade servers, storage and IT infrastructure, to

mission-critical business and operations support systems and service

delivery platforms.

Leveraging the same advanced features of Oracle's Sun Blade 6000

modular system, including advanced blade networking, simplified

management and high reliability, the Sun Netra 6000 adds carrier-grade

qualities such as Network Equipment Building System (NEBS)

certification and extended lifecycle support. NEBS certification helps

reduce cost and risk and improve time to market for customers, and is

required for telecommunications central office deployments.

The Sun Netra 6000 offers communication service providers (CSPs) and

network equipment providers (NEPs) a highly available and

cost-efficient blade system designed for applications like media

services delivery and Operations and Business Support Systems

(OSS/BSS).

The Sun Netra 6000 modular system includes the Sun Netra 6000 AC

chassis and the Sun Netra T6340 with UltraSPARC T2+ processors running

the Solaris operating system. The system can handle demanding

workloads, including multi-threaded Web applications and advanced

IP-based telco Web services. Additionally, at up to 256 GB, it delivers

the highest memory capacity in the industry for blade servers.

The Sun Netra 6000 is a modular blade system delivering CSPs and NEPs

high reliability with hot-swappable and hot-pluggable server blades,

blade network, and I/O modules. With on-chip, wire speed

cryptographic support, security comes standard, enabling secure

connections for online transactions and communication.

Opera 10.53 for Linux Now in Beta


The Opera 10.53 beta for Linux and FreeBSD is now available for

download. In this beta release, Opera has designed a faster, more

feature-rich browser that is tailored for the Linux platform.

"Linux has always been a priority at Opera, as many people within our

own walls are devoted users," said Jon von Tetzchner, co-founder,

Opera Software. "It was important for us in this release to make

alterations to our terms of usage, in order to make Opera even easier

to distribute on Linux."

The new Carakan JavaScript engine and Vega graphics library make Opera

10.5x more than 8 times faster than Opera 10.10 on tests like

Sunspider.

Private browsing offers windows and tabs that eliminate their browsing

history when closed, ensuring your privacy.

Zooming in and out of Web pages is easier with a new zoom slider and

view controls accessible from the status bar. Widgets are now

installed as normal applications on your computer and can work

separately from the browser.

The user interface for Linux has been reworked, and the new "O" menu

allows access to all features previously available in the menu bar.

The menu bar can be easily reinstated.

No more Qt dependence. Opera's user interface now integrates with

either GNOME/GTK or KDE libraries, depending on the users'

installation.

Better integration with KDE and GNOME desktops and full support for

skinning let Opera 10.53 for Linux fit in seamlessly on a wide

variety of Linux distributions.

New End User License agreement for Opera 10.53 beta for Linux: Opera

10.53 beta is now available for inclusion in software distribution

repositories for wide distribution on Linux and FreeBSD operating

systems. Additionally, all browsers (Desktop, Mini and Mobile) can be

installed in organizations. For example a systems administrator at a

school can install Opera on all the school's PCs.

Download Opera for Linux and FreeBSD at

http://www.opera.com/browser/next/.


![[BIO]](../gx/authors/dokopnik.jpg)

Deividson was born in União da Vitória, PR, Brazil, on

14/04/1984. He became interested in computing when he was still a kid,

and started to code when he was 12 years old. He is a graduate in

Information Systems and is finishing his specialization in Networks and

Web Development. He codes in several languages, including C/C++/C#, PHP,

Visual Basic, Object Pascal and others.

Deividson works in Porto União's Town Hall as a Computer

Technician, and specializes in Web and Desktop system development, and

Database/Network Maintenance.

Howard Dyckoff is a long term IT professional with primary experience at

Fortune 100 and 200 firms. Before his IT career, he worked for Aviation

Week and Space Technology magazine and before that used to edit SkyCom, a

newsletter for astronomers and rocketeers. He hails from the Republic of

Brooklyn [and Polytechnic Institute] and now, after several trips to

Himalayan mountain tops, resides in the SF Bay Area with a large book

collection and several pet rocks.

Howard maintains the Technology-Events blog at

blogspot.com from which he contributes the Events listing for Linux

Gazette. Visit the blog to preview some of the next month's NewsBytes

Events.

NAT routing with a faulty uplink

By Silas Brown

Faulty uplinks are common, especially if you are using a UMTS (or GPRS)

modem over a mobile phone network. In some cases pppd will

automatically re-establish the connection whenever it goes down, but if it

doesn't you can run a script like this:

export GoogleIp=74.125.127.100

while true; do

  # Four failed pings in a row (up to 5 seconds each) mean the link is down.

  if ! ping -s 0 -c 1 -w 5 "$GoogleIp" >/dev/null &&

     ! ping -s 0 -c 1 -w 5 "$GoogleIp" >/dev/null &&

     ! ping -s 0 -c 1 -w 5 "$GoogleIp" >/dev/null &&

     ! ping -s 0 -c 1 -w 5 "$GoogleIp" >/dev/null; then

    echo "Gone down for more than 20secs, restarting"

    killall pppd ; sleep 1 ; killall -9 pppd ; sleep 5

    # TODO restart pppd here; give it time to start

  fi

  sleep 10

done

Each ping command sends a single empty ICMP packet to Google and

waits up to 5 seconds for a response. Four failures in a row mean the

connection is probably broken so we restart pppd. I use a more

complex version of this script which, if it cannot get connectivity back by

restarting pppd, will play a voice alert over the speakers (as

there is no display on the router machine); the message asks for the modem

to be physically reset. (This message is in Chinese because that's what

I'm learning; it tends to surprise anyone who's visiting me at the time.

See An NSLU2 (Slug)

reminder server in LG #141.)
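The "several failures in a row" test can also be written once and reused with any probe command. What follows is a minimal sketch rather than the more complex script mentioned above; `link_is_down` and its arguments are names invented for the example, and `true` and `false` stand in for the probe so the logic can be tried without a network.

```shell
# Sketch: succeed only if a probe command fails N times in a row.
# link_is_down is a hypothetical helper name.
link_is_down() {
    tries=$1
    shift
    while [ "$tries" -gt 0 ]; do
        # A single successful probe means the link is still up.
        if "$@" >/dev/null 2>&1; then
            return 1
        fi
        tries=$((tries - 1))
    done
    return 0
}

# Stand-in probes, so no packets are sent:
if link_is_down 4 false; then echo "would restart pppd"; fi
if ! link_is_down 4 true; then echo "link is fine"; fi

# With the probe from the script above, the call would read:
#   link_is_down 4 ping -s 0 -c 1 -w 5 "$GoogleIp"
```

Stopping at the first successful probe keeps the check quick while the link is healthy; the full wait only happens when every probe times out.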

NAT

Many Linux administrators will be familiar with how to set up a NAT

router using iptables, for connecting other computers on a local

network to the outside world. NAT (Network Address Translation) will not

only forward outgoing IP packets from any of your computers, but will also

keep track of the virtual connections that these packets are making, so it

knows which computer to forward the replies to when they arrive. The basic

way to set up NAT is:

modprobe iptable_nat

iptables -P FORWARD ACCEPT

echo 1 > /proc/sys/net/ipv4/ip_forward

iptables -t nat -F POSTROUTING

iptables -t nat -A POSTROUTING -j MASQUERADE

However, there is a problem with this basic NAT setup: It doesn't cope

at all well if the uplink to the outside world has to change its IP

address.

Stuck connections

If the uplink is broken and re-established, but one of your other

computers continues to send IP packets on a previously-opened connection,

then the kernel's NAT system will try to forward those packets using the

same source port and IP address as it had done before the uplink failed,

and this is not likely to work. Even in the unlikely event that

pppd acquired the same IP address as before, the ISP's router

might still have forgotten enough of the state to break the connections.

One simply cannot assume that already-open connections can continue to be

used after a modem link has been re-established.

The problem is, there may be nothing to tell the applications running on

your other computers that their individual connections need to be dropped

and re-established. Applications on the same computer stand a

chance because the operating system can automatically cut their connections

when the interface (ppp) goes down, but it's not so easy to tell

other computers about what just happened to the interface. Should

any of them try to continue sending IP packets on an old connection, the

packets will be faithfully forwarded by NAT using the old settings, and

probably get nowhere. In the best case, some upstream router will reply

with an ICMP Reject packet which will tell the application something has

gone wrong, but more often than not the packets simply get lost, and your

application will continue to hold onto the opened connection until it

reaches its timeout, which could take very many minutes. (One example of

an application where this is annoying is the Pidgin instant messaging

client. It may look like you're online and ready to receive messages from

your contacts, but those messages won't reach you because Pidgin is holding

onto an old connection that it should have discarded when your uplink was

renewed.)

Clearing the connections

Ideally, it would be nice if the NAT router could, as soon as the

connection is renewed, send a TCP "reset" (RST) packet on all open TCP

connections of all your computers, telling them straight away that these

old connections are no longer useful. Unfortunately, this is not practical

because to send a reset packet you need to know the current TCP "sequence

number" of each connection, and that information is not normally stored by

the NAT lookup tables because NAT doesn't need it for normal operation.

(It is possible to flood your local network with thousands of

reset packets on all possible sequence numbers, for example by using a

packet-manipulation library like Perl's Net::RawIP or a modified

version of the apsend script that uses it, but it takes far too

long to go through all the sequence numbers.)

Unless you patch the kernel to make NAT store the sequence number, the

best you can hope for is to send a reset packet the next time an outgoing

IP packet from the old connection is seen going through your router. This

is normally soon enough, as most applications will at least have some kind

of "keep-alive" mechanism that periodically checks the connection by

sending something on it.

Here is the modified NAT setup script. Besides iptables, you

will need a program called conntrack which is normally available

as a package.

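# Load NAT support and start from a clean FORWARD chain
modprobe iptable_nat
iptables -P FORWARD ACCEPT
iptables -F FORWARD

# Forward packets on tracked connections, and new TCP connections (SYN set)
iptables -A FORWARD -m conntrack --ctstate ESTABLISHED -j ACCEPT
iptables -A FORWARD -p tcp --syn -j ACCEPT

# Any other TCP packet belongs to a stale connection: answer with a reset
iptables -A FORWARD -p tcp -j REJECT --reject-with tcp-reset

# Forget all previously tracked connections
conntrack -F

# Enable forwarding and masquerade the forwarded traffic
echo 1 > /proc/sys/net/ipv4/ip_forward
iptables -t nat -F POSTROUTING
iptables -t nat -A POSTROUTING -j MASQUERADE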

The conntrack -F command tells the kernel to flush (i.e. clear)

its connection-tracking tables, so it doesn't know about old connections

anymore. That by itself is not enough, however, since any further attempt

to send IP packets on these old connections will cause NAT to add them back

into its tables and the packets will still be forwarded; this time they

probably will reach the remote server, but it won't recognise them because

they'll be coming from a different source port (and probably a different IP

address), and if it's not very nice (as many servers aren't because they

have to live in a big bad world where people launch denial-of-service

attacks), it won't bother to respond to these stray packets with ICMP

rejections, so your application still won't know any better.

Therefore, as well as flushing the connection-tracking tables, we add

some filtering rules to the FORWARD chain that tell the kernel to

reject any attempt to send TCP packets, unless it's either making a new

connection (SYN set), or it's on a connection that we know about. (Note

that we do have to specify that a new TCP connection is one that has SYN

set; we can't use the NEW criterion in iptables' conntrack module,

because that will say it's new if it's part of an old connection that just

isn't in the table. For the same reason, we can't use conntrack's

INVALID criterion here.) If the IP packet is not from an established

connection that we know about, then it's probably from a connection that

existed before we flushed the tables, so we reply to it with a reset

packet, which should cause the application to realise that this connection

is no longer working and it should try to make a new one. (Pidgin will

actually prompt the user about this, but if it's left unattended then after

a short time it will answer its own question automatically and

reconnect.)
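The filtering logic described above can be summarised as a first-match decision over the three FORWARD rules. The following is only a simplified model for reasoning about it, not something the router runs; the function name and its two arguments are made up for illustration.

```shell
#!/bin/sh
# Simplified model of the three FORWARD rules for a TCP packet (first match wins).
#   $1 = "tracked" if the packet belongs to a connection in the conntrack table,
#        anything else if it does not
#   $2 = "syn" if the packet opens a new connection, anything else otherwise
forward_verdict() {
    if [ "$1" = tracked ]; then
        echo "ACCEPT"              # rule 1: --ctstate ESTABLISHED
    elif [ "$2" = syn ]; then
        echo "ACCEPT"              # rule 2: -p tcp --syn
    else
        echo "REJECT tcp-reset"    # rule 3: stale connection, send a reset
    fi
}

forward_verdict tracked nosyn      # traffic on a known connection
forward_verdict untracked syn      # a brand-new connection
forward_verdict untracked nosyn    # left over from before the flush
```

The third case is the one that matters after conntrack -F: the packet is neither on a known connection nor a SYN, so its sender is told to give up.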

Non-TCP packets (UDP etc) are not affected by this filter, because it

would be very hard to determine accurately whether they're part of an old

"connection" or a new one. (It's also not possible to send a "reset" packet

outside of TCP, although an ICMP rejection can still be generated. For TCP

connections I'm using reset rather than ICMP-reject because reset seems to

have a more immediate effect, although I haven't proved that properly.)

Thankfully, most Internet applications (particularly the ones that are

likely to run unattended) use TCP at least for their main connections, so

TCP is probably all we need to concern ourselves with here.

All that remains is to arrange for the above NAT script to be re-run

whenever pppd is restarted. That's why it includes the

iptables -F instructions to clear the IP tables before adding

rules to them; if you always start by clearing the table then running the

script multiple times will not cause the tables to become cluttered with

more and more duplicate rules.
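One way to arrange the re-run, sketched under assumptions: on Debian-style systems, scripts placed in /etc/ppp/ip-up.d/ are normally run each time pppd brings the link up. The hook name (nat-reset) and the location of the NAT script (/usr/local/sbin/nat-setup.sh) are hypothetical; adjust them to wherever you saved the script above.

```shell
#!/bin/sh
# Hypothetical hook, e.g. saved as /etc/ppp/ip-up.d/nat-reset (Debian-style layout assumed).
# NAT_SCRIPT is an assumed location for the NAT setup script; override it if yours differs.
NAT_SCRIPT="${NAT_SCRIPT:-/usr/local/sbin/nat-setup.sh}"

rerun_nat_setup() {
    if [ -x "$NAT_SCRIPT" ]; then
        "$NAT_SCRIPT"
    else
        echo "nat-reset: cannot execute $NAT_SCRIPT" >&2
        return 1
    fi
}

# Run once the link is up; a failure here should not abort any other hooks.
rerun_nat_setup || true
```

Keeping the rules in one script and calling it from the hook means there is only one place to edit when the rules change.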

Et tu, ISP?

In conclusion I'd like to hazard a guess about some of the cases of

"stuck SSH sessions" that happen even when the uplink in general seems to

be working. Sometimes it seems that new connections work but old

connections are frozen, although nothing ever happened to the uplink (it's

still running and was not restarted). I wonder if in this case some NAT

box at the ISP simply forgot its association table, and has not been

configured to send reset packets as above.

Of course I do set ServerAliveInterval in my

~/.ssh/config to make sure that any idle SSH sessions I have will

periodically send traffic to keep reminding the ISP's NAT boxes I'm still

here, so please don't discard my table entry yet. The exact line I use is

ServerAliveInterval 200.

But sometimes a session can still hang permanently, even while I'm

actively using it, and I have to close its window or press ~. to

quit it, although at the same time any new connections I make work

just fine. Perhaps this happens when some event at the ISP causes a NAT

box to forget its translation table ahead of schedule. It would be nice if

they could use a script like the above to kindly send their customers

TCP-reset packets when this happens, so we're not just left hanging

there.

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/brownss.jpg) Silas Brown is a legally blind computer scientist based in Cambridge UK.

He has been using heavily-customised versions of Debian Linux since

1999.

Copyright © 2010, Silas Brown. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 175 of Linux Gazette, June 2010

Away Mission - Upcoming in June: SemTech, USENIX, OPS Camp

By Howard Dyckoff

This year, the Semantic Technology conference initiates a long week of

overlapping events. Since it's hard to jump across the US in a single

week, you may have to select a single venue.

Semantic Technology, or SemTech, is moving from San Jose to San Francisco,

a cooler clime this time of year. I am looking forward to the change of

venue since it saves me from early morning commutes into Silicon Valley.

Unless you live in the Valley, this should make it easier to visit SemTech,

which is one of the better events to view the state of smart web

technology.

Now in its sixth year, SemTech 2010 features five days of presentations,

panels, tutorials, announcements, and product launches. The focus this

year seems to be on the adoption of Semantic Tech by the government for

Open Government and transparency initiatives as well as business uptake in

a variety of industries. Among the keynote speakers, Dean Allemang, Chief

Technology Consultant at TopQuadrant, will speak on the Semantic Web for the

Working Enterprise.

SemTech is run by Wilshire Conferences, which also organizes the Enterprise

Data World Conference and the annual Data Governance Conference as well as

the Semantic Universe publication.

The USENIX Annual Technical Conference comes to Boston the same week and it

shares the site with many sub-conferences such as WebApps '10, a new

technical conference on all aspects of developing and deploying Web

applications. Also happening under the Federated Conferences banner is the

1st USENIX Cloud Virtualization Summit and an Android Developer lab. That's

almost enough to make me travel 3000 miles to check it out. Although

USENIX events tend to be academically-oriented, they also present the very

latest in research and innovation. It's well worth the trip if you live on

the Eastern seaboard.

OPS Camp comes to Seattle the same week and Atlanta the week before.

Please see the other Away Mission article on OPS Camp and the DevOps Day

USA conference on June 25, 2010 in Mountain View, CA. It's all about adding

state-of-the-art software engineering technology to Operations and

Infrastructure management.

Unfortunately, I will not be recommending the Velocity Conference as

O'Reilly has blocked my attendance there and at other O'Reilly events.

But the DevOps Day following that conference will probably discuss the

ideas presented there and that is a free event. Sign up early so you won't

be disappointed.

Finally, the following week brings Cisco Live! to Las Vegas. Since Cisco is

building server racks and expanding its Data Center offerings, this is

another important IT event. Fortunately, you can get some of the content

streamed over the web if you register early. This requires advance

registration as a "Guest".

So pick an event or two, and enjoy the summer.

Talkback: Discuss this article with The Answer Gang

Howard Dyckoff is a long-term IT professional with primary experience at

Fortune 100 and 200 firms. Before his IT career, he worked for Aviation

Week and Space Technology magazine and before that used to edit SkyCom, a

newsletter for astronomers and rocketeers. He hails from the Republic of

Brooklyn [and Polytechnic Institute] and now, after several trips to

Himalayan mountain tops, resides in the SF Bay Area with a large book

collection and several pet rocks.

Howard maintains the Technology-Events blog at

blogspot.com from which he contributes the Events listing for Linux

Gazette. Visit the blog to preview some of the next month's NewsBytes

Events.

Copyright © 2010, Howard Dyckoff. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 175 of Linux Gazette, June 2010

OPS Camp and DevOps Days - coming in June

By Howard Dyckoff

Imagine that all the sysadmin and operations scripts and configuration

files at your data center were organized, rationalized, and set up in

a separate test environment. Imagine you could test changes in an automated

build process. If you can do this, you are a long way toward the goals of

the new Dev Ops or Ops Code movement which wants to abstract infrastructure

and treat it as code.

I attended OPS Camp San Francisco on May 15, 2010, the 3rd OPS Camp event

out of the 5 currently planned for this year. There were about 100 people

there and they were focused on making Operations more like software

engineering.

The first OPS Camp was held in Austin in January and a follow-up OPS Camp

was held in Boston in April. Two more are planned for June and more are

likely in the Fall. There is also a separate DevOps Day in Mountain View,

making for 3 separate events this month. (See below.)

Part of the impetus behind OPS Camp is to hold discussions on the OPS end of

Cloud Computing without the snake oil and hype or the 50,000-foot overview

slides. Private clouds require the rapid deployment and management of many

standardized components. The Dev part of this means employing the tools

and techniques of software developers: continuous builds, test automation,

version control, Agile techniques.

Another way to think about this is to bridge the differences between new

Development and the stability (and reproducibility) of Operations, a

classic dichotomy.

The point is perhaps best summarized by Ernest Mueller, who blogs on Dev

OPS ideas: "... system administration and system administrators have

allowed themselves to lag in maturity behind what the state of the art is."

DevOps is about bringing operations into the 21st Century.

Attending the San Francisco event were some of the people behind Puppet and

Chef and also several local startups in the Operations sphere. Luke

Kanies, the creator of Puppet, for example, seems to be attending all of

the OPS Camp events. I had a great conversation with Lee Thompson, who

co-leads the on-going DevOps Tool Chain Project that was described at an

afternoon session, and also seems to be attending most of the DevOps

events. And here is information about the project:

http://dev2ops.org/toolchain/

Another key DevOps person and an organizer of the camps is Damon Edwards

who blogs at dev2ops. Here is an excerpt from his blog on the DevOps

handle: (http://dev2ops.org/blog/2010/2/22/what-is-devops.html)

Why the name "DevOps"?

Probably because it's catchy. It's also a good mental image of the concept

at the widest scale -- when you bring Dev and Ops together you get DevOps.

There has been other terms for this idea, such as Agile Operations, Agile

Infrastructure, and Dev2Ops (a term we've been using on this blog since

2007). There is also plenty of examples of people arriving at the idea of

DevOps on their own, without calling it "DevOps". For an excellent example

of this, read this recent post by Ernest Mueller

(http://www.webadminblog.com/index.php/2010/02/17/agile-operations/) or

watch John Allspaw and [Paul] Hammond's seminal presentation "10+ Deploys Per

Day: Dev and Ops Cooperation at Flickr" from Velocity 2009

(http://velocityconference.blip.tv/file/2284377/).

For better or for worse, DevOps seems to be the name that is catching

people's imaginations.

The SF event took place in an open loft space with bare beam ceilings in

the same building that housed Bitnami. This was in the shadow of San

Francisco's Giants Stadium on a game day, so parking was an effort. I had

to park 7 blocks away and that was before the 9am start time. On the

other hand, I could have bought a scalped ticket and gone to the game

instead of the afternoon sessions.

There was good WiFi and lots of daisy-chained power cords. We sat on

folding chairs, but in other respects this was a good space with the right

amenities.

The sponsors provided cinnamon rolls and empanadas as registration period

snacks, and provided sandwiches and pizza for lunch, and there was plenty

of coffee.

The format mixed things up a bit. There was an intro to the OPS Camp

format, then a round of 5-minute lightning talks (some of which I wish

were 7 minutes). These included presenters from VMware, rPath, OpsCode, and

other companies in the operations space. As an example, James Watters,

Senior Manager of Cloud Solutions at VMware, doesn't like the "per VM"

scaling model now in use and also spoke about bypassing the 80 Mb/sec data

transfer limit on EC2 at Amazon. That got the audience's interest, but

discussions were only allowed in the main sessions, which came later.

So far, the slide decks haven't surfaced at the OPS Camp web site.

Then there was an informal UnPanel with some participants being drafted by

the organizers. These were people with skin in the Operations game who

spoke about their take on the DevOps concept and leading issues. One of the

organizers mentioned going to Cloud Camp and hearing from business types

that Cloud Computing could do away with system administration and local IT

departments. "Not so," he said: the sysadmin function was changing and IT

departments had to adapt to a wild new world.

Then, volunteer presenters listed their topics and explained what the

sessions would be about. After that, all attendees voted on the list and

the rooms and times were allocated.

There were good discussions all around and I gleaned that several people

were combining cfEngine with Subversion for a partial DevOps solution. For

large or complex environments, though, there was a need to choreograph or

sequence the infrastructure build process - and this is an area where the

DevOps Tool Chain may shine.

Be sure to join in the DevOps conversation at the upcoming OPS Camp or the

DevOps Day USA conference on June 25, 2010 in Mountain View, CA. It's the

day after O'Reilly's Velocity 2010 conference. Here's the info link for DevOps Day:

http://www.devopsdays.org/2010-us/programme/

Related materials:

Panel discussion from OpsCamp San Francisco (video):

http://dev2ops.org/blog/2010/5/18/panel-discussion-from-opscamp-san-francisco-video.html

and also:

Q&A: Ernest Mueller on bringing Agile to Operations:

http://dev2ops.org/blog/2010/4/27/qa-ernest-mueller-on-bringing-agile-to-operations.html

Next OpsCamps coming up:

OpsCamp Atlanta - June 19, 2010

http://opscamp-atlanta-2010.eventbrite.com/

OpsCamp Seattle - June 26, 2010

http://opscamp-seattle-2010.eventbrite.com/

Also noted for June:

DevOps Day USA conference on June 25, 2010 in Mountain View, CA

http://www.devopsdays.org/2010-us/registration/

Talkback: Discuss this article with The Answer Gang

Howard Dyckoff is a long-term IT professional with primary experience at

Fortune 100 and 200 firms. Before his IT career, he worked for Aviation

Week and Space Technology magazine and before that used to edit SkyCom, a

newsletter for astronomers and rocketeers. He hails from the Republic of

Brooklyn [and Polytechnic Institute] and now, after several trips to

Himalayan mountain tops, resides in the SF Bay Area with a large book

collection and several pet rocks.

Howard maintains the Technology-Events blog at

blogspot.com from which he contributes the Events listing for Linux

Gazette. Visit the blog to preview some of the next month's NewsBytes

Events.

Copyright © 2010, Howard Dyckoff. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 175 of Linux Gazette, June 2010

Pixie Chronicles: Part 3 PXE boot

By Henry Grebler

The Story So Far

In Part 1 I outlined my plans: to build a server using network

install. However, I got sidetracked by problems. In Part 2 I made some

progress and dealt with one of the problems. Now I'm going to detail

what I did when I got it right.

Quick Overview

The task is to install Fedora 10 on a machine (the target

machine) which will become a web and email server. I'm not going to

use CDs; I'm going to install from a network. So, somewhere on the

network, I need a machine to supply all the information normally

supplied by CDs or a DVD.

How booting from a disk works

When a machine is (re)started, the BIOS takes control. Typically, a

user configures the BIOS to try various devices in order (DVD/CD,

Floppy, HDD). If there is no removable disk in any drive, the boot

proceeds from the hard drive. The BIOS typically reads the first block

from the hard drive into memory and executes the code it finds

(typically GRUB). This leads to more reading and more executing.

How PXE works

When a machine using PXE is (re)started, it behaves like a DHCP

client. It broadcasts a request on a NIC using the MAC address (aka

Ethernet address) of the NIC as an identifier.

If the machine is recognised, the DHCP server sends a reply. For PXE,

the reply contains the IP address and a file name (that of the PXE boot

loader, PXELINUX in this article). The PXE client uses tftp to download the specified file into

memory and execute it. This leads to more reading and more executing.

Get the software for the PXE server

You'll need tftp-server, DHCP, syslinux.

I was already running dnsmasq as a DHCP server, so I didn't need to

install anything. There are many packages which can act as DHCP

servers. If you are already running DHCP software, you should be able

to use that.

I didn't have tftp. Getting it on a Fedora machine is just:

yum install tftp-server

PXELINUX is part of the syslinux RPM (which came as part of my

server's Fedora distribution), but it's also available on the

installation images.

Configure DHCP

Since I was already running dnsmasq as a DHCP server, I just needed

to add a couple of lines:

dhcp-host=00:D0:B7:4E:31:1B,b2,192.168.0.60

dhcp-boot=pxelinux.0

These lines say: if the host with MAC address

00:D0:B7:4E:31:1B asks, tell him his hostname is

b2, he should use IP address 192.168.0.60

and tell him to boot the file pxelinux.0 (by

implication, using tftp).

NB I'm using a non-routable IP address from the subnet

192.168.0.0/24 during the build of the server. Later,

I will configure different IP addresses in preparation

for using this server on a different subnet.

NB Although I can specify a hostname here, in this case it

acts merely as documentation because the install process will

require that the name be specified again.

In other words, at this stage, these are interim values which

may or may not be the same as final values.

Having made changes to the config file, I restart the DHCP server:

/etc/rc.d/init.d/dnsmasq restart

Configure tftp

By default, tftp, which runs from xinetd, is

turned off. Edit /etc/xinetd.d/tftp

< disable = yes

---

> disable = no

Restart xinetd:

/etc/rc.d/init.d/xinetd restart
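If you would rather script the edit than make it by hand, the change shown in the diff above could be applied with sed. This is a minimal sketch; the function name is made up, and it assumes the file contains a line of the form "disable = yes" as in the default configuration.

```shell
#!/bin/sh
# Flip "disable = yes" to "disable = no" in an xinetd service file.
enable_xinetd_service() {
    file=$1
    tmp=$(mktemp) || return 1
    # Only a line consisting of "disable = yes" (any spacing) is changed.
    sed 's/^\([[:space:]]*disable[[:space:]]*=[[:space:]]*\)yes[[:space:]]*$/\1no/' "$file" > "$tmp" &&
        cat "$tmp" > "$file"    # overwrite in place, keeping the file's owner and mode
    status=$?
    rm -f "$tmp"
    return $status
}

# Example (as root), followed by the xinetd restart shown above:
#   enable_xinetd_service /etc/xinetd.d/tftp
```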

If you examine /etc/xinetd.d/tftp, you will see that

the default configuration wants to serve /tftpboot. I

don't like to have such directories on my root partition, so I created

/tftpboot as a symlink pointing to the directory

which contained the requisite data.

mkdir /Big/PXEBootServer/tftpboot

ln -s /Big/PXEBootServer/tftpboot /

Note that the use of a symlink keeps the path short, which matters because

there are limits on path length; it also means there is less to type.

Either I wasn't thinking too clearly, or perhaps I was concerned that

I might not be able to get the net install to work, because I

downloaded 6 CD images instead of a single DVD. Knowing what I know

now, if I had to do it over, I would download just the DVD image. (The

old machine on which I want to install Fedora 10 only has a CD drive, not

DVD.)

Populate /Big/PXEBootServer/tftpboot:

One of the CD images which comes with Fedora 10 is called

Fedora-10-i386-netinst.iso; it has a copy of

pxelinux.0 and some other needed files.

mount -o loop -r /Big/downloads/Fedora-10-i386-netinst.iso /mnt2

cp /mnt2/isolinux/vmlinuz /mnt2/isolinux/initrd.img /tftpboot

cp /usr/lib/syslinux/pxelinux.0 /tftpboot

(Note: I could also have typed

cp /usr/lib/syslinux/pxelinux.0 /Big/PXEBootServer/tftpboot

but using the symlink saves typing.)

Configure pxelinux

mkdir /tftpboot/pxelinux.cfg

What do we have so far?

ls -lA /tftpboot/.

total 19316

-rw-r--r-- 1 root root 17159894 Nov 20 11:50 initrd.img

-rw-r--r-- 1 root root 13100 Feb 9 2006 pxelinux.0

drwxrwxr-x 3 root staff 4096 Nov 27 15:42 pxelinux.cfg

-rwxr-xr-x 1 root root 2567024 Nov 20 11:50 vmlinuz
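A listing like the one above can also be checked mechanically. The sketch below reports anything missing from the TFTP root; the function name is hypothetical and the list of names is simply the set of files used in this article.

```shell
#!/bin/sh
# Report which of the files used in this article are missing from a TFTP root.
# Returns 0 if everything is present, 1 otherwise.
check_tftpboot() {
    root=${1:-/tftpboot}
    missing=0
    for f in pxelinux.0 vmlinuz initrd.img; do
        if [ ! -f "$root/$f" ]; then
            echo "missing file: $root/$f"
            missing=1
        fi
    done
    if [ ! -d "$root/pxelinux.cfg" ]; then
        echo "missing directory: $root/pxelinux.cfg"
        missing=1
    fi
    return $missing
}

# Example: check_tftpboot /tftpboot && echo "TFTP root looks complete"
```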

cd /tftpboot/pxelinux.cfg

Most of the documentation says to create (touch) a whole

stack of files, but I prefer to make just two: one called

default as a backstop, and one with a name like

01-00-d0-b7-4e-31-1b. What is this?

Get the MAC address of the NIC to be used. This is nowhere near as

easy as it sounds. My PC has 2 NICs. Which is the one to use? It's

probably the one that corresponds to eth0. I

discovered which one was eth0 by first booting into

the Knoppix CD - a very good idea for all sorts of reasons (see Part

2). Once booted into Knoppix, ifconfig eth0 will give

the MAC address. In my case it was 00:D0:B7:4E:31:1B.

Now convert the colons to hyphens and upper- to lower-case:

echo 00:D0:B7:4E:31:1B | tr ':A-Z' '-a-z'

00-d0-b7-4e-31-1b

The required filename is this string preceded by 01- ie

01-00-d0-b7-4e-31-1b.

- NB Many documents disagree with me. They indicate that the file name should be in upper-case. Possibly different PXE clients operate in different ways, but my PXE client explicitly looks for lower-case.

- The 01 represents "ARP type 1", ie Ethernet.

- See /usr/share/doc/syslinux-3.10/pxelinux.doc.
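The conversion can be wrapped in a small shell function so that the same step is repeatable for other target machines. The function name is made up for illustration; the tr sets are ordered so that neither begins with a hyphen, which avoids any chance of tr mistaking a set for an option.

```shell
#!/bin/sh
# Turn a MAC address into the per-machine PXELINUX config file name.
pxe_cfg_name() {
    # Lower-case the hex digits, turn colons into hyphens, prefix "01-" (Ethernet).
    echo "01-$(echo "$1" | tr 'A-Z:' 'a-z-')"
}

pxe_cfg_name 00:D0:B7:4E:31:1B    # prints 01-00-d0-b7-4e-31-1b
```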

I chose to be very specific. I figured if the net install is

successful, I can use the same technique for other target machines. To

achieve that, each machine would be distinguished by its MAC address.

Consequently, I did not think it was a good idea to use

default for a specific machine. Rather, I configured

default to contain generic parameters to handle the

possible case of an as yet unconfigured machine performing a PXE boot.

default would also handle the case where I got the

MAC address wrong (for example while I was trying to figure out whether the

other file should be in upper-case or lower-case). Of course, the PXE

boot would behave incorrectly, but at least it would show that there

was some life in the system.
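The reason default works as a backstop is the order in which PXELINUX looks for config files. As I understand the PXELINUX documentation, after the MAC-based name it tries the client's IP address in upper-case hexadecimal, dropping one digit at a time, and finally default. Some versions try a UUID-based name first, which this sketch leaves out. The function name is hypothetical; the MAC and IP address are the ones used in this article.

```shell
#!/bin/sh
# Print, in order, the config file names a PXELINUX client would look for
# under pxelinux.cfg/ (UUID-based name omitted; see the note above).
pxe_search_order() {
    mac=$1
    ip=$2
    echo "01-$(echo "$mac" | tr 'A-Z:' 'a-z-')"

    # Split the dotted-quad address into its four decimal parts.
    old_ifs=$IFS
    IFS=.
    set -- $ip
    IFS=$old_ifs

    # Upper-case hex form of the address, then ever-shorter prefixes of it.
    hex=$(printf '%02X%02X%02X%02X' "$1" "$2" "$3" "$4")
    while [ -n "$hex" ]; do
        echo "$hex"
        hex=${hex%?}
    done
    echo default
}

pxe_search_order 00:D0:B7:4E:31:1B 192.168.0.60
```

For this machine the list starts with 01-00-d0-b7-4e-31-1b, continues with C0A8003C and its shorter prefixes, and ends with default. That is the "whole stack of files" some documentation suggests creating; only the first and last are needed here.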

Here are the contents of /tftpboot/pxelinux.cfg/default:

prompt 1

default linux

timeout 100

label linux

kernel vmlinuz

append initrd=initrd.img ramdisk_size=9216 noapic acpi=off

Readers familiar with GRUB or LILO will recognise this as a boot

config file.

default is a generic config file. I could use it for

the installation, but I'd have to type a lot of extra args at

boot: time, for example

boot: linux ks=nfs:192.168.0.3:/NFS/b2

To save typing and to provide me with documentation and an audit

trail, I've tailored a config file specifically for my current server.

And how better to tie it to the target machine than by the target

machine's MAC address? (In the absence of user intervention, MAC

addresses are unique.)

/tftpboot/pxelinux.cfg/01-00-d0-b7-4e-31-1b:

prompt 1

default lhd

timeout 100

display help.txt

f1 help.txt

f2 help.txt

f3 b2.cfg

# Boot from local disk (lhd = local hard drive)

label lhd

localboot 0

label linux

kernel vmlinuz

append initrd=initrd.img ramdisk_size=9216 noapic acpi=off

label b2

kernel vmlinuz

append initrd=initrd.img ramdisk_size=9216 noapic acpi=off ksdevice=eth0 ks=nfs:192.168.0.3:/NFS/b2

NB It may look ugly, but the append line must be a single

line. PXELINUX, which parses this file, offers no mechanism for

splitting the text over more than one line.

See /usr/share/doc/syslinux-3.10/syslinux.doc for

info on the items in the config file.

Please note that this is vastly different from the first file I used

(the one that got me into trouble). By this stage, the config file has

been refined to within an inch of its life.

Here are the little wrinkles that make this config file so much

better:

- default lhd
  tells the PXE client to use the entries under the label lhd by default

- ksdevice=eth0
  forces the install to use eth0 and avoids having anaconda (the Linux installer) ask the user which interface to use

- ks=nfs:192.168.0.3:/NFS/b2
  tells anaconda where to find the kickstart file

When the target machine is started, the PXE client will eventually

come to a prompt which says

boot:

If the user presses Enter, the machine will attempt to boot

from the local hard drive. If the user does not respond within 10

seconds (timeout 100 has units of 1/10 of a second),

the boot will continue with the default label (lhd).

Alternatively, the user can enter linux (with or

without arguments) for different behaviour. In truth, I used this

label earlier in the development of this config file; I would lose

little if I now removed this label.

If the user presses function-key 1 or function-key 2, help is

displayed. If the user presses function-key 3, the actual boot config

file is displayed (on the PXE server, b2.cfg is just a

symlink pointing to

/tftpboot/pxelinux.cfg/01-00-d0-b7-4e-31-1b). I added

the f3 entry largely for debugging and understanding

- there's nothing the user can do but choose menu options which are

displayed by help.txt.

This completes this part of the exercise. This is the point at which

you can try out the PXE process.

Try it out

Machines differ and I daresay PXEs differ. I will describe how my

machine behaves and what to expect.

When turned on, my machine, a Compaq Deskpro EP/SB Series,

announces its own Ethernet (aka MAC) address and that it is trying

DHCP. The MAC address indicates which NIC is being used. This machine

will present this MAC address to the DHCP server, which hopefully will

respond with the offer of an IP address. The MAC address is also used at PXE

boot time when searching the TFTP directory for its config file.

If the DHCP server responds, then a series of dots appears as my

machine downloads the PXE boot loader (pxelinux.0). When the boot loader is given

control it announces itself with the prompt boot:.

If you get to this point, then the PXE server has been configured

correctly, and the client and server are working harmoniously. In Part

4 I'll discuss the rest of the install.

It didn't work for me!

You might recall that in Part 1, "Lessons from Mistakes", I discussed this

subject. I will also confess that the first few times I tried to get

things going, I was not successful.

Here's what to do if things don't behave as expected.

This terminology is misleading. As much as you might like

things to work first time, if you're human, chances are they

don't. Consequently, you should expect things to not work.

(You can feel that you are batting better than average when

they do work.)

Here's what to do when things go wrong. First, the target machine is

in a very primitive state so it is not likely to be of much help.

Since the process under investigation involves a dialogue between 2

machines, you would like to monitor the conversation (A said U1, then

B said U2, then A said U3, ... ). Who was the last to speak? What did

he say? What did I expect him to say?

Ideally, you would have a third machine on the same subnet as the 2

machines (the target machine and the PXE server). But that's unlikely,

and adds very little. Clearly, the place to monitor the conversation

is on the PXE server. Use tcpdump, together with tshark and/or

ethereal (now called Wireshark).
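To keep the capture readable, it helps to restrict it to the PXE dialogue. The following sketch builds a tcpdump filter for one client; the function name is made up, and eth0 in the example is an assumed interface name on the PXE server.

```shell
#!/bin/sh
# Build a tcpdump filter that shows the PXE dialogue for one client.
pxe_capture_filter() {
    mac=$(echo "$1" | tr 'A-Z' 'a-z')
    # DHCP uses UDP ports 67/68 (replies may be broadcast); "ether host" catches
    # the client's own ARP and TFTP traffic, whatever UDP ports TFTP ends up using.
    echo "port 67 or port 68 or arp or ether host $mac"
}

# Example, to be run as root on the PXE server:
#   tcpdump -n -i eth0 "$(pxe_capture_filter 00:D0:B7:4E:31:1B)"
pxe_capture_filter 00:D0:B7:4E:31:1B
```

Filtering on port 69 alone would show only the read requests, since the file data travels between other port numbers.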

As my notes from the exercise say,

This gave me the opportunity to try and try, again and again,

turning on tcpdump and tshark, and fiddling and fiddling until

about the 15th time I got it right.

Here's the expected conversation:

PXE client sends broadcast packet with its own MAC address

PXE server sends BOOTP/DHCP reply

I see the above repeated another 3 times. Then,

PXE client arp enquiry

PXE server arp reply

The arp enquiry comes from an IP address (earlier packets have an IP

address of 0.0.0.0), and confirms that the DHCP server has sent the

client an IP address and that the client has used the value specified.

PXE client sends a RRQ (tftp read request) specifying pxelinux.0

PXE server sends the file

There should be many packets, large ones from the server (containing

the file data); small (length 4) acks from the client.

PXE client sends a RRQ specifying pxelinux.cfg/01-00-d0-b7-4e-31-1b

This is where you find out what exactly your PXE client is asking for.
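To pull just the requested file names out of a long capture, the text output of tcpdump can be filtered. A minimal sketch, assuming output in the format shown in this article; the function name and the capture file name in the example are hypothetical.

```shell
#!/bin/sh
# List the file names requested over TFTP, given tcpdump text output on stdin.
tftp_requests() {
    # Keep only lines containing a read request and print the quoted file name.
    sed -n 's/.* RRQ "\([^"]*\)".*/\1/p'
}

# Example, reading back a saved capture:
#   tcpdump -n -r pxe.pcap | tftp_requests
```

If the last name printed is not the config file you created, compare the two carefully: case and hyphens both matter.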

I guess there are zillions of other ways that things could go wrong,

but the above covers pretty much everything I can think of.

Here's a complete conversation:

12:03:06.208042 IP 0.0.0.0.68 > 255.255.255.255.67: BOOTP/DHCP, Request from 00:d0:b7:4e:31:1b, length: 548

12:03:06.243002 IP 192.168.0.3.67 > 255.255.255.255.68: BOOTP/DHCP, Reply, length: 300

12:03:07.192231 IP 0.0.0.0.68 > 255.255.255.255.67: BOOTP/DHCP, Request from 00:d0:b7:4e:31:1b, length: 548

12:03:07.192641 IP 192.168.0.3.67 > 255.255.255.255.68: BOOTP/DHCP, Reply, length: 300

12:03:09.169864 IP 0.0.0.0.68 > 255.255.255.255.67: BOOTP/DHCP, Request from 00:d0:b7:4e:31:1b, length: 548

12:03:09.170287 IP 192.168.0.3.67 > 255.255.255.255.68: BOOTP/DHCP, Reply, length: 300

12:03:13.125134 IP 0.0.0.0.68 > 255.255.255.255.67: BOOTP/DHCP, Request from 00:d0:b7:4e:31:1b, length: 548

12:03:13.142644 IP 192.168.0.3.67 > 255.255.255.255.68: BOOTP/DHCP, Reply, length: 300

12:03:13.143673 arp who-has 192.168.0.3 tell 192.168.0.60

12:03:13.143716 arp reply 192.168.0.3 is-at 00:01:6c:31:ec:77

12:03:13.143912 IP 192.168.0.60.2070 > 192.168.0.3.69: 27 RRQ "pxelinux.0" octet tsize 0

12:03:13.261195 IP 192.168.0.3.34558 > 192.168.0.60.2070: UDP, length 14

12:03:13.261391 IP 192.168.0.60.2070 > 192.168.0.3.34558: UDP, length 17

12:03:13.261601 IP 192.168.0.60.2071 > 192.168.0.3.69: 32 RRQ "pxelinux.0" octet blksize 1456

12:03:13.284171 IP 192.168.0.3.34558 > 192.168.0.60.2071: UDP, length 15

12:03:13.284367 IP 192.168.0.60.2071 > 192.168.0.3.34558: UDP, length 4

12:03:13.309059 IP 192.168.0.3.34558 > 192.168.0.60.2071: UDP, length 1460

12:03:13.310453 IP 192.168.0.60.2071 > 192.168.0.3.34558: UDP, length 4

12:03:13.333326 IP 192.168.0.3.34558 > 192.168.0.60.2071: UDP, length 1460

12:03:13.334717 IP 192.168.0.60.2071 > 192.168.0.3.34558: UDP, length 4

12:03:13.358162 IP 192.168.0.3.34558 > 192.168.0.60.2071: UDP, length 1460

12:03:13.359557 IP 192.168.0.60.2071 > 192.168.0.3.34558: UDP, length 4

12:03:13.381382 IP 192.168.0.3.34558 > 192.168.0.60.2071: UDP, length 1460

12:03:13.382769 IP 192.168.0.60.2071 > 192.168.0.3.34558: UDP, length 4

12:03:13.405583 IP 192.168.0.3.34558 > 192.168.0.60.2071: UDP, length 1460

12:03:13.406981 IP 192.168.0.60.2071 > 192.168.0.3.34558: UDP, length 4

12:03:13.430128 IP 192.168.0.3.34558 > 192.168.0.60.2071: UDP, length 1460

12:03:13.431518 IP 192.168.0.60.2071 > 192.168.0.3.34558: UDP, length 4

12:03:13.453394 IP 192.168.0.3.34558 > 192.168.0.60.2071: UDP, length 1460

12:03:13.454782 IP 192.168.0.60.2071 > 192.168.0.3.34558: UDP, length 4

12:03:13.478026 IP 192.168.0.3.34558 > 192.168.0.60.2071: UDP, length 1460

12:03:13.479420 IP 192.168.0.60.2071 > 192.168.0.3.34558: UDP, length 4

12:03:13.503527 IP 192.168.0.3.34558 > 192.168.0.60.2071: UDP, length 1456

12:03:13.504917 IP 192.168.0.60.2071 > 192.168.0.3.34558: UDP, length 4

12:03:13.517760 IP 192.168.0.60.57089 > 192.168.0.3.69: 63 RRQ "pxelinux.cfg/01-00-d0-b7-4e-31-1b" octet tsize 0 blks

12:03:13.544383 IP 192.168.0.3.34558 > 192.168.0.60.57089: UDP, length 25

12:03:13.544592 IP 192.168.0.60.57089 > 192.168.0.3.34558: UDP, length 4

12:03:13.584227 IP 192.168.0.3.34558 > 192.168.0.60.57089: UDP, length 373

12:03:13.584728 IP 192.168.0.60.57089 > 192.168.0.3.34558: UDP, length 4

12:03:13.584802 IP 192.168.0.60.57090 > 192.168.0.3.69: 38 RRQ "help.txt" octet tsize 0 blksize 1440

12:03:13.615601 IP 192.168.0.3.34559 > 192.168.0.60.57090: UDP, length 25

12:03:13.615803 IP 192.168.0.60.57090 > 192.168.0.3.34559: UDP, length 4

12:03:13.639528 IP 192.168.0.3.34559 > 192.168.0.60.57090: UDP, length 368

12:03:13.640023 IP 192.168.0.60.57090 > 192.168.0.3.34559: UDP, length 4

12:03:18.259021 arp who-has 192.168.0.60 tell 192.168.0.3

12:03:18.259210 arp reply 192.168.0.60 is-at 00:d0:b7:4e:31:1b
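
When a capture is this long, it can help to pull out just the read requests. The following is a rough sketch that assumes the tcpdump text format shown above (other tcpdump versions may print TFTP packets differently). The function name and the sample list are only for illustration; the sample lines are copied from the trace.

```python
import re

# A TFTP read request appears in the tcpdump text as: RRQ "filename"
RRQ = re.compile(r'RRQ "([^"]+)"')

def requested_files(lines):
    """Return (timestamp, filename) for every TFTP read request in the capture text."""
    found = []
    for line in lines:
        m = RRQ.search(line)
        if m:
            # The timestamp is the first whitespace-separated field of the line.
            found.append((line.split()[0], m.group(1)))
    return found

sample = [
    '12:03:13.143912 IP 192.168.0.60.2070 > 192.168.0.3.69: 27 RRQ "pxelinux.0" octet tsize 0',
    '12:03:13.261195 IP 192.168.0.3.34558 > 192.168.0.60.2070: UDP, length 14',
    '12:03:13.517760 IP 192.168.0.60.57089 > 192.168.0.3.69: 63 RRQ "pxelinux.cfg/01-00-d0-b7-4e-31-1b" octet tsize 0 blks',
    '12:03:13.584802 IP 192.168.0.60.57090 > 192.168.0.3.69: 38 RRQ "help.txt" octet tsize 0 blksize 1440',
]

for when, name in requested_files(sample):
    print(when, name)
```

Any file the client asks for that is not under /tftpboot is a good first suspect when the boot stalls.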

/tftpboot/help.txt:

The default is to boot from the local hard drive.

You can enter one of:

lhd (default) boot from local hard drive

linux (legacy) normal (ie manual) install

b2 (hmg addition) kickstart install for b2

ksdevice=eth0

ks=nfs:192.168.0.3:/NFS/b2

f3 display the current boot config file

The final contents of /tftpboot:

ls -lAt /tftpboot/.

total 19328

drwxrwxr-x 3 root staff 4096 Dec 21 2008 pxelinux.cfg

-rw-rw-r-- 1 root staff 364 Dec 7 2008 help.txt

lrwxrwxrwx 1 root staff 33 Dec 7 2008 b2.cfg -> pxelinux.cfg/01-00-d0-b7-4e-31-1b

-rw-r--r-- 1 root root 17159894 Nov 20 2008 initrd.img

-rwxr-xr-x 1 root root 2567024 Nov 20 2008 vmlinuz

-rw-r--r-- 1 root root 13100 Feb 9 2006 pxelinux.0

ls -lAt /tftpboot/pxelinux.cfg/

total 24

-rw-rw-r-- 1 root staff 369 Dec 7 2008 01-00-d0-b7-4e-31-1b

-rw-rw-r-- 1 root staff 123 Nov 26 2008 default
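
The file sizes in these listings can be checked against the packet lengths in the trace. A TFTP DATA packet carries a 4-byte header (opcode and block number) plus up to one block of file data, and the transfer ends with a block shorter than the negotiated block size. The sketch below works that out; the function name is hypothetical, and it assumes the server accepted the block size the client asked for.

```python
def tftp_data_lengths(file_size, blksize):
    """UDP payload lengths of the DATA packets needed to send file_size bytes."""
    header = 4  # 2-byte opcode + 2-byte block number
    full, rest = divmod(file_size, blksize)
    # Full-sized blocks first, then one short (possibly empty) final block.
    return [blksize + header] * full + [rest + header]

# pxelinux.0 is 13100 bytes and was fetched with blksize 1456.
print(tftp_data_lengths(13100, 1456))

# help.txt is 364 bytes and was fetched with blksize 1440.
print(tftp_data_lengths(364, 1440))
```

For pxelinux.0 this gives eight packets of 1460 bytes followed by one of 1456, and for help.txt a single packet of 368, which is what the capture shows. A transfer that stops before the short final packet has not completed.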

Resources:

http://www.thekelleys.org.uk/dnsmasq/doc.html

http://fedoraproject.org/en/get-fedora

ftp://mirror.optus.net/fedora/linux/releases/10/Fedora/i386/iso/Fedora-10-i386*

References:

http://linux-sxs.org/internet_serving/pxeboot.html

http://www.stanford.edu/~alfw/PXE-Kickstart/PXE-Kickstart-6.html

http://syslinux.zytor.com/wiki/index.php/PXELINUX

http://docs.fedoraproject.org/release-notes/f10preview/en_US/What_is_New_for_Installation_and_Live_Images.html

fedora-install-guide-en_US/sn-automating-installation.html

http://www.linuxdevcenter.com/pub/a/linux/2004/11/04/advanced_kickstart.html

![[BIO]](../gx/authors/grebler.jpg)

Henry has spent his days working with computers, mostly for computer

manufacturers or software developers. His early computer experience

includes relics such as punch cards, paper tape and mag tape. It is

his darkest secret that he has been paid to do the sorts of things he

would have paid money to be allowed to do. Just don't tell any of his

employers.

He has used Linux as his personal home desktop since the family got its

first PC in 1996. Back then, when the family shared the one PC, it was a

dual-boot Windows/Slackware setup. Now that each member has his/her own

computer, Henry somehow survives in a purely Linux world.

He lives in a suburb of Melbourne, Australia.

Copyright © 2010, Henry Grebler. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 175 of Linux Gazette, June 2010

Maemo vs Android

By Jeff Hoogland

There is no doubt that Linux

will be the dominant player in the mobile market by the end of 2010. This

is largely thanks to Google's Android OS, which has been

appearing on more handsets than I can count over the past few months. Android is

easily the most popular mobile Linux operating system, but it is not the

only one. I have made more than a few posts about my Nokia

N900, which is another mobile device that runs a variation of Linux

known as Maemo.

I recently came into possession of an Android-powered

device of my own, and I was curious to see what all

the fuss was about. For the last week I have retired my N900 to the back

seat and had my SIM card inserted in my Android-powered Kaiser just to see

how Google's mobile operating system handles itself in comparison to Maemo.

I am going to compare and contrast the two on the following key points:

- Hardware Selection - What

hardware can you run the operating system on?

- Computing - Does the OS function as you

would expect a computer in 2010 to?

- Phone - Both operating systems dial out, but which functions

as a phone more efficiently?

- Applications - Apps, apps, apps! How is the application

selection on the OS?

- Internet Usage - Our mobile devices are our connection to the WWW. Which OS

shines when surfing the web?

Hardware Selection -

There is no argument about this one: one of the most powerful things about

Android is its ability to run on a multitude of hand-helds (even hand-helds

it wasn't initially intended for!). Big and small. Capacitive screen and

resistive screen. Slide-out keyboard, stationary keyboard, flipping

keyboard - heck, even no keyboard at all! There is an Android device out

there to suit just about everyone's needs.

Hardware Selection 10/10 -

Android Total 10/10

Maemo, on the other hand, currently resides only on the N900. While the N900 is powerful,

unique, and well-made hardware, there is not much choice in the matter if

you would like a Maemo-based hand-held. The N900 is it.

Hardware Selection 6/10 -

Maemo Total 6/10

Computing -

Our hand-helds are getting more and more powerful. With each new release

they become closer and closer to being full-blown computers - as such they

require full-blown operating systems. Since Android runs on a wide array of

devices, I do not think it would be fair to compare performance between the

two operating systems (as your mileage will vary with your hardware).

Instead, I am going to focus on the aspects of the GUI and how the operating

system handles itself.

In general, Android looks and feels like a (smart) phone, which isn't bad

depending on what you are looking for. Personally I find multitasking on

Android counterintuitive when compared to multitasking on a full-sized

computer. I say this because when you press your "home" button to get back

to your Android desktop the application you had open has to be reopened

from its launcher icon or by a long press of the home key - not from a

task bar/list of open applications like most operating systems have.

Android provides four desktop spaces on which you can place

widgets/application launchers to your heart's content. That is a good thing

if you have a lot of applications on your Android device - because once you

start to get a whole lot of them installed, they all get lumped together in

your application selector, making it hard to find the one you want.