...making Linux just a little more fun!

The Mailbag

HELP WANTED : Article Ideas

Submit comments about articles, or articles themselves (after reading our guidelines) to The Editors of Linux Gazette, and technical answers and tips about Linux to The Answer Gang.

Running Apache and AOLServer Together

Running Apache and AOLServer Together

Sun, 15 Jan 2006 18:25:11 +0000

Martin O'Sullivan (

martin_osullivan from vodafone.ie)

Hi Jimmy

this sounds like something you would know the answer to.

Short answer, no. I'm cc:ing the Answer Gang -- someone might have done

something similarly insane once

Running Apache and AOLServer Together

I want to do the following

Run two web sites off one server using two different http daemons

1 Apache listening on port 80

2 AOLServer listening on port 8080

The apache server will be accessed by using url http://s1.com

The AOLServer will be accessed by using url http://s2.com

The Names s1.com and s2.com are pointing at the same IP Address.

If apache gets a request for www.s1.com it deals with it.

If apache gets a request for www.s2.com it says not for me and

Reads its proxy entry, which tells it go to AOLServer on port 8080

The Proxy stuff is working on apache.

Problem apache won't do this for me (I not sure if this scenario is even

possible)

My entry in the apache conf file is as follows.

ProxyRequests Off

<Proxy *>

Order deny,allow

Allow from all

</Proxy>

ProxyPass /s1.com http://s1.com:80/

ProxyPass /s2.com http://s2.com:8080/

Why such a ridiculous set-up (Very long story)

I know about apache Virtual Hosting option but can't use it in this

situation.

Can anyone help?

http://forums.openhosting.com/viewtopic.php?t=105

It has been posted to the following forum

http://www.openacs.org/forums/message-view?message%5fid=361728

http://forums.openhosting.com/viewtopic.php?t=105

Martin O'Sullivan

web: http://martinri.freeshell.org

E-mail martin_osullivan@vodafone.ie

Ultra-cool

Ultra-cool

Tue, 3 Jan 2006 05:55:54 -0500

Benjamin A. Okopnik (

LG Editor)

[Heather] Encountering anything that you find is such a cool Thing To Have (if

you're a linux sort) that you just wish you had three of it for your birthday?

Actually, hearing that somebody not only got some new

at-the-fringe-of-linuxing thing like this, and how they got to using it,

could indeed Make Linux Just A Little More Fun - check out our

Author's FAQ and then send article ideas to articles@ where our articles

team can take a look.

In the "not (yet) Linux" category: this is the cool gadget of the year

- tablet computing on paper. I see some very, very interesting possibilities

in the future for this thing if it gets into the mainstream.

http://www.mobilityguru.com/2005/12/19/pentop_computing_is_more_than_a_kids

http://www.flypentop.com

* Ben Okopnik

[Heather] From the awwwwwwwwwwwwwwwwwwwwww

department:

department:

"This project provides 'executables' that enable you to make your own

soft-toy Linux penguin. To put it straight: You can find sewing

patterns and a community to sew your own soft toy or stuffed Linux

Tux penguin here."

http://www.free-penguin.org

Saw this here: http://www.tenslashsix.com/?p=102 -- scroll down to the

picture of the gadget sitting beside a beer bottle -- another USB

powered mini-server.

It's a Blackdog (http://www.projectblackdog.com), and uses the

Linux-USB Gadget stuff (http://www.linux-usb.org/gadget) to do Ethernet

over USB and USB Mass Storage. It also comes with XMing

(http://freedesktop.org/wiki/Xming) so you can use X from Windows.

[Heather] Anybody wanna try putting a USB gadget-computer inside of Tux and then

using one of those odd pens as a real data entry tool to take good bits

back to your penguin? Glory awaits!

public ntp time server clusters

public ntp time server clusters

Mon, 23 Jan 2006 11:56:41 -0500

Suramya Tomar (

The LG Answer Gang)

Hi Everyone,

Found the following during my one of my aimless wandering sessions on

the web and thought that it was interesting enough to share with you all.

http://www.pool.ntp.org: Public ntp time server for everyone

Quote from Site:

|

...............

The pool.ntp.org project is a big virtual cluster of timeservers

striving to provide reliable easy to use NTP service for millions of

clients without putting a strain on the big popular timeservers.

...............

|

I would have shared my ntp server if I was running one and since one of

the requirements is that your IP has to be a static IP I can't use my

home system to run one and share it (Its IP hasn't changed in a while

but its not static

). But if any of you nice people feel like sharing

then please feel free to join the cluster. I think its a worthwhile project.

). But if any of you nice people feel like sharing

then please feel free to join the cluster. I think its a worthwhile project.

Well this is all for now. Hope you found this useful.

Thanks,

Suramya

tput cwin question

tput cwin question

Sun, 5 Feb 2006 22:12:36 -0700

Mike Wright (

mike.wright from mail.com)

Hi, I have been trying to figure out exactly what the 'cwin' option to the tput

command really means.

What I'm hoping that it will let me do is to create another window in the

current terminal using a section of the screen to output listings from

different commands that will be executed from numbered menu options. And if the

output is several screens then it can be presented a few lines at a time to the

user with the option to page down through the many screens of output while not

disturbing the menu options at the top of the screen nor the status bars at the

bottom of the screen.

If you could provide an example of how to use the 'cwin' option and 'wingo'

option to the tput command I would be so happy. And I would appreciate it very

much. I've read all of the documentation that I have been able to find and

either the documentation for these two options doesn't exist or nobody uses

these two options.

Any help that you can provide with this will be greatly appreciated.

Here is an example of how the screen would look if I could do what I wanted to

do.

Please make your selection:

1. List all VxVM Volumes

2. List all VxVM Disk Groups

3. List all VxVM Disks

4. Quit

Output of command selection # 2

******************************************************************************

*

datadg

*

*

syslogsdg

*

*

inventorydg1

*

*

inventorydg2

*

*

inventorydg3

*

* [ Press the space bar to page down ] *

******************************************************************************

Current Time: 3:19 AM Hostname: server1

F1 DiskSuite F2 VxVM F3 Exit

[Heather] Your kindly editor suspects the borders of this screen have been mangled

by our mailreaders, nonetheless ... it's genuinely interesting stuff. I

recall that a long while back the text form of YaST (SuSE's installer

and admin tool) used tput, but I don't know if this is still the case.

If anyone would like to tackle an article demystifying tput - or even

modern curses programming, for that matter - drop us a line!

Thank you.

Sincerely,

Stale Ruffles

GENERAL MAIL

reader comments to articles

reader comments to articles

Tue, 23 Jan 2006 23:38:07 -0500

Edgar_Howell (

Edgar_Howell from web.de)

Greetings,

Once again a couple of readers have responded with very useful

comments, well worth making available to other readers.

1. CUPS Password

A reader who didn't want public mention let me know that the

following command takes care of my problem with the CUPS password:

lppasswd -a root -g sys

He suggests entering root's password, when asked.

2. Installation Software on External Media

And Dennis Kibbe of the Phoenix Linux Users Group pointed out

that for an additional $10 Dell will in fact provide the WinXP

Installation CD.

To be honest I don't know whether that isn't an option on this

side of the pond or I just didn't know about it. Windows

awareness is not one of my strengths.

Ignoring philosophical and ethical considerations, that certainly

is a far more pragmatic approach and I almost certainly would

have coughed up the $10.

3. Installation Software, part 2

Recently a colleague gave me an article dealing with the problem

of installation media and the suggestion in it might be of interest

to anyone in a similar situation, although how long this might

remain valid is anyone's guess. Note that this addresses the

operating system only, not any other software the manufacturer

of the hardware might have included.

Basically the premise is that all that is needed is the branch "i386"

from either some partition on the hard drive or a "recovery CD".

Inside this directory is a DOS executable, "WINNT.EXE", that installs

the operating system from the other contents of that directory.

Aparently there are complications in the boot process such that

one needs a particular boot program, available on the CDs with earlier

versions, which I of course don't have, so no way to make a

bootable installation medium.

It was also mentioned that this directory should be over 420 MB in

size and contain close to 6000 files. What I found was almost

800 MB and that clearly wouldn't fit on a CD. So I tar'd it

together and pushed it over the network onto another machine.

There I blew away everything under '98 that didn't look like DOS,

changed into the directory "i386" and then started the installer,

WINNT.EXE. This produced a DOS-style screen with the heading

"Windows XP Setup" and a question about the installation directory,

which I left as it was. Another screen or two later it complained

about not finding 593 MB of free disk space and broke off the

installation. But that was really all I wanted to see -- I have

no use for this software anywhere else.

I don't wish on anyone the headache of trying to teach Windows some

manners. But should co-existence become necessary for you, I hope

this information might be of help for however long that situation

may last. And best wishes for a quick recovery.

*** DISCLAIMER ***

This procedure may be illegal where you live!

Here, in Germany, many years ago the courts decided that there is

no inherent relation between software and hardware and that one

can sell a used software license as legitimately as used hardware.

This requires, of course, retaining no copies of the software.

From this it seems reasonably to follow that one may move any

software from any hardware to any other, as long as at no time

the number of valid licenses is exceeded. But prudent data

processing practice dictates at least one back-up copy of all

data and software. However, since over-riding copy-protection and

encryption is a violation of the law here, it almost certainly is

illegal to do this with software so protected.

I'm not a lawyer. You need to know the rules on your own turf.

ech

KUDOS - 1st TIME READER, IMPRESSED!

KUDOS - 1st TIME READER, IMPRESSED!

Fri, 3 Feb 2006 05:07:29 -0600

rion (

rion from mchsi.com)

for the text, your pages d/l quick!

most "help sites" sugar too much..

most "help sites" sugar too much..

i found:

issue76/tag/9.html ;p

sigkill 13 & the 'local' runs only in a func..

i remember reading that man, earlier

..hmm latenight bragging time!

[Heather] Some not linuxy things, snipped.

moreover..

http://linuxgazette.net/issue60/issue60.html#tag/10

is down..

..i had a question bout gpm & blocks of solid color invading my

screen..!!! well, i ctrl-key it, but it makes.. hulk smash.. :sigh:

..i had a question bout gpm & blocks of solid color invading my

screen..!!! well, i ctrl-key it, but it makes.. hulk smash.. :sigh:

:Turns the channel:

i think ill go grab some flashgames or something.. later!

chris

[Heather] Thanks Chris, for taking a moment to write! Have fun gaming!

Slashdotted!

Slashdotted!

Fri, 06 Jan 2006 22:35:16 +0000

Jimmy O'Regan (

The LG Answer Gang)

http://linux.slashdot.org/linux/06/01/06/1539235.shtml?tid=198&tid=106

"From the some-of-this-content-may-be-inappropriate-for-young-readers dept."

Yes, "Benchmarking Linux Filesystems Part II" got us back onto Slashdot,

to a page almost entirely filled with comments bitching about how the

benchmarks were done. (But, it wouldn't be Slashdot...)

Re: "Stepper motor driver for your Linux Computer" (Sreejith

Re: "Stepper motor driver for your Linux Computer" (Sreejith

Sat, 14 Jan 2006 09:04:43 -0500

Jan-Benedict Glaw (

jbglaw from lug-owl.de)

Question by The Answer Gang , sreejithsmadhavan@yahoo.com (tag from lists.linuxgazette.net)

[Ben] Hi, Jan-Benedict!

I'm going to CC this to the author and The Answer Gang

Hi!

I've read that article (http://linuxgazette.net/122/sreejith.html) and

I wonder who reviewed that one.

[Ben] Well, David S. Richardson and I both proofed it for grammatical and

stylistic errors, but unfortunately, I don't think we have anyone on the

proofing team who can do good technical review for C: my own C-relevant

experience, for example, was back in the days of _DOS._ Want to

volunteer?

Uhm, yes, I think so.

[Heather] Hooray!

[Ben]

Great! I'll contact you off-list, then.

The wiring diagrams and timing diagrams for a stepper motor are indeed

correct and helpful, but the module's source code isn't, for numerous

reasons. Even the minimalistic module is plain wrong--nowadays.

Let's look at the source:

* "#define MODULE"

You realized that this is 2.2.x material? We're at 2.6.x right

now!

[Ben]

I note that he did specify that this was for a 2.4.24 kernel. That's

why, when I tried to compile the code and it failed (I'm at 2.6.13.3,

myself), I let it go.

* "#include <asm/uaccess.h>"

We're driving a 8255, that's not rocket science. There's

absolutely no need to write just another driver for a normal PC

parport: there is one already. To be exact, there's a complete

framework to drive anything that has a DB-25 parport connector

outside at the box, irrelevant by what kind of chip this is driven

(thing SGI machines, Amigas, PowerPC, ...). This module would only

work on a PeeCee, nothing else.

* static int pattern[2][8][8]

...isn't even initialized fully. Also, some macros could probably

help making all the numbers readable...

* MOD_INC_USE_COUNT / MOD_DEC_USE_COUNT

Again, this is 2.2.x material and should be dead since long ago.

* Port access to the 8255

This module doesn't register its I/O space, so it could fuck up a

already loaded parport driver.

* Last but not least: it doesn't even work!

...because it doesn't actually access the hardware at all!

[Heather] As noted later, it does work for Sreejith, for a limited case, which

alas, you readers probably don't have.

If this had been published some 6 years ago, it would probably fall

under the "this is a quick'n'dirty hack" rule, but today, I guess

something like that shouldn't be published at all, because it aims

towards quite a wrong direction. Not to imagine that anybody might

think this is good programming style...

Well... if you can show a better way to do this, I'm sure that our

readers would appreciate it - and we'd be glad to publish it as a

followup to the article. Otherwise, I don't know that there's anything

we can do to improve the situation.

Regards,

* Ben Okopnik *

[jra]

Publish his response, and put a tag pointing to it on the original

article?

[thomas]

Well, yes. This is something we've always done.

[Ben]

Yeah, but that's not a fix - just a problem description. I'm trying

for both. Ya know: LG, all about reader participation...

[jra]

True. But even a total failure can always serve as a bad example.

I've got some code around to access the ppdev API. With this, the

module isn't needed at all and thus the resulting application only

consists of simple and portable userland code.

[Ben] I think that, even though the implementation is broken, the underlying

premise of Sreejith's article is valid; our readers would, in my

opinion, find it interesting to see a simple introduction on accessing

hardware by writing a module. Do you think that the article is

salvageable as it is (i.e., is it possible to make the code work -

regardless of whether another tool for it exists or not), or is it just

broken from the start?

@Sreejith: http://lug-owl.de/~jbglaw/software/steckdose/steckdose.c is

some code using the ppdev API to access the parport lines. Maybe you

want to have a look at that? I'd try to write some code driving a

stepper motor in full-step mode, but since I don't have one handy

right now, you'd need to test that.

The code mentioned above drives a shift register connected to a

parport (It is driving some home-made hardware; at the parallel

outputs of the shift register, there are relays switching my computers

on and off. That way I don't need to go down into the cellar to switch

a machine on or off

It is quite simple:

for (each bit to be pushed serially into the shift register) {

put_one_bit_on_the_serial-in_data_line();

rise_clock_line();

lower_clock_line();

}

move_shift-state_into_output_latches();

Because shift registers output their falling-out bit at the end, this

can be fed into the next shift register. That way, I can cascade a

dozens of these chips to control 100 lines.

Maybe something like that could even be presented as an article, but

to date I haven't production-level PCBs, but only hand-made ones.

MfG, JBG

Jan-Benedict Glaw jbglaw@lug-owl.de

[Heather] Ben gets an answer from the article author, with a bit of after-the-fact

concern about the original reasoning, and maybe a bit of self-abashment

about accepting it too close to deadline.

On Wed, Jan 18, 2006 at 02:54:43AM -0800, Sreejith Nair wrote:

Hello sir,

I noticed those serious mistakes in my article.

[Ben] I would have appreciated it if you had said something about them when

submitting it rather than presenting them as useful code, then.

Actually i did

this experiment as my Mini project in the 4th semester of my B Tech course(June

2003). At that time i was using 2.2 kernel and i learned the basics in the

same. But now i am working with Fedora core 1 (2.4.22 -1.2115.nptl). So what

happened is, i just modified the code for the new kernel and rectified the

compilation errors. And now i see....its a mixture of 2.2 and 2.4 things.

But it is a working code. I can control my toy stepper motor

with this code.

[Ben] Unfortunately, it doesn't compile for me - even after I've taken out the

hard-coded kernel location in the INCLUDE line:

ben@Fenrir:/tmp$ make

cc -O2 -DMODULE -D__KERNEL__ -W -Wall -I/usr/src/linux/include -c -o stepper.o stepper.c

stepper.c:1:1: warning: "MODULE" redefined

<command line>:1:1: warning: this is the location of the previous definition

In file included from /usr/src/linux/include/linux/bitops.h:77,

from /usr/src/linux/include/linux/thread_info.h:20,

from /usr/src/linux/include/linux/spinlock.h:12,

from /usr/src/linux/include/linux/capability.h:45,

from /usr/src/linux/include/linux/sched.h:7,

from /usr/src/linux/include/linux/module.h:10,

from stepper.c:3:

/usr/src/linux/include/asm/bitops.h: In function 'find_first_bit':

/usr/src/linux/include/asm/bitops.h:339: warning: comparison between signed and unsigned

[ ~150 similar lines elided ]

make: *** [stepper.o] Error 1

For testing, i was just printing the hex value to the console instead of

writing to

parallel port. For controlling stepper motor, the -printf("%d\n",pattern[i][j]

[k]); -

statement should be replaced with - outb(pattern[i][j][k],LPT_BASE); . Anyway

this is

a serious mistake and I deeply regret and apologize for that.

[Ben] Well, we'll let our readers know not to expect this code to work, then;

publishing this exchange should do it. Fortunately, every cloud has a

silver lining: Jan-Benedict Glaw, who noticed the problem initially, has

agreed to review future C code for us so that this kind of thing doesn't

happen again.

Nowadays, because of my workload, i am not able to explore

"The Linux World" that MUCH!... My intention was not to develop a professional

stepper motor driver as i know that there already exists a parallel port driver

in Linux. I just wanted to share the basics of device driver coding through an

example.

[Ben] Sreejith, may I ask why you wrote this article in the first place?

Actually, never mind: the above question is best treated as rhetorical.

Instead, I'll just pretend that I'm having this exchange with

$AVERAGE_AUTHOR - a fellow who just wrote an article that failed in the

same way as yours did, and from whom I got the same above excuse when I

asked him about it (and to whom, unbeknownst to him, I've slipped a

bunch of truth serum in his morning tea.)

- Me: So, why'd you write this thing?

- AA: So I could get recognition and respect for being a Cool

Kernel-Hacking Dude, of course! Then I'd be able to get all the

chicks! Oooh, look at the pretty colors...

- Me: (making a note to decrease the dosage the next time) So what do

you think is going to happen NOW, after it's become obvious that

your code doesn't work?

- AA: (in a mild panic) Umm, maybe I can make some really good excuses?

Maybe people who believe them will still think I'm cool...

- Me: Uh-huh. And what do you think will happen when your excuses fall

through, and people discover that you not only made a mistake,

but tried to cover it up?

- AA: (full-blown panic, RUN AWAY!!!)

Does this scenario look familiar?

The problem is that there's a "best effort" clause hidden in doing any

kind of work for the Open Source community. The coin of Open Source is

respect - and when you put out less than your best effort, then that

coin might not only be a bit slow in coming, but might not appear at all

- and will disappear instantly when that respect is discovered to be

unearned. Note that I didn't say "will disappear when a mistake is

discovered" - everyone makes mistakes. The deciding factor is in how you

handle having made the mistake: making excuses such as "my intention

was not to develop a professional [application]" destroys the last

possibility of getting paid in that coin, whereas - for example -

admitting the error honestly and taking a few minutes to produce working

code that could be published as a corrective note the following month

would have salvaged some of the credit.

The staff here at LG put out their best effort to edit, format, and

publish the material that is submitted to us; I can guarantee you that

all of it is hard work, but despite that, excuses like "well, it's not a

professional publication" don't have much currency here.

[Heather] We've grown increasingly towards professional traits over the years,

cover art and so on. But we've had a formal editor ever since Marjorie

took over from John Fisk many years ago. Sometimes we fail to edit

as professionally as we'd like - and sometimes what we are aiming for is

a homey, at a real person's desk kind of feeling - and we do it for love

of linux and not the money - but that shouldn't make the best effort a

sad one.

[Ben] By that same

exact token, we expect that our authors - especially those submitting

code - have made their best effort to make sure that their code works

in the current Linux environment, and have done at least some minimal

testing. Perhaps that assumption was unwarranted in this case, for

which I personally apologize to our readers (since I was the one who

accepted the article for publication); however, that is our default

expectation. I don't see how ANY interaction based on respect can imply

anything else.

Just as a relevant technical note, by the way: the 2.6 kernel has been

available for download since 17-Dec-2003 (according to the timestamp of

http://www.kernel.org/pub/linux/kernel/v2.6/linux-2.6.0.tar.bz2) - I see

no reason why an article directed at kernel-related coding would cover

the 2.4 kernel as its primary target.

* Ben Okopnik * Editor-in-Chief, Linux Gazette * http://linuxgazette.net *

GAZETTE MATTERS

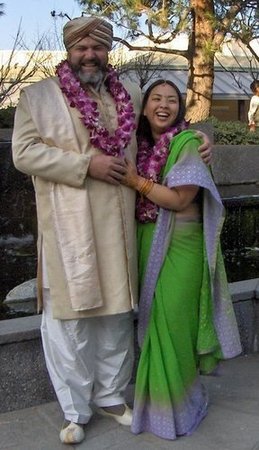

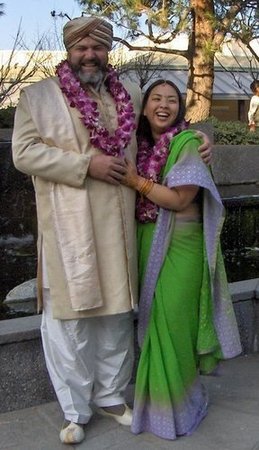

Our Editor In Chief is now married!

Our Editor In Chief is now married!

Sat, 28 Jan 2006 15:17:00 -0800

Heather Stern (

The Answer Gang's Editor Gal)

I'm very pleased to announce that our Editor In Chief, Ben Okopnik, has

married his dear Kat.

The wedding was delightful (give or take getting lost on the way there),

the music was fun, the food was fine, and the nearby outdoor gardens were

pretty, too. Those of us present who did not immediately have to leave

again joined the happy pair afterwards at a nearby restaurant whose chef

is, in my not so humble opinion, rightly famous.

It was great. Go see the Back Page for

a picture of the happy pair.

Rumors that his biggest client paid for the honeymoon turn out to be

halfway well founded in that they invited him to go for pay to the kind

of place that he and his bride would have been delighted to go to

anyway. Smiles from the universe are always appreciated

How to get linux gazette in pdf or ps format

How to get linux gazette in pdf or ps format

Tue, 03 Jan 2006 17:49:18 +0530

Shirish Agarwal (

shirishag75 from gmail.com)

Hi all,

I saw that one can get LG either as a single html or group of

html pages, I would like to have them in a self-contained ps, pdf or

even an openoffice document. The simple reason is that one can do this

off-line more easily. I did try to get this answer from the various

knowledge bases which u have mentioned on the site but wasn't able to

get even close to that. Hope u can help here & maybe also update the

same in the knowledge base for future. Thanx in advance.

Shirish Agarwal

Life is a dream Enjoy it!

Creative Commons, Attribution

Non-commercial, non-derivative

- [Rick Moen]

Well, I notice upon a quick search that you can use:

- http://directory.fsf.org/print/misc/html2pdf.html

[K.-H.]

There is a little commandline tool named "html2ps" which will fetch e.g.

the TWDT page and convert it into a ps file.

for the current linuxgazette this would be:

html2ps -o test.ps -U http://linuxgazette.net/121/TWDT.html

-U is supposed to generate the colored background (e.g. code) but

doesn't do it for me, and -D would generate DSC info so you can go back

and forward.

Using ps2pdf you can convert it again into a pdf file.

Otherwise there is always the print-to-file in your browser which

generates postscript as well (at least in Linux).

Hope that helps you along....

Talkback links

Talkback links

Sat, 14 Jan 2006 09:17:00 -0500

Benjamin A. Okopnik (

LG Editor)

with a few replies by The Answer Gang (tag from lists.linuxgazette.net)

Hey, everyone:

What do you think of the idea of "Talkback" links at the top of each

article? Perhaps something like this:

<a href="mailto:tag@lists.linuxgazette.net?subject='Talkback:<article_name>'">

Discuss this article with The Answer Gang

</a>

It works on several different levels, I think.

[Thomas]

This was trialed for a while when LG was at SSC. It worked relatively

well for some articles, except that it tended to be abused by some (c.f.

my posting to TAG some years ago about someone wanting to know how to

change the oil in their car.)

.

[Rick]

How do you change the oil in your car?

I think it's worth trying, Ben.

Yeah, some clueless

people might use it to piggyback their own question into TAG, but they

can be pointed right back out (i.e., 'read "Asking Questions of The

Answer Gang" at <http://linuxgazette.net/tag/ask-the-gang.html>!'), and

anyone wanting to comment on a specific article can do so easily.

Thoughts?

It wouldn't hurt to try, I suppose. What's the worst that could happen?

At the moment, any specific article feedback is often forwarded to TAG

-- since a lot of it gets sent to the author directly. So yeah... try

it.

-- Thomas Adam

[Pedro Fraile, one of the Answer Gang]

"Talkback" content at the old site did not go to the TAG list, and so

was lost when the site was transformed. With Ben's suggestion, any

useful comment or correction would appear in next month's issue and

would so be preserved.

Yes, I like it.

[Heather] Do you like it too? Let us know! Ok, so it may be a kind of skewed

sample, only people who bother to read all the way to the bottom of the

mailbag are going to answer. But it's a fair question - will it be more

fun to have a fast link at the top? That's what it's all about after

all - Making Linux Just A Little More Fun

This page edited and maintained by the Editors of Linux Gazette

HTML script maintained by Heather Stern of Starshine Technical Services, http://www.starshine.org/

Talkback: Discuss this article with The Answer Gang

Published in Issue 123 of Linux Gazette, February 2006

Re-compress your gzipp'ed files to bzip2 using a Bash script (HOWTO)

By Dave Bechtel

If you were incredibly lucky (like me), perhaps you received an external USB

hard drive for Christmas. Or perhaps you have one lying around already,

with plenty of free space. And perhaps you also read the recent

Slashdot article about compression software and have lots of fairly

sizable gzipped files laying about.

After reading the comments in that article, I was dismayed to learn that my

favorite compression tool of choice (gzip) has no error-correction

capabilities. While I deem it to be the best all-around for quick backups

with a decent compression ratio, gzip will choke if it gets a data error on

restore - and there's something to be said for data integrity.

So, having this nice shiny new USB external drive and some time on my hands, I

wrote a Bash utility script to re-compress gzip files to bzip2, using the

external drive. It takes an order of magnitude longer to compress, but at

least I'll save some space and have a hope of recovering the compressed data

if things go wrong... Right??

My particular external drive is a 120-gig that came factory-formatted as a

single FAT32 partition. Now, any Linux guru worth their salt knows that this

thing practically begs to be customized, since Fat32 has a

2GB(Linux) or 4GB(Windows) filesize limit - depending on who's writing to

it.

So, I fired up my Knoppix HD install and repartitioned it.

Nothing fancy, just good old fdisk.

Here's how it looks now:

$ fdisk -l /dev/sdb

Disk /dev/sdb: 255 heads, 63 sectors, 14593 cylinders

Units = cylinders of 16065 * 512 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 * 1 1 8032 83 Linux

/dev/sdb2 2 14593 117210240 f Win95 Ext'd (LBA)

/dev/sdb5 2 18 136552 82 Linux swap

/dev/sdb6 19 4999 40009882 c Win95 FAT32 (LBA)

/dev/sdb7 5000 5622 5004247 83 Linux

/dev/sdb8 5623 14593 72059557 83 Linux

(I did make a note of the fact that the factory-default was one big type "c",

in case I needed to go back to that.)

Notice the 40GB Fat32 partition. In my other life (sssshhh!) I run Windows

2000 Professional - and was forcibly reminded that everything after Windows

ME has a 32GB partition size limit for formatting Fat32. Note that the

limitation is on formatting - not

accessing - this is by design, and Microsoft has

publically admitted it.

After going through several free Windows tools for formatting and repartitioning

(and running into a brick wall), I eventually gave up on Windows 2000 formatting the

thing. The vendor has a utility on their website to restore the drive to

factory-default partitioning, but that doesn't really help my intended use of

the drive. I could have formatted it in Windows 98, but that's no fun - and it

would need a separate driver for the OS to recognize the drive.

So, rather than give up a perfectly usable 8GB, good old Linux to the rescue

again:

$ mkdosfs -F 32 -v -n wdfat40 /dev/sdb6

and reboot.

Presto! Windows 2000 recognizes the drive just fine now, and it passes all the

chkdsk tests. And for all you dual-booters out there, a wonderful utility

exists called Ext2IFS ( http://www.fs-driver.org/ ). This

allows NT-based systems like Windows 2000 to access ext2/ext3 partitions just

like a regular drive - read/write, so no need for NTFS!

The Linux partitions were formatted like so:

mke2fs -j -c -m1 -v /dev/sdbX

Here are the /etc/fstab entries I created for the drive, BTW:

/dev/sdb6 /mnt/wdfat40 auto

defaults,noauto,noatime,user,suid,noexec,uid=dave 0 0

/dev/sdb7 /mnt/wdlinux ext3 defaults,noauto,noatime,rw 0 0

/dev/sdb8 /mnt/wdvast ext3 defaults,noauto,noatime,rw 0 0

Note the "uid=dave" in that first line. That's so my non-root user account

will have write access to the drive by default.

Now onto the good part - the "rezip" Bash script.

At first, I started out by writing a fairly basic script with a simple

function call and manually-entered filenames. Then I sat down and took

another look at it - and practically rewrote it from scratch, with some

features that occurred to me after several test runs.

rezip Currently Features:

- Uses a simple text file of paths and filenames for input -- so you can save

the results of "find" to a file, run rezip, and the files will be

re-compressed one at a time, with a running log and no user intervention (as

long as there's free space on the destination drive.) Example:

$ find /mnt/bkps -name \*.gz > ~/rezipp-files.txt && rezip

- Automatically sorts the files to process by size, so the biggest files are

last. This allows more work to get done up front. (Believe me, this is a

consideration when your fastest computer is a 900MHz AMD Duron)

- Skips files less than 50MB in size (user defined)

- Recreates existing directory structure on the external drive and leaves the

original .gz file in place

- By default, does not overwrite existing .bz2 files so previous work doesn't

get run over. This feature was added after I found a bug where ^C won't stop

the script right away, and several hours of .bz2 output were lost. :(

Note: if you abort the script and then re-run it, you have to manually

delete the last (partial) .bz2 file it was working on, or that will be skipped

as well. This is where the log comes in handy. :)

- Heavily commented and fairly easy-to-understand (I hope!) source code

- Generates a log file, including start/end times per-file

- ...And last but not least, rezip is released under a GPL license. :)

-- KNOWN BUG(s):

During the course of writing the script, I had hard-coded most of the

defaults, such as the size of files to skip, the log file name, etc. These

were eventually changed to be variables before the script was published for LG

- so that you, the end-user, can have More Control (TM) over its actions. ;-)

I encourage everyone to READ THE SOURCE CODE before running rezip. You may

find it handy to view it in an editor that colorizes or highlights executable

syntax, such as ' mcedit ' or ' jstar '.

Comments, feature requests, bug reports, etc., are welcome.

( Don't forget to ' chmod +x rezip ' and put it somewhere in your $PATH -

/usr/local/bin is suggested. )

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/bechtel.jpg)

Bio: Born in 1972, Dave Bechtel grew up programming in Basic with Apple ][e's,

TI99 4/A, IBM PC (640K!) and a Tandy 1000SX, none of which actually had hard

drives -- 360K floppy only. And we LIKED IT! ;-)

Eventually left BASIC behind, and moved on to programming in REXX and Bash.

Got interested in Linux around 1997. Started with Red Hat and went on to

SuSE, tried several other distros and a *BSD or two, and has now settled on

Knoppix/Debian/Ubuntu, in roughly that order. Currently living in Lake

Zurich, IL.

Likes: Computers, motorcycles, Linux, reading and watching sci-fi (currently

Star Trek TOS, Stargate, and Battlestar Galactica)

Copyright © 2006, Dave Bechtel. Released under the

Open Publication license

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 123 of Linux Gazette, February 2006

uClinux on Blackfin BF533 STAMP - A DSP Linux Port

By Jesslyn Abdul Salam

Intel and Analog Devices Inc. (ADI) jointly developed the Micro Signal

Architecture (MSA) core and introduced it in December of 2000. ADI's

Blackfin processor functions as a DSP and a microcontroller. This device is

currently used for multimedia applications.

This article provides an introduction to programming the BF533

STAMP board and is intended for readers with some experience in Linux as

well as with embedded devices. It contains a basic description

of using the board for embedded programming as I have done without any

problem.

BF533 STAMP

The STAMP board features:

The STAMP board features:

- ADSP-BF533 500 MHz Blackfin Processor

- 128 MB SDRAM

- 4 MB FLASH memory

- 10/100 Ethernet Controller

- RS232 Serial Interface

- JTAG interface for debugging and flash programming

- I/O connectors for Blackfin Peripherals:

- PPI

- SPORT0 & SPORT1

- SPI

- TIMERS

- IRDA

- TWI

The BF533 STAMP runs uClinux - a Linux port for embedded

devices. It has soft real-time capabilities, meaning that it cannot

guarantee a specific interrupt or scheduler latency.

The STAMP board has been specifically designed to support the

development and porting of open source uClinux applications and includes

the full complement of memory along with serial and network interfaces. The

STAMP uClinux Kernel Board Support Package (BSP) includes a CD with a recent

copy of the open source development tools, the uClinux kernel, and the

documentation. For more details visit http://blackfin.uclinux.org.

uClinux

The uClinux kernel provides a hardware abstraction layer. The uClinux

Application Programming Interface (API) is identical for all processors

which support uClinux, allowing code to be easily ported to a different

processor core. When porting an application to a new platform, programmers

only need to address the areas specific to a particular processor -

normally, this means only the device drivers.

Getting Started

We have to note the following components of our development board:

- an Ethernet controller ( with an RJ45 Ethernet jack )

- a serial port ( DB9 serial connector - female )

- a power jack

The development workstation is essentially a Linux box which should have

- an available serial port

- terminal emulator software

- an Ethernet card

There are two potential interconnections between the workstation and the target:

- the 10 Mbps Ethernet connection

- the RS232 serial interface

Connecting the Hardware

- Connect the serial port to your host computer from the STAMP board

- Connect the Ethernet cable to your network card of your Linux box (

use a straight cable with no cross connections )

- Start the terminal emulation program. Minicom is an example of such a

program, and is included in most Linux distributions. At the bash prompt

type the following command as root:

bash# minicom -m -s /dev/ttyS0

From the configuration menu within Minicom, set the serial port connection to

- 57600 baud

- 8 data bits

- Parity none

- 1 stop bit

- H/W flow control off

Now Save the configuration and choose Exit.

- Connect the DC power cord and power up the board

- After the STAMP board powers up, you should see a U-boot start-up screen

in your terminal window. At this stage, if you allow the boot timer to count

down, the board will load the uClinux kernel from flash memory and boot it,

finally resulting in a command prompt.

GNU/Linux tool chain for the Blackfin devices

Install the binutils and the GCC tools on your machine:

[ Note: 'rpm' is RedHat-specific. Use the appropriate

package manager for your distribution to install the latest 'bfin-gcc'

version; if your distribution does not have one, use the 'alien' utility to

convert this RPM to your required format. -- Ben ]

bash# rpm -ivh bfin-gcc-3.4-4.1.i386.rpm

You may not find the same version of GCC tools on the CD. I had to

download the latest version since the one provided with CD

did not contain the required tools (e.g., the C compiler for building

programs on uClinux.) You may download the RPM from here.

Or you can visit the Blackfin website to download

the toolchain yourself.

In user mode, export the path for the tools:

bash# export PATH=/opt/uClinux/bfin-elf/bin:/opt/uClinux/bfin-uclinux/bin:$PATH

Installing the uClinux Kernel

- Copy the kernel source from the CD to your work directory

- Uncompress the Kernel source

bash# bunzip2 uClinux-dist.tar.bz2

tar -xvf uClinux-dist.tar

Now that the kernel is uncompressed in the work directory, you are ready

to start building your own uClinux kernel for the Blackfin processor.

Simple 'Hello World' Application

Traditionally, development environments and programming languages have

always begun with the 'Hello World' program; we'll follow the precedent.

Copy the following into a file called 'hello.c':

#include<stdio.h>

int main() {

printf("Hello, Welcome to Blackfin uClinux!!\n");

return 0;

}

The next step is to compile 'hello.c' on you host PC:

bash# bfin-uclinux-gcc -Wl,elf2flt hello.c -o hello

The resulting executable is named hello.

Note that the bfin-uclinux-gcc compiler is used; this compiler

is used to compile programs that run on the uClinux operating system. It

automatically links our program with the uClinux run time libraries, which

in turn call the uClinux operating system when required ( for example ) to

print to the console. The compiler collection for the Blackfin processor

also includes another compiler, bfin-elf-gcc, which is used to

compile the actual uClinux operating system and uses a different set of

libraries. If you want to try porting other RTOSs to Blackfin, you will

have to use bfin-elf-gcc.

Now we need to set up the Ethernet port on the Linux box; we'll set its

IP address to 192.168.0.2. After booting the uClinux kernel on the STAMP

board, you will have to configure its Ethernet interface as well; at the

bash prompt of the STAMP, type the following:

root:~># ifconfig eth0 192.168.0.15 up

At this point, the Ethernet link should be up. We can use FTP to

upload the 'hello' program from the host to the board. At the bash shell

prompt of the linux workstation type the following:

bash# ftp 192.168.0.15

Give the username and password as root and uClinux. Send

the 'hello' executable to the STAMP board.

Now modify the permissions and run:

root:~> chown 777 hello

root:~> ./hello

Hello, Welcome to Blackfin uClinux!!

Segmentation faults

The Blackfin processor does not have an MMU, and does not provide any

memory protection for programs. This can be demonstrated with a simple

program:

// test.c

int main ()

{

int *p;

*p=5;

}

Compile 'test.c' using our native C compiler. Try to run the resulting

executable, and you'll end up with a segmentation fault.

Now, compile the program using 'bfin-uclinux-gcc' as I described above.

Send the executable to the STAMP board using FTP, change the file

permissions, and run it.

This time, it should run without a segmentation fault. Due to this, an

unprivileged process can cause an address error, can corrupt kernel code,

and result is obvious. This is not a limitation of the Blackfin

architecture, but simply demonstrates that uClinux is designed to support

devices without an MMU.

End of Part I

This is all I know well about uClinux on Blackfin. I have to look

deeper into uClinux and I shall try to include more next time!

References

For more information, please visit http://blackfin.uclinux.org.

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/jesslyn.jpg)

I'm currently doing my final semester as a Btech in Computer Science and

Engineering in MES College of Engineering, Kuttipuram, Kerala, India. I am

really fond of everything about Linux operating systems, maybe because I

want to know more about it, and I spend most of the time on my PC for the

same. My other areas of interest include reading, web surfing, and

listening to music.

Copyright © 2006, Jesslyn Abdul Salam. Released under the

Open Publication license

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 123 of Linux Gazette, February 2006

A Short Tutorial on XMLHttpRequest()

By Bob Smith

Introduction

This tutorial will show you how to build a web page that responds to

asynchronous events. In five simple exercises, we'll show you the core of

an Asychronous JavaScript And XML (AJAX) application. You'll have to learn

a little JavaScript, but there's no requirement to learn anything about

XML. Our goal is to show you how to build a responsive web application with

a minimum investment of time.

What you should already know: You should be familiar with basic web

page creation and have a general idea of the client/server nature of web

page requests. You should also understand how CGI programs work and

have some experience with CGI programming; we use a few lines of PHP in

our exercises, but you can replace this with C or Perl if you wish.

Requirements for the examples: To do the exercises in this

tutorial, you will need a browser with JavaScript enabled. JavaScript is

on by default in most browsers, so this shouldn't require any action

on your part unless you prevously disabled it. You will also need to have

a web server that supports PHP and CGI, and the ability to upload web pages

to it. You should be ready to go if you already have Apache and PHP

installed on your PC; in fact, our exercises assume that you're using your

PC for the web server.

Application Overview

To illustrate the need for AJAX, let's consider a real-world example:

we've built a telephone answering machine

and we want the caller ID information to be presented on a web page, which

we can view while we are at home or at work. The data flow in our

application looks something like this:

(answering_machine -> syslog -> fifo -> Apache -> web_page)

Our answering machine will log caller ID information via syslog using

LOG_LOCAL3, a facility not used by any other applications on our

system, and a syslog rule in '/etc/syslog.conf' will direct messages

with this facility to a FIFO located in the Apache document directory.

In this tutorial, we will deal with getting the strings from that FIFO to

display on a web page. Writing to a FIFO is the asynchronous part of our

sample application.

Getting Started

In the first exercise, we are going to test the development

environment by running a simple PHP application. Save the following program as

getfifo.php in the document directory of your web server:

<?php

$fp = fopen("ajaxfifo", "r");

if ($fp) {

$ajaxstring = fgets($fp, 128);

fclose($fp);

}

header("Content-Type: text/html");

print($ajaxstring);

?>

Now, create a fifo in the same directory:

mkfifo ajaxfifo

Try to view

getfifo.php using your web browser; it

should hang, waiting for the read of 'ajaxfifo' to complete. Write some

data to the FIFO before the browser times out:

echo "Hello, World" > ajaxfifo

The text that you entered should appear in your browser window.

Continue on to the next exercise if the data was displayed properly.

If the data did not appear, go back and verify that the web server

and PHP are installed and working properly.

DOM in Two Lines of Code

JavaScript is an object-oriented language and treats a displayed web page

as an object. The Document Object Model, or DOM, describes the objects,

properties, and verbs (or methods) available within JavaScript.

By using the DOM, you can name different parts of a web page and can then

perform different actions on those parts.

The following exercise verifies that JavaScript is enabled in your

browser by creating a named region on a web page, and putting some

text into that named region. Copy the following program to hello.html in

the document directory of your web server:

<html>

<head>

<title>Exercise 2: Use DOM to Test JavaScript</title>

<script language=javascript type="text/javascript">

<!-- Hide script from non-JavaScript browsers

// SayHello() prints a message in the document's "hello" area

function SayHello() {

document.getElementById("hello_area").innerHTML="Hello, World";

return;

}

-->

</script>

</head>

<body onload="SayHello()">

<h3><center>Exercise 2: Use DOM to Test JavaScript</center></h3>

</p>

<div id="hello_area">This text is replaced.</div>

</body>

</html>

The above code defines a section of the web page as "hello_area"

and uses the subroutine

SayHello() to put text in that

section. Load

hello.html and verify that your browser's

JavaScript displays "Hello, World" on the web page.

Callbacks in JavaScript

A callback is a subroutine that is called when a particular event

occurs. JavaScript has a fairly complete set of events to which you

can attach a callback. The following program starts a timer using a

callback that is invoked when the page is loaded, and a callback

associated with the timer displays a counter and restarts itself.

Copy

this program to

callback.html in your server's document directory, and verify that

the count is updated in your browser window every two seconds.

<html>

<head>

<title>Exercise 3: A JavaScript Callback Demo</title>

<script language=javascript type="text/javascript">

<!-- Hide script from non-JavaScript browsers

var count;

count = 0;

// Put the current count on the page

function DisplayCount() {

// Put current time in the "count" area of the web page

document.getElementById("count_area").innerHTML=

"The count is: " + count++;

// Schedule next call to DisplayCount

setTimeout("DisplayCount()", 2000);

return;

}

-->

</script>

</head>

<body onload="DisplayCount()">

<h3><center>Exercise 3: A JavaScript Callback Demo</center></h3>

<div id="count_area">This text is replaced.</div>

</body>

</html>

How to use XMLHttpRequest()

XMLHttpRequest is a JavaScript subroutine that lets you make an

HTTP request and attach a callback to the response. Since the

response has a callback, the browser is free to continue responding

to user input. In a way, XMLHttpRequest is to JavaScript what

select() is to C - a way to wait for an event. XMLHttpRequest() is

what puts the 'A' in AJAX.

The following exercise adds only XMLHttpRequest() to the previous

examples. We request the data from getfifo.php using

XMLHttpRequest(), and tie a callback to the arrival of the

response. The response callback displays the data using a named

division per the DOM. Copy the following

code into async.html:

<html>

<head>

<title>Exercise 4: An XMLHttpRequest() Demo</title>

<script language=javascript type="text/javascript">

<!-- Hide script from non-JavaScript browsers

var req_fifo;

// GetAsyncData sends a request to read the fifo.

function GetAsyncData() {

url = "getfifo.php";

// branch for native XMLHttpRequest object

if (window.XMLHttpRequest) {

req_fifo = new XMLHttpRequest();

req_fifo.abort();

req_fifo.onreadystatechange = GotAsyncData;

req_fifo.open("POST", url, true);

req_fifo.send(null);

// branch for IE/Windows ActiveX version

} else if (window.ActiveXObject) {

req_fifo = new ActiveXObject("Microsoft.XMLHTTP");

if (req_fifo) {

req_fifo.abort();

req_fifo.onreadystatechange = GotAsyncData;

req_fifo.open("POST", url, true);

req_fifo.send();

}

}

}

// GotAsyncData is the read callback for the above XMLHttpRequest() call.

// This routine is not executed until data arrives from the request.

// We update the "fifo_data" area on the page when data does arrive.

function GotAsyncData() {

// only if req_fifo shows "loaded"

if (req_fifo.readyState != 4 || req_fifo.status != 200) {

return;

}

document.getElementById("fifo_data").innerHTML=

req_fifo.responseText;

// Schedule next call to wait for fifo data

setTimeout("GetAsyncData()", 100);

return;

}

-->

</script>

</head>

<body onload="GetAsyncData()">

<h3>Exercise 4: An XMLHttpRequest() Demo</h3><p>

<div id="fifo_data"> </div>

</body>

</html>

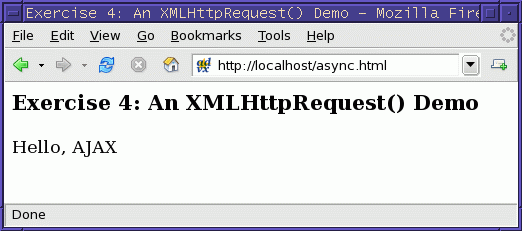

Load

async.html and, if all has gone well, everything sent

to

ajaxfifo will appear in the browser window. For example:

echo "Hello, AJAX" > ajaxfifo

An Appliance Web UI

If you were building an appliance (such as our answering machine),

you'd want your web UI to respond to events occuring in the appliance; the

exercises above show how to do this. But you might also want your

appliance UI to have some controls available to the user. The final

exercise below adds code to the previous exercise to show you how to tie

buttons in a web form to JavaScript subroutines. This exercise

demonstrates that a web UI can wait for asynchronous events and still

be interactive.

Copy async.html to a file called

webui.html and add the following subroutine to the JavaScript

code:

// setColor updates the "color_area" with the color specified

function setColor(new_color) {

color_text = "<table border=2 bgcolor=";

if ("Blue" == new_color) {

color_text += "\"Blue\">"

}

else if ("Red" == new_color) {

color_text += "\"Red\">"

}

else { // shouldn't get here

color_text += "\"Green\">"

}

color_text += "<tr><td>A little color</td></tr></table>";

document.getElementById("color_area").innerHTML=color_text;

}

Add a form to the HTML part of

webui.html:

<body onload="GetAsyncData()">

<h3>Exercise 5: A Trivial Web UI</h3><p>

<div id="fifo_data"> </div>

<p>

<div id="color_area">No color yet.</div><p>

<form>

<input type=button value="Blue" onClick="setColor('Blue')">

<input type=button value="Red" onClick="setColor('Red')">

</form>

</body>

Summary and Notes

In this short tutorial, we've explored the fundamentals for building

responsive, interactive web interfaces using JavaScript and

XMLHttpRequest(). In order to focus on the core concepts, we've ignored

many important details, such as error handling and return codes. Hopefully,

you can add the techniques shown in this article to your web toolbox.

FIFO versus /dev/fanout: Open two browser windows displaying

async.html and write some data into the FIFO; notice that the data

that you write will appear in only one window. This is because the FIFO

passes the data to only one of the readers. In a real application, we

would want to use a fanout device so that the data is written to all

readers. Fanout is described in a previous Linux Gazette

article as well as on the author's home page.

XML: If you are getting only a single piece of data from your

web server, you can use the code in this article. As soon as you ask

for more than one piece of data, you should switch to XML. It does

not need to be difficult.

As an example, let's say that you've modified getfifo.php to use the

caller ID to look up the time and duration of the last time you spoke with

the caller, and you want to return this information along with the

caller ID string. The PHP code to build an XML response for the three

pieces of data might look like this:

<?php

header("Content-Type: text/xml");

print("<?xml version=\"1.0\" ?>\n");

print("<caller_info>\n");

printf("<caller_id>%s</caller_id>\n", "Mary");

printf("<lastcalltime>%s</lastcalltime>\n", "10:24 am");

printf("<lastcalldur>%s</lastcalldur>\n", "12:05");

print("</caller_info>\n");

?>

Instead of using

req_fifo.responseText to get the whole

body of the reply, you would use the following to extract each

of the three fields you want:

callid = req_fifo.responseXML.getElementsByTagName("caller_id");

calltm = req_fifo.responseXML.getElementsByTagName("lastcalltime");

calldur = req_fifo.responseXML.getElementsByTagName("lastcalldur");

callid[0].childNodes[0].nodeValue;

document.getElementById("caller_data").innerHTML=

callid[0].childNodes[0].nodeValue;

document.getElementById("lastcalltime").innerHTML=

calltm[0].childNodes[0].nodeValue;

document.getElementById("lastcalldur").innerHTML=

calldur[0].childNodes[0].nodeValue;

There are simpler and better ways to do this, but the above code

should give you an idea of what to expect.

Further reading: The Apple developer's web site has a

good article on XMLHttpRequest(). You can find it here:

http://developer.apple.com/internet/webcontent/xmlhttpreq.html

You may find the tutorials at the JavaScript Kit web site to be a big

help if you'd like to expand on the knowledge you've gained from this

article. You can find their tutorials here:

http://www.javascriptkit.com/javatutors/

The IBM DeveloperWorks web site has some great articles on AJAX

and XML. We found this one particularly useful:

http://www.ibm.com/developerworks/web/library/wa-ajaxintro1.html

Finally, you might be interested in the low cost answering machine

that's at the heart of this author's appliance:

http://www.linuxtoys.org/answer/answering_machine.html

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/smith.jpg)

Bob is an electronics hobbyist and Linux programmer. He

is one of the authors of "Linux Appliance Design" to be

published by No Starch Press.

Copyright © 2006, Bob Smith. Released under the

Open Publication license

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 123 of Linux Gazette, February 2006

Configuring Apache for Maximum Performance

By Vishnu Ram V

1 Introduction

Apache is an open-source HTTP server implementation. It is the most

popular web server on the Internet; the December 2005 Web Server Survey

conducted by Netcraft [1] shows that

about 70% of the web sites on Internet are using Apache.

Apache server performance can be improved by adding additional

hardware resources such as RAM, faster CPU, etc. But most of the time,

the same result can be achieved by custom configuration of the server.

This article looks into getting maximum performance out of Apache with

the existing hardware resources, specifically on Linux systems. Of course,

it is assumed that there is enough hardware resources - especially

enough RAM that the server isn't swapping frequently. First two sections

look into various Compile-Time and Run-Time configuration options. The

Run-Time section assumes that Apache is compiled with prefork MPM.

HTTP compression and caching is discussed next. Finally, using separate

servers for serving static and dynamic contents is covered. Basic

knowledge of compiling and configuring Apache and Linux are assumed.

2 Compile-Time Configuration Options

2.1 Load only the required modules:

The Apache HTTP Server is a modular program where the administrator can

choose the functions to be included in the server by selecting a set of

modules [2]. The modules can be compiled either

statically as part of the 'httpd' binary, or as Dynamic Shared Objects

(DSOs). DSO modules can either be compiled when the server is built, or

added later via the apxs

utility, which allows compilation at a later date. The

mod_so module must be statically compiled into the Apache core to

enable DSO support.

Run Apache with only the required modules. This reduces the memory

footprint, which improves the server performance. Statically compiling

modules will save RAM that's used for supporting dynamically loaded

modules, but you would have to recompile Apache to add or remove a module.

This is where the DSO mechanism comes handy. Once the mod_so module is

statically compiled, any other module can be added or dropped using the

'LoadModule' command in the 'httpd.conf' file. Of course, you will have to

compile the modules using 'apxs' if they weren't compiled when the server

was built.

2.2 Choose appropriate MPM:

The Apache server ships with a selection of Multi-Processing Modules (MPMs)

which are responsible for binding to network ports on the machine,

accepting requests, and dispatching children to handle the requests [3]. Only one MPM can be loaded into the

server at any time.

Choosing an MPM depends on various factors, such as whether the OS

supports threads, how much memory is available, scalability versus

stability, whether non-thread-safe third-party modules are used, etc.

Linux systems can choose to use a threaded MPM like worker or

a non-threaded MPM like prefork:

The worker MPM uses multiple child processes. It's multi-threaded within

each child, and each thread handles a single connection. Worker is fast

and highly scalable and the memory footprint is comparatively low. It's

well suited for multiple processors. On the other hand, worker is less

tolerant of faulty modules, and a faulty thread can affect all the

threads in a child process.

The prefork MPM uses multiple child processes, each child handles one

connection at a time. Prefork is well suited for single or double CPU

systems, speed is comparable to that of worker, and it's highly tolerant

of faulty modules and crashing children - but the memory usage is high,

and more traffic leads to greater memory usage.

3 Run-Time Configuration Options

3.1 DNS lookup:

The HostnameLookups directive enables DNS lookup so that hostnames can

be logged instead of the IP address. This adds latency to every request

since the DNS lookup has to be completed before the request is

finished. HostnameLookups is Off by default in Apache 1.3 and above.

Leave it Off and use post-processing program such as logresolve

to resolve IP addresses in Apache's access logfiles. Logresolve

ships with Apache.

When using 'Allow from' or 'Deny from' directives, use an IP address

instead of a domain name or a hostname. Otherwise, a double DNS lookup

is performed to make sure that the domain name or the hostname is not

being spoofed.

3.2 AllowOverride:

If AllowOverride is not set to 'None', then Apache will attempt to open

the .htaccess file (as specified by AccessFileName directive) in each

directory that it visits.

For example:

DocumentRoot /var/www/html

<Directory />

AllowOverride all

</Directory>

If a request is made for URI /index.html, then Apache will attempt

to open /.htaccess, /var/.htaccess, /var/www/.htaccess, and

/var/www/html/.htaccess. These additional file system lookups add to

the latency. If .htaccess is required for a particular directory, then

enable it for that directory alone.

3.3 FollowSymLinks and SymLinksIfOwnerMatch:

If FollowSymLinks option is set, then the server will follow symbolic

links in this directory. If SymLinksIfOwnerMatch is set, then the

server will follow symbolic links only if the target file or directory

is owned by the same user as the link.

If SymLinksIfOwnerMatch is set, then Apache will have to issue

additional system calls to verify whether the ownership of the link and

the target file match. Additional system calls are also needed

when FollowSymLinks is NOT set.

For example:

DocumentRoot /var/www/html

<Directory />

Options SymLinksIfOwnerMatch

</Directory>

For a request made for URI /index.html, Apache will perform lstat()

on /var, /var/www, /var/www/html, and /var/www/html/index.html. These

additional system calls will add to the latency. The lstat results are

not cached, so they will occur on every request.

For maximum performance, set FollowSymLinks everywhere and

never set SymLinksIfOwnerMatch. Or else, if SymLinksIfOwnerMatch is

required for a directory, then set it for that directory alone.

3.4 Content Negotiation:

Avoid content negotiation for fast response. If content negotiation is

required for the site, use type-map files rather than Options

MultiViews directive. With MultiViews, Apache has to scan the directory

for files, which adds to the latency.

3.5 MaxClients:

The MaxClients sets the limit on maximum simultaneous requests that can

be supported by the server; no more than this number of child

processes are spawned. It shouldn't be set too low; otherwise, an

ever-increasing number of connections are deferred to the queue and

eventually time-out while the server resources are left unused. Setting

this too high, on the other hand, will cause the server to start swapping

which will cause the response time to degrade drastically. The appropriate

value for MaxClients can be calculated as:

[4]

MaxClients = Total RAM dedicated to the web server / Max child process size

The child process size for serving static file is about 2-3M. For

dynamic content such as PHP, it may be around 15M. The RSS column

in

"ps -ylC

httpd --sort:rss" shows non-swapped physical memory usage by

Apache processes in kiloBytes.

If there are more concurrent users than MaxClients, the requests will

be queued up to a number based on ListenBacklog directive. Increase

ServerLimit to set MaxClients above 256.

3.6 MinSpareServers, MaxSpareServers, and StartServers:

MaxSpareServers and MinSpareServers determine how many child processes

to keep active while waiting for requests. If the MinSpareServers is too low

and a bunch of requests come in, Apache will have to spawn additional child

processes to serve the requests. Creating child processes is relatively

expensive. If the server is busy creating child processes, it won't be able

to serve the client requests immediately. MaxSpareServers shouldn't be set

too high: too many child processes will consume resources unnecessarily.

Tune MinSpareServers and MaxSpareServers so that Apache need

not spawn more than 4 child processes per second (Apache can

spawn a maximum of 32 child processes per second). When more than 4

children are spawned per second, a message will be logged in the

ErrorLog.

The StartServers directive sets the number of child server

processes created on startup. Apache will continue creating child

processes until the MinSpareServers setting is reached. This doesn't have

much effect on performance if the server isn't restarted frequently. If

there are lot of requests and Apache is restarted frequently, set

this to a relatively high value.

3.7 MaxRequestsPerChild:

The MaxRequestsPerChild directive sets the limit on the number of

requests that an individual child server process will handle. After

MaxRequestsPerChild requests, the child process will die. It's set to 0

by default, the child process will never expire. It is appropriate to set

this to a value of few thousands. This can help prevent memory leakage,

since the process dies after serving a certain number of requests. Don't

set this too low, since creating new processes does have overhead.

3.8 KeepAlive and KeepAliveTimeout:

The KeepAlive directive allows multiple requests to be sent over the

same TCP connection. This is particularly useful while serving HTML

pages with lot of images. If KeepAlive is set to Off, then for each

images, a separate TCP connection has to be made. Overhead due to

establishing TCP connection can be eliminated by turning On KeepAlive.

KeepAliveTimeout determines how long to wait for the next request. Set

this to a low value, perhaps between two to five seconds. If it is set

too high, child processed are tied up waiting for the client when they

could be used for serving new clients.

4 HTTP Compression & Caching

HTTP compression is completely specified in HTTP/1.1. The server

uses either the gzip or the deflate encoding method to the response payload

before it is sent to the client. Client then decompresses the payload.

There is no need to install any additional software on the client side

since all major browsers support these methods. Using compression will save

bandwidth and improve response time; studies have found a mean

gain of %75.2 when using compression [5].

HTTP Compression can be enabled in Apache using the mod_deflate

module. Payload is compressed only if the browser requests compression,

otherwise uncompressed content is served. A compression-aware browser

inform the server that it prefer compressed content through the HTTP

request header - "Accept-Encoding: gzip,deflate". Then the

server responds with compressed payload and the response header set to

"Content-Encoding: gzip".

The following example uses telnet to view request and

response headers:

bash-3.00$ telnet www.webperformance.org 80

Trying 24.60.234.27...

Connected to www.webperformance.org (24.60.234.27).

Escape character is '^]'.

HEAD / HTTP/1.1

Host: www.webperformance.org

Accept-Encoding: gzip,deflate

HTTP/1.1 200 OK

Date: Sat, 31 Dec 2005 02:29:22 GMT

Server: Apache/2.0

X-Powered-By: PHP/5.1.1

Cache-Control: max-age=0

Expires: Sat, 31 Dec 2005 02:29:22 GMT

Vary: Accept-Encoding

Content-Encoding: gzip

Content-Length: 20

Content-Type: text/html; charset=ISO-8859-1

In caching, a copy of the data is stored at the client or in a proxy server

so that it need not be retrieved frequently from the server. This will save

bandwidth, decrease load on the server, and reduce latency. Cache control

is done through HTTP headers. In Apache, this can be accomplished through

mod_expires

and

mod_headers

modules. There is also server side caching, in which the most

frequently-accessed content is stored in memory so that it can be served

fast. The module

mod_cache

can be used for server side caching; it is production stable in Apache

version 2.2.

5 Separate server for static and dynamic content

Apache processes serving dynamic content take from 3MB to 20MB of RAM.

The size grows to accommodate the content being served and never decreases

until the process dies. As an example, let's say an Apache process grows to

20MB while serving some dynamic content. After completing the request, it is

free to serve any other request. If a request for an image comes in, then

this 20MB process is serving static content - which could be served just as

well by a 1MB process. As a result, memory is used inefficiently.

Use a tiny Apache (with minimum modules statically compiled) as the

front-end server to serve static contents. Requests for dynamic content

should be forwarded to the heavy-duty Apache (compiled with all required

modules). Using a light front-end server has the advantage that the static

contents are served fast without much memory usage and only the dynamic

contents are passed over to the big server.

Request forwarding can be achieved by using mod_proxy

and mod_rewrite

modules. Suppose there is a lightweight Apache server listening to port

80 and a heavyweight Apache listening on port 8088. Then the

following configuration in the lightweight Apache can be used to

forward all requests (except requests for images) to the heavyweight server:

[9]

ProxyPassReverse / http://%{HTTP_HOST}:8088/

RewriteEngine on

RewriteCond %{REQUEST_URI} !.*\.(gif|png|jpg)$

RewriteRule ^/(.*) http://%{HTTP_HOST}:8088/$1 [P]

All requests, except for images, will be proxied to the backend server.

The response is received by the frontend server and supplied to

the client. As far as client is concerned, all the responses seem

to come from a single server.

6 Conclusion

Configuring Apache for maximum performance is tricky; there are no hard

and fast rules. Much depends on understanding the web server requirements

and experimenting with various available options. Use tools like ab and httperf to

measure the web server performance. Lightweight servers such as tux or thttpd can also be used as

the front-end server. If a database server is used, make sure it is

optimized so that it won't create any bottlenecks. In

case of MySQL, mtop

can be used to monitor slow queries. Performance of PHP scripts

can be improved by using a PHP caching product such as Turck MMCache.

It eliminates overhead due to compiling by caching the PHP scripts in a

compiled state.

Bibliography

1. http://news.netcraft.com/archives/web_server_survey.html

2. http://httpd.apache.org/docs/2.2/dso.html

3. http://httpd.apache.org/docs/2.2/mpm.html

4. http://modperlbook.org/html/ch11_01.html

5. http://www.speedupyoursite.com/18/18-2t.html

6. http://www.xs4all.nl/~thomas/apachecon/PerformanceTuning.html

7. http://www.onlamp.com/pub/a/onlamp/2004/02/05/lamp_tuning.html

8. http://httpd.apache.org/docs/2.2/misc/perf-tuning.html

9. Linux Server Hacks by Rob Flickenger

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/2002/note.png)

I'm an MTech. in Communication Systems from the IIT Madras. I joined Poornam

Info Vision Pvt Ltd. in 2003 and have been working for Poornam since then.