...making Linux just a little more fun!

Mailbag

This month's answers created by:

[ Ben Okopnik, René Pfeiffer, Rick Moen, Thomas Adam ]

...and you, our readers!

Our Mailbag

[OT] Interesting interview

Jimmy O'Regan [joregan at gmail.com]

Wed, 14 Jan 2009 19:53:43 +0000

(Sorry the subject wasn't more descriptive, but Rick's setup objected

to the subject 'Interview with an adware author': 550-Rejected

subject: Monitoring/spyware software or removal tools spam.)

http://philosecurity.org/2009/01/12/interview-with-an-adware-author

"It was funny. It really showed me the power of gradualism. It's hard

to get people to do something bad all in one big jump, but if you can

cut it up into small enough pieces, you can get people to do almost

anything."

[ Thread continues here (5 messages/7.75kB) ]

Life under Windows these days

Ben Okopnik [ben at linuxgazette.net]

Tue, 13 Jan 2009 22:23:22 -0600

Interesting interview with a former adware blackhat.

http://philosecurity.org/2009/01/12/interview-with-an-adware-author

Quote describing adware wars between competitors:

M: [...] I used tinyScheme, which is a BSD licensed, very small, very

fast implementation of Scheme that can be compiled down into about a 20K

executable if you know what you're doing.

Eventually, instead of writing individual executables every time a worm

came out, I would just write some Scheme code, put that up on the

server, and then immediately all sorts of things would go dark. It

amounted to a distributed code war on a 4-10 million-node network.

S: In your professional opinion, how can people avoid adware?

M: Um, run UNIX.

--

* Ben Okopnik * Editor-in-Chief, Linux Gazette * http://LinuxGazette.NET *

[ Thread continues here (3 messages/2.45kB) ]

SugarCRM and badgeware licensing, again

Rick Moen [rick at linuxmafia.com]

Tue, 13 Jan 2009 01:35:16 -0800

By the way, I've just now reminded Bruce Perens of his kind offer, last

February, to let Prof. Moglen know about the latest SugarCRM ploy. I've

stressed that we'd be glad to hear any feedback, if Prof. Moglen has

time. I'll let LG know if/when I hear back.

To correct my below-quoted wording, strictly speaking, SugarCRM's latest

licensing doesn't literally replicate the "_exact same_ badgeware clause"

as in the prior proprietary licence, but it's really close: As written,

the licensing ends up requiring derivative works to display the firm's

trademarked logo or equivalent text (if technology used doesn't support

displaying logos) on _each and every user interface page_ of SugarCRM's

codebase that the derivative uses.

----- Forwarded message from Rick Moen <rick@linuxmafia.com> -----

Date: Mon, 12 Jan 2009 07:52:26 -0800

From: Rick Moen <rick@linuxmafia.com>

To: luv-main@luv.asn.au

Subject: Re: Courier vs Dovecot for IMAP

Quoting Daniel Pittman (daniel@rimspace.net):

> Jason White <jason@jasonjgw.net> writes:

> > Zimbra is distributed under the Yahoo Public Licence, which isn't

> > listed on the OSI Web site.

That's because it's proprietary -- and because Zimbra (now Yahoo)

deliberately avoided submitting it for OSI certification, knowing it

would be denied.

> It is basically the MPL, and reasonably free, from my research.

It's a "badgeware" proprietary licence that deliberately impairs

third-party commercial usage through mandatory advertising notices

forced on third parties at runtime for all derivative works, one of a

series of such licences produced by Web 2.0 / ASP / Software as a Service

companies that carefully avoid seeking OSI licence certification,

because they know they can't get it. Non-free.

In YPL's case, the clause that makes it non-free is 3.2:

3.2 - In any copy of the Software or in any Modification you create,

You must retain and reproduce, any and all copyright, patent, trademark,

and attribution notices that are included in the Software in the same

form as they appear in the Software. This includes the preservation of

attribution notices in the form of trademarks or logos that exist within

a user interface of the Software.

> OTOH, not all components of Zimbra are covered under it, so the whole

> thing is kind of non-free, depending on your tastes.

>

> I believe the entire OS edition is freely available, though.

This is a very typical marketing tactic for badgeware: There is a

"public licence" (badgeware) version to entice new users, that's left

buggy, feature-shy, and poorly documented. If those users then try to

submit bugs, or request fixes, or inquire about customisations, the

sales staff then launches an all-out effort to upsell them to the

"commercial version". (***COUGH*** SugarCRM ***COUGH***).

----- End forwarded message -----

----- Forwarded message from Rick Moen <rick@linuxmafia.com> -----

[ ... ]

[ Thread continues here (1 message/16.42kB) ]

rkhunter problem after upgrading to 1.3.4

J.Bakshi [j.bakshi at icmail.net]

Tue, 20 Jan 2009 20:24:28 +0530

Hello list,

Has one any faced the problem with latest rootkit hunter ( 1.3.4 ) ? I have

upgraded the rootkit hunter to 1.3.4 and after that it reports a huge

warning. I don't know if the Warnings really indicate any hole in my system

or it is just the rootkit hunter it self which creates false alarm. Below is

the scan report. Any idea ?

[ ... ]

[ Thread continues here (2 messages/6.33kB) ]

USB test module

Ben Okopnik [ben at linuxgazette.net]

Tue, 13 Jan 2009 15:13:46 -0600

I'm trying to do something rather abstruse and complex with a weird mix

of software, hardware, and crazy hackery (too long to explain and it

would be boring to most people if I did), but - I need a "magic bullet"

and I'm hoping that somebody here can point me in the right direction,

or maybe toss a bit of code at me. Here it goes: I need a module that

would create a serial-USB device (/dev/ttyUSB9 by preference) and let me

pipe data into it without actually plugging in any hardware.

Is this even possible? Pretty much all of my programming experience is

in the userspace stuff, and beating on bare metal like that is something

I've always considered black magic, so I have absolutely no idea.

--

* Ben Okopnik * Editor-in-Chief, Linux Gazette * http://LinuxGazette.NET *

[ Thread continues here (10 messages/16.38kB) ]

Proxy question

Jacob Neal [submacrolize at gmail.com]

Mon, 26 Jan 2009 17:22:08 -0500

Hey...I want to access restricted sites from behind my schools firewall.

Both the computers there and my home computer use linux. I might not be able

to install software on the school computer. What can I do?

[ Thread continues here (7 messages/5.61kB) ]

RETURNED MAIL: DATA FORMAT ERROR

[owner-secretary at dcs.kcl.ac.uk]

Tue, 13 Jan 2009 15:50:30 +0000

This address secretary@dcs.kcl.ac.uk is not in use. Please see the

website http://www.dcs.kcl.ac.uk/ for contact details of the

Department.

[ Thread continues here (2 messages/1.79kB) ]

how to play divx with vlc ?

J.Bakshi [j.bakshi at icmail.net]

Wed, 14 Jan 2009 23:21:56 +0530

Dear all,

Is not vlc has divx support ?

I am runnig debian lenny. The installed vlc is 0.8.6.h-4+lenny2

But when I open an .avi file having divx format the progress bar of vlc only

proceds with out any sound or video. Has any one faced the same problem ?

Is there any fix for this problem ?

One more thing. The sound output for mp3 files are very very low in vlc.

Thanks

[ Thread continues here (3 messages/2.19kB) ]

Virtual Hard Disks

Michael SanAngelo [msanangelo at gmail.com]

Wed, 14 Jan 2009 13:05:20 -0600

Hi, I was wondering what are the possibilities of creating and using virtual

disks for. I understand I can use dd to create it then mkfs.ext3 or

something like it to format the disk. What purpose could they be used for

besides serving as a foundation for creating a live cd?

I want to do this from the cli so no gui.

Thanks,

Michael S.

[ Thread continues here (5 messages/7.98kB) ]

Command-line DVD ripping

Deividson Okopnik [deivid.okop at gmail.com]

Mon, 5 Jan 2009 15:24:21 -0300

Heya

Anyone knows of a good command-line util i can use to rip a dvd to divx?

[ Thread continues here (3 messages/1.19kB) ]

Talkback: Discuss this article with The Answer Gang

Published in Issue 159 of Linux Gazette, February 2009

Talkback

Talkback:158/lg_tips.html

[ In reference to "2-Cent Tips" in LG#158 ]

shirish [shirishag75 at gmail.com]

Sat, 3 Jan 2009 11:55:44 +0530

Hi all,

I read the whole thread at

http://linuxgazette.net/158/misc/lg/2_cent_tip__audio_ping.html but

would like to know how to do two things.

a. How to turn on/make audio beeps louder

b. How do you associate the .wav files so that instead of the audio beeps

I get the .wav file.

Thanx in advance.

--

Regards,

Shirish Agarwal

This email is licensed under http://creativecommons.org/licenses/by-nc/3.0/

http://flossexperiences.wordpress.com

065C 6D79 A68C E7EA 52B3 8D70 950D 53FB 729A 8B17

[ Thread continues here (2 messages/2.77kB) ]

Talkback:158/janert.html

[ In reference to "Gnuplot in Action" in LG#158 ]

Ville Herva [v at iki.fi]

Sun, 11 Jan 2009 17:24:08 +0200

Jesus, that must have been an Ethiopian marathon race - alsmost nobody finishing

after 3:15. 3:15 is not easy - try it if you like. I could understand the

big bunch of people arriving around 2:30 if this was a big country

championship, but if there are amateurs present the big bunch would

definetely arrive between 3:15 and 4 - even after that.

-- v --

v@iki.fi

[ Thread continues here (3 messages/2.93kB) ]

Talkback: Discuss this article with The Answer Gang

Published in Issue 159 of Linux Gazette, February 2009

2-Cent Tips

Apertium 2 cent tip: how to add analysis and generation of unknown words, and *why you shouldn't*

Jimmy O'Regan [joregan at gmail.com]

Thu, 1 Jan 2009 15:27:30 +0000

In my article about Apertium, I promised to follow it up with another

article of a more 'HOWTO' nature. And I've been writing it. And

constantly rewriting it, every time somebody asks how to do something

that I think is moronic, to explain why they shouldn't do that... and

I need to accept that people will always want to do stupid things, and

I should just write a HOWTO.

Anyway... recently, someone asked how to implement generation of

unknown words. There are only two reasons I can think of, why someone

would want this: either they have words in the bilingual dictionary

that they don't have in the monolingual dictionary, or they want to

use it in conjunction with morphological guessing.

In general, the usual method used in Apertium's translators is, if we

don't know the word, we don't try to translate it -- we're honest

about it, essentially. Apertium has an option to mark unknown words,

which we generally recommend that people use. It doesn't cover

'hidden' unknown words, where the same word an be two different parts

of speech--we're looking into how to attempt that. One result of this,

is that before a release, we specifically remove some words from the

monolingual dictionary, if we can't add a translation.

Anyway, in the first case, we generally write scripts to automate

adding those words to the bidix. One plus of this is that it can be

manually checked afterwards, and fixed. Another is that, by adding the

word to the monolingual dictionary, we can also analyse it: we

generally try to make bilingual translators, but sometimes we can only

make a single direction translator--but we still have the option of

adding the other direction later. And, as our translators are open

source, it increases the amount of freely available linguistic data to

do so, so it's a win all round.

The latter case, of also using a mophological guesser, is one source

of some of the worst translations out there. For example, at the

moment, I'm translating a short story by Adam Mickiewicz, which

contains the phrase 'tu i owdzie', which is either a misspelling of

'tu i ówdzie' ('here and there') or an old form, or typesetting

error[1], but in any case, the word 'owdzie' does not exist in the

modern Polish language.

Translatica, the leading Polish-English translator, gave: "here and he

is owdzying"

Now, if I knew nothing of Polish, that would send me scrambling to the

English dictionary, to search for the non-existant verb 'to owdzy'.

(Google gave: "here said". SMT is a great idea, in theory, but in

practice[2] has the potential to give translations that bear no

resemblance to the original meaning of the source text. Google's own

method of 'augmenting' SMT by extracting correlating phrase pairs

based on a pivot language also leads to extra ambiguities[3])

Anyway. The tip, for anyone who still wants to try it

Apetium's dictionaries can have a limited subset of regular

expressions; these can be used by someone who wishes to have both

analysis and generation of unknown words. The <re> tag can be placed

before the <par> tag, so the entry:

[ ... ]

[ Thread continues here (4 messages/13.08kB) ]

2-cent Tip - Stringizing a C statement

Oscar Laycock [oscar_laycock at yahoo.co.uk]

Mon, 5 Jan 2009 14:06:12 +0000 (GMT)

I recently discovered you could "stringize" a whole C++ or C statement with the pre-processor. For example:

#define TRACE(s) cerr << #s << endl; s

or:

#define TRACE(s) printf("%s\n", #s); s

....

TRACE(*p = '\0');

p--;

(I found this in "Thinking in C++, 2nd ed. Volume 1" by Bruce Eckel,

available for free at http://www.mindview.net. By the way, it seems a good

introduction to C++ for C programmers with lots of useful exercises.

There is also a free, but slightly old, version of the official Qt book

(the C++ framework used in KDE), at

http://www.qtrac.eu/C++-GUI-Programming-with-Qt-4-1st-ed.zip. It is a

bit difficult for a C++ beginner, and somewhat incomplete without the

accompanying CD, but rewarding none the less.)

Bruce Eckel adds: "of course this kind of thing can cause problems,

especially in one-line for loops:

for(int i = 0; i < 100; i++)

TRACE(f(i));

Because there are actually two statements in the TRACE( ) macro, the

one-line for loop executes only the first one. The solution is to

replace the semicolon with a comma in the macro."

However, when I try this with a declaration. I get a compiler error:

TRACE(char c = *p);

s.cpp:17: error: expected primary-expression before 'char'

s.cpp:17: error: expected `;' before 'char'

I'm not sure exactly why!?

[ Thread continues here (3 messages/4.81kB) ]

Talkback: Discuss this article with The Answer Gang

Published in Issue 159 of Linux Gazette, February 2009

News Bytes

By Deividson Luiz Okopnik and Howard Dyckoff

|

Contents:

|

Selected and Edited by Deividson Okopnik

Please submit your News Bytes items in

plain text; other formats may be rejected without reading.

[You have been warned!] A one- or two-paragraph summary plus a URL has a

much higher chance of being published than an entire press release. Submit

items to bytes@linuxgazette.net.

News in General

Linux Foundation makes Kernel Developer Ted Ts'o new CTO

Linux Foundation makes Kernel Developer Ted Ts'o new CTO

The Linux Foundation (LF) named Linux kernel developer Theodore Ts'o

to the position of Chief Technology Officer at the Foundation. Ts'o is

currently a Linux Foundation fellow, a position he has been in since

December 2007. He is a highly regarded member of the Linux and open

source community and is known as the first North American kernel

developer. Other current and past LF fellows include Steve Hemminger,

Andrew Morton, Linus Torvalds and Andrew Tridgell.

Ts'o will be replacing Markus Rex, who was on loan to the Foundation

from Novell. Rex will return to Novell as the acting general manager and

senior vice president of Novell's OPS business unit.

As CTO, Ts'o will lead all technical initiatives for the Linux

Foundation, including oversight of the Linux Standard Base (LSB) and

other workgroups such as Open Printing. He will also be the primary

technical interface to LF members and the LF's Technical Advisory

Board, which represents the kernel community.

"I continue to believe in power of mass collaboration and the work that

can be done by a community of developers, users and industry members, I'm

looking forward to translating that power into concrete milestones for the

LSB specifically, and for Linux overall, in the year ahead," says Ts'o.

Since 2001, Ts'o has worked as a senior technical staff member at IBM

where he most recently led a worldwide team to create an

enterprise-level real-time Linux solution. Ts'o was awarded the 2006

Award for the Advancement of Free Software by the Free Software

Foundation (FSF).

Ts'o is also a Linux kernel developer, a role in which he serves as

ext4 file system maintainer, as well as the primary author and

maintainer of e2fsprogs, the userspace utilities for the ext2, ext3,

and ext4 file systems. He is the founder and chair of the annual Linux

Kernel Developers' Summit and regularly presents tutorials on Linux and

other open source software. Ts'o was project leader for Kerberos, a

network authentication system. He was also a member of the Security Area

Directorate for the Internet Engineering Task Force where he chaired

the IP Security (IPSEC) Working Group and was a founding board member

of the Free Standards Group (FSG).

2009 Linux Collaboration Summit Call for Participation

2009 Linux Collaboration Summit Call for Participation

The Linux Foundation has opened registration and announced a call for

participation for the 3rd Annual Collaboration Summit which will take

place April 8-10, 2009 in San Francisco.

Sponsored by Intel in 2009, the Collaboration Summit is an exclusive,

invitation-only gathering of the brightest minds in Linux, including

core kernel developers, distribution maintainers, ISVs, end users,

system vendors, and other community organizations. It is the only

conference designed to accelerate collaboration and encourage

solutions by bringing together a true cross-section of leaders to meet

face-to-face to tackle and solve the most pressing issues facing Linux

today.

The 2009 Collaboration Summit will include:

- the Moblin Development Summit

- High Performance Computing

- Systems Management

- the Linux ISV Summit

LF workgroups such as Open Printing and the LSB will also hold

meetings.

The Collaboration Summit will be co-located with the CELF Embedded Linux

Conference and the Linux Storage and Filesystem Workshop. The winner of the

"We're Linux" video contest (see next item) will also be revealed at the

Summit, where the winning video and honorable mentions will be screened for

the event's attendees.

For more information on the Linux Foundation Collaboration Summit,

please visit:

http://events.linuxfoundation.org/events/collaboration-summit.

To request an invitation, visit:

http://events.linuxfoundation.org/component/registrationpro/?func=details&did=2.

For the first time, the Linux Foundation is inviting all members of the

Linux and open source software communities to submit a proposal for its

Annual Collaboration Summit, its cornerstone event. CFP submissions are due

February 15, 2009. To submit a proposal, visit: http://events.linuxfoundation.org/events/collaboration-summit.

Linux Foundation hosting "We're Linux" Video Contest

Linux Foundation hosting "We're Linux" Video Contest

In January, the Linux Foundation (LF) launched of its grassroots "We're

Linux" video contest. The campaign seeks to find the best user-generated

videos that demonstrate what Linux means to those who use it and inspire

others to try it. The contest is open to everyone and runs through midnight

on March 15, 2009. The winner will be revealed at the Linux Foundation's

Collaboration Summit on April 8, 2009, in San Francisco. The winner will be

awarded a trip to Tokyo, Japan to participate in the Linux Foundation's

Japanese Linux Symposium.

In response to early and resounding community input, the campaign has

been renamed from the original "I'm Linux" to the "We're Linux" video

contest. This name better expresses how Linux is represented by more

than any one person or company.

To become a member of the Linux Foundation's Video forum, view early

submissions, or submit your own video for the "We're Linux"

contest, visit http://video.linuxfoundation.org.

AMD plans dual-core Neo laptop chip

AMD plans dual-core Neo laptop chip

At CES, AMD announced its platform for ultra-thin notebooks at an

affordable price. Previously codenamed "Yukon," the platform is based on

the new AMD Athlon Neo processor, ATI Radeon integrated graphics or Radeon

HD 3410 discrete graphics. The Neo platform debuts within the HP Pavilion

dv2 Entertainment Notebook PC ultra-thin notebook, which is less than one

inch thick and weighs in under four pounds. The HP Pavilion dv2 has a

12.1-inch diagonal LED display, near-full-size keyboard, and an optional

external optical disc drive with Blu-ray capability.

In mid-January AMD also announced a dual-core Athlon Neo processor,

code-named Conesus, which is planned by for mid-2009. The dual-core

Neo and supporting chips provide for more operational capability than

the current crop of netbooks powered by Intel Atom processors.

Grid.org Open Source HPC Community Hits Visitor Milestone

Grid.org Open Source HPC Community Hits Visitor Milestone

Grid.org, the on-line community for open source cluster and grid

software, announced in January that the site garnered over 100,000

unique visitors in 2008, with the highest traffic generating from the

UniCluster, Amazon EC2 and HPC discussion groups.

Launched in November 2007, Grid.org is an open source community for

cluster and grid users, developers, and administrators. It is home to

the UniCluster open source project as well as other open source

projects. For more information, go to http://www.grid.org.

Recent focus on cloud computing and the announcement of UniCloud,

UniCluster's extension into the Amazon EC2 cloud, have been popular

topics resulting in an increase in traffic to the site.

"Having a vibrant community of users and thought leaders is the

cornerstone of any successful open source software project," notes

Gary Tyreman, vice president and general manager of HPC for Univa UD.

"From our groundbreaking work in Amazon EC2 to the UniCloud offering

for Virtual HPC management, Univa UD is committed to initiating and

supporting the future of HPC technology, community and open source."

Conferences and Events

- 2009 SCADA and Process Control Summit

-

February 2 - 3, Disney Dolphin, Lake Buena Vista, FL

http://www.sans.org/scada09_summit.

- JBoss Virtual Experience on-line Conference

-

February 11, 8:30 am - 6:00 pm EST

http://www-2.virtualevents365.com/jboss_experience/agenda.php.

- Black Hat DC 2009

-

February 16 - 19, Hyatt, Arlington, VA

http://blackhat.com/html/bh-dc-09/bh-dc-09-main.html.

- RubyRX Conference

-

February 19 - 21, 2009, Reston, NC

http://www.nfjsone.com/conference/raleigh/2008/02/index.html.

- SCALE 7x 2009

-

February 20 - 22, Westin LAX Hotel, Los Angeles, CA

http://www.socallinuxexpo.org.

- OpenSolaris Storage Summit 2009 - Free

-

February 23, Grand Hyatt, San Francisco, CA

http://wikis.sun.com/display/OpenSolaris/OpenSolaris+Storage+Summit+200902.

- Gartner Mobile & Wireless Summit 2009

-

February 23 - 25, Chicago, IL

http://gartner.com/us/wireless.

- 7th USENIX Conference on File and Storage Technologies (FAST '09)

-

February 24-27, San Francisco, CA

Learn from Leaders in the Storage Industry at the 7th USENIX Conference on

File and Storage Technologies (FAST '09)

Join us in San Francisco, CA, February 24-27, 2009, for the 7th USENIX

Conference on File and Storage Technologies. On Tuesday, February 24, FAST

'09 offers ground-breaking file and storage tutorials by industry leaders

such as Brent Welch, Marc Unangst, Simson Garfinkel, and more. This year's

innovative 3-day technical program includes 23 technical papers, as well as

a Keynote Address, Work-in-Progress Reports (WiPs), and a Poster Session.

Don't miss out on opportunities for peer interaction on the topics that

mean the most to you.

Register by February 9 and save up to $200!

http://www.usenix.org/fast09/lg

- Next-Gen Broadband Strategies

-

February 24, Cable Center, Denver, CO

http://www.lightreading.com/live/event_information.asp?event_id=29032.

- Sun xVM Virtualization Roadshow

-

February 25, various US cities

http://www.xvmgetmoving.com/register.php.

- CSO Perspectives - 2009

-

March 1 - 3, Hilton, Clearwater Beach, FL

http://www.csoonline.com/csoperspectives09.

- eComm 2009

-

March 3 - 5, SF Airport Hyatt, San Mateo, CA

http://ecommconf.com/.

- DrupalCons 2009

-

March 4 - 7, Washington, DC

http://dc2009.drupalcon.org/.

- O'Reilly Emerging Technology Conference

-

March 9 - 12, Fairmont Hotel, San Jose, CA

http://conferences.oreilly.com/etech?CMP=EMC-conf_et09_int&ATT=EM5-NL.

- SD West 2009

-

March 9 - 13, Santa Clara, CA

http://www.sdexpo.com/.

- ManageFusion 09

-

March 10 - 12, MGM Grand Hotel, Las Vegas, NV

http://www.managefusion.com/agenda.aspx.

- VEE 2009 Conference on Virtual Execution Environments

-

March 11 - 13, Crowne Plaza, Washington, DC

http://www.cs.purdue.edu/VEE09/Home.html.

- Orlando Scrum Gathering 2009

-

March 16 - 18, Gaylord Resort, Orlando, FL

http://www.scrumgathering.org.

- Forrester's IT Infrastructure & Operations Forum

-

March 18 - 19, San Diego, CA

http://www.forrester.com/events/eventdetail?eventID=2372.

- EclipseCon 2009

-

March 23 - 26, Santa Clara, CA

http://www.eclipsecon.org/2009/home.

- ApacheCon Europe 2009

-

March 23 - 27, Amsterdam, Nederlands

http://eu.apachecon.com/c/aceu2009.

- USENIX HotPar '09 Workshop on Hot Topics in Parallelism

-

March 30 - 31, Claremont Resort, Berkeley, CA

http://usenix.org/events/hotpar09/.

- International Virtualization and Cloud Computing Conferences

-

March 30 - April 1, Roosevelt Hotel, New York, NY

http://www.virtualizationconference.com/

http://cloudcomputingexpo.com/.

- ESC Silicon Valley 2009 / Embedded Systems

-

March 30 - April 3, San Jose, CA

http://esc-sv09.techinsightsevents.com/.

- Linux Collaboration Summit 2009

-

April 8 - 10, San Francisco, CA

http://events.linuxfoundation.org/events/collaboration-summit.

- RSAConference 2009

-

April 20 - 24, San Francisco, CA

http://www.rsaconference.com/2009/US/Home.aspx.

- USENIX/ACM LEET '09 & NSDI '09

-

The 6th USENIX Symposium on Networked Systems Design & Implementation

(USENIX NSDI '09) will take place April 22–24, 2009, in Boston, MA.

Please join us at The Boston Park Plaza Hotel & Towers for this symposium

covering the most innovative networked systems research, including 32

high-quality papers in areas including trust and privacy, storage, and

content distribution; and a poster session. Don't miss the opportunity to

gather with researchers from across the networking and systems community to

foster cross-disciplinary approaches and address shared research

challenges.

Register by March 30, 2009 to save!

http://www.usenix.org/nsdi09/lg

- STAREAST - Software Testing, Analysis & Review

-

May 4 - 8, Rosen Hotel, Orlando, FL

http://www.sqe.com/go?SE09home.

Distro News

FreeBSD 7.1 Released

FreeBSD 7.1 Released

FreeBSD Release Engineering Team has announced FreeBSD 7.1-RELEASE as

the second release from the 7-STABLE branch. It improves on the

functionality of FreeBSD 7.0 and introduces several new features.

Some of the highlights in 7.1:

- The ULE scheduler is now the default in GENERIC kernels for amd64 and i386 architectures;

- support for using DTrace inside the kernel has been imported from OpenSolaris;

- a new and much-improved NFS Lock Manager (NLM) client;

- boot loader changes allow, among other things, booting from USB devices and booting from

GPT-labeled devices;

- the cpuset(2) system call and cpuset(1) command

have been added, providing an API for thread to CPU binding and CPU

resource grouping and assignment;

- KDE was updated to 3.5.10,

- GNOME was updated to 2.22.3;

- DVD-sized media is now supported for the amd64 and

i386 architectures.

FreeBSD 7.1 is available here: http://www.freebsd.org/where.html.

Ubuntu 8.04.02 Maintenance, 9.04 Alpha 3 releases out

Ubuntu 8.04.02 Maintenance, 9.04 Alpha 3 releases out

Ubuntu 8.04.2 LTS, the second maintenance update to Ubuntu's 8.04 LTS

release, is now available. This release includes updated server,

desktop, and alternate installation CDs for the i386 and amd64

architectures.

In all, over 200 updates have been integrated, and updated

installation media has been provided so that fewer updates will need

to be downloaded after installation. These include security updates

and corrections for other high-impact bugs, with a focus on

maintaining compatibility with Ubuntu 8.04 LTS.

This is the second maintenance release of Ubuntu 8.04 LTS, which will

be supported with maintenance updates and security fixes until April

2011 on desktops and April 2013 on servers.

To get Ubuntu 8.04.2 LTS, visit:

http://www.ubuntu.com/getubuntu/download

The release notes, which document caveats and workarounds for known

issues, are available at:

http://www.ubuntu.com/getubuntu/releasenotes/804

Also, a complete list of post-release updates can also be found at:

https://wiki.ubuntu.com/HardyReleaseNotes/ChangeSummary/8.04.2

In January, the Ubuntu community announced the availability of the

third alpha release of Ubuntu 9.04 - "Jaunty Jackalope". Read the

release announcement and release notes at:

https://lists.ubuntu.com/archives/ubuntu-devel-announce/2009-January/0

00524.html.

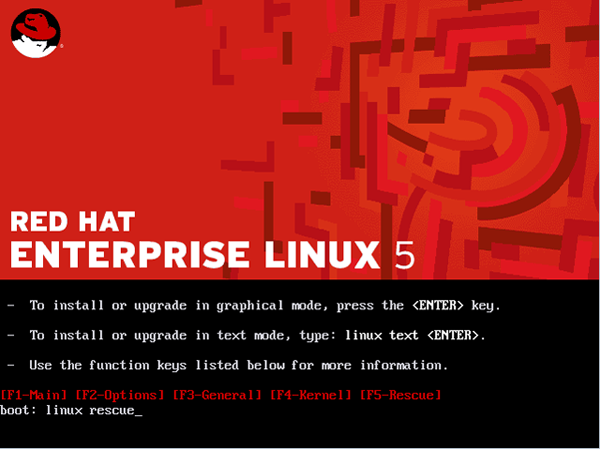

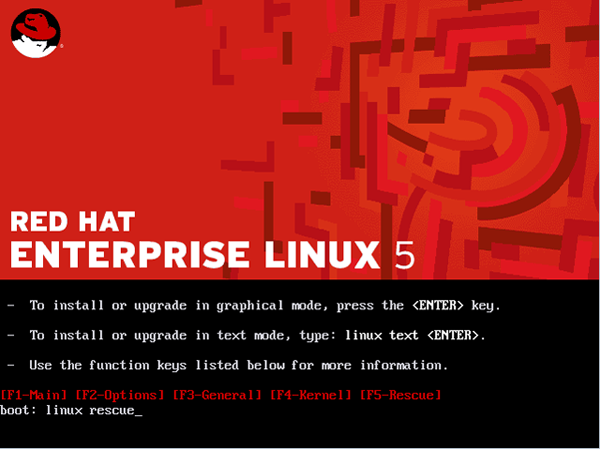

Red Hat Enterprise Linux 5.3 Now Available

Red Hat Enterprise Linux 5.3 Now Available

Red Hat Enterprise Linux 5.3 was released in January. In this third

update to Red Hat Enterprise Linux 5, customers receive a wide range

of enhancements, including increased virtualization scalability,

expanded hardware platform support and incorporation of OpenJDK Java

technologies. Customers with a Red Hat Enterprise Linux subscription

will receive the Red Hat Enterprise Linux 5.3 update from Red Hat

Network.

The primary new features of Red Hat Enterprise Linux 5.3 include:

- Increased scalability of virtualized x86-64 environments: This

includes virtual server support for up to 32 virtual CPUs and 80GB

of memory. Physical server limits have also been expanded to match

the size of the latest hardware systems, with up to 126 CPUs and

1TB main memory. New features, such as support for Hugepage memory

and Intel Extended Page Tables (EPT), also improve the performance

of virtual servers.

- Support for Intel Core i7 (Nehalem) quad-core processors

- Inclusion of OpenJDK: The OpenJDK in Red Hat Enterprise Linux

5.3 is compatible with all applications written for Java SE 6 and

previous versions. OpenJDK is fully supported directly by Red Hat.

Red Hat Enterprise Linux 5.3 also includes enhancements spanning many

other components; Release Notes document over 150 additions and updates.

Solaris Release adds performance and custom storage apps

Solaris Release adds performance and custom storage apps

OpenSolaris release 2008-11 went GA (General Availability release) in

early December and also ended the previous support limitation of 18 months.

This latest version has improved performance and new tools for building

custom storage applications. Sun also released Java 6.update10 with JavaFX

extensions for building interactive applications.

For the desktop, OpenSolaris now includes includes Time Slider, an easy

to use graphical interface that brings ZFS functionality such as instant

snapshots and improved wireless configuration support to all users. To

encourage developers to work on Solaris as a development platform, it now

comes with D-trace probes that run in Firefox.

For developers seeking a pre-installed OpenSolaris notebook, Sun and

Toshiba announced a strategic relationship to deliver OpenSolaris on

Toshiba laptops in 2009. Expect additional announcements in early 2009

about model specifics, pricing, and availability.

Charlie Boyle, Director of OpenSolaris Marketing, spoke with LG just

after the announcement and explained that ZFS and tools like D-Light

(based on D-Trace feature in Solaris) allow seeing what applications

are doing in real time and allow developers to rapidly debug and

optimize their applications. "OpenSolaris is optimized for

performance on the latest systems. Users deploying OpenSolaris will

get the most out of their systems with advances in scalability, power

management, and virtualization."

Regarding the extension of Solaris support beyond 18 months, Boyle

explained, "Customers can get support for as long as they have a

contract on their system. We have removed the artificial 18 month

window... we will take calls on whatever version a customer is running

and get them the best fix for their situation."

In addition to performance gains, other enhancements include:

- a new Automated Installer application, allowing users to decide which

packages to include within the installation Web service;

- the Distro Constructor, enabling users to create their own custom image for

deployment across their systems;

- and a new storage feature, COMSTAR Storage Framework, that allows

developers to create and customize their own open storage server with

OpenSolaris.

Software and Product News

Bridge Education now offering Ubuntu Authorized courses

Bridge Education now offering Ubuntu Authorized courses

Bridge Education (BE) has been selected as the first Ubuntu Authorized

Training Partner in the US. Beginning in February 2009, BE will begin

delivering Ubuntu Authorized courses in select cities nationwide. To

find a class nearest you, please contact BE at 866.322.3262 or visit BE

on the web at http://bridgeme.com.

Eclipse PHP Dev Tools (PDT) 2.0 Released

Eclipse PHP Dev Tools (PDT) 2.0 Released

Fully compliant with Eclipse standards, the new 2.0 release of the PHP

Development Tools (PDT) enables developers to leverage a wide variety

of Eclipse projects, such as Web Tools Project (WTP) and Dynamic

Language Toolkit (DLTK), for faster and easier PHP development. PDT is

an open source development tool that provides the code editing

capabilities needed to get started developing PHP applications.

Version 2.0 was available in January.

To support the object-oriented features of PHP, PDT 2.0 now includes:

- Type Hierarchy view that navigates object-oriented PHP code

faster and more easily.

- Type and method navigation that allows for easy searching of PHP

code based on type information.

- Override indicators that visually tag PHP methods that have been

overridden.

More info is available here:

http://www.eclipse.org/projects/project_summary.php?projectid=tools.pdt

The Java Source Helper plugin was also released in January. Java

Source Helper shows a block of code related to a closing brace/bracket

if it is currently out of visible range in the editor. The Eclipse

Java editor will check to see if the related starting code bracket is

out of visible range and, if it is, it will float a window that shows

the code related to that starting block. This is useful in deeply

nested code. The feature is similar to one in the IntelliJ IDEA IDE.

Cisco's Aironet 1140 Innovates 80.11n Access Points

Cisco's Aironet 1140 Innovates 80.11n Access Points

Cisco is now offering an enterprise-class next-generation wireless

access point that combines full 802.11n performance with

cost-effectiveness. The new Cisco Aironet 1140 Series Access Point

supports the Wi-Fi Certified 802.11n Draft 2.0 access point standard

and is designed for high-quality voice, video, and rich media across

wireless networks.

Cisco delivered the first enterprise-class Wi-Fi Certified 802.11n

Draft 2.0 platform in 2007. The Aironet 1140 offers full 802.11n

performance and security while using standard Power over Ethernet

(PoE). The new access point introduces Cisco M-Drive Technology that

enhances 802.11n performance. The Aironet 1140 is the only dual-radio

platform that combines full 802.11n Draft 2.0 performance (up to nine

times the throughput of existing 802.11a/g wireless networks) and

built-in security features using standard 802.3af Power over Ethernet.

ClientLink, a feature of Cisco M-Drive Technology, helps extend the

useful life of existing 802.11a/g devices with the use of beam forming

to improve the throughput for existing 802.11a/g devices and reduce

wireless coverage holes for legacy devices.

Miercom, an independent testing and analysis lab, tested ClientLink

and showed an increase of up to 65 percent in throughput for existing

802.11a/g devices connecting to a Cisco 802.11n network. Unlike other

solutions that do not offer performance improvements for legacy

devices, ClientLink delivers airtime connectivity fairness for both

802.11n and 802.11a/g devices. For the complete Miercom testing

methodology and results, download the ClientLink report at:

http://www.cisco.com/go/802.11n/

Learn more about the Cisco Aironet 1140 Series Access Point and Cisco

M-Drive Technology in a video with Chris Kozup, Cisco's senior manager

of mobility solutions.

More information at http://blogs.cisco.com/wireless/comments/cisco_taking_80211n_mainstream_with_aironet_1140.

XAware Releases XAware 5.2

XAware Releases XAware 5.2

XAware has released XAware 5.2, an open source data integration

solution for creating and managing composite data services. Working

with its user community, XAware has included upgrades that make it

easier to design and deliver data services for Service-Oriented

Architecture (SOA), Rich Internet Applications (RIA), and Software as a

Service (SaaS) applications.

The most notable addition to XAware 5.2 is the data-first design

feature. Leveraging two important aspects of the Eclipse Data Tools

Project, Connection Profiles and Data Source Explorer, XAware users

can now create data services by starting with data sources. This

option, often known as bottom-up design, is an ideal fit for data-oriented

developers and architects, especially those who need to combine data from

multiple sources. XAware also gives developers the option of starting the

design process with XML Schemas, an approach known as top-down design.

XAware has also introduced a new service design wizard that enables

new users to quickly build and test services with relational data

sources. Additional enhancements include a new outline view, improved

search functionality, greater run-time query control and updated

support.

XAware 5.2 is available for free use under the GPLv2 license and via a

commercial license. Services and support subscriptions are also

available for purchase from XAware, Inc.

Organizations are encouraged to take advantage of XAware's QuickStart

program, which includes training, consulting services, and an initial

period of active support from the XAware team. Starting at just $750,

QuickStart helps companies quickly start building composite data

services for SOA, RIA and SaaS projects.

For more information and to download XAware 5.2, please visit:

www.xaware.com.

Jaspersoft updates its BI Suite Community Edition

Jaspersoft updates its BI Suite Community Edition

In December, Jaspersoft upgraded its Business Intelligence Suite v3 in

both the Community and Professional Editions. The new release includes

advanced charting and visualization capabilities that supplement the

dynamic dashboards and interactive Web 2.0 interfaces introduced

earlier.

Specific features include new built-in chart types, the ability to

create and apply chart themes to customize the detailed appearance of

charts, and easy integration with third-party visualization engines.

Developers can use the new chart theme capability available in

Jaspersoft v3.1 to change the overall appearance of built-in charts

without having to write chart customizers or use an extensive set of

chart properties. Other features available in Jaspersoft v3.1 include

the recently announced certification for Sun's GlassFish application

server, and Section 508 compliance for U.S. government agencies.

Additional enhancements now available in Jaspersoft v3.1 include:

- professional edition localization packs now included in all

geographies at no additional charge, including English, French,

German, Spanish and Japanese

- data source support for calculated fields in the ad hoc query

metadata layer using the Jaspersoft expression language

- the latest version of the iReport graphical report design tool,

now running on the NetBeans platform

- portal support now available in the Community Edition, including

the JSR-168 compliant Jaspersoft portlet

- improved support for "Domain" metadata-driven ad hoc query from

the iReport advanced report design tool

Jaspersoft's BI Suite delivers on the promise of "Business

Intelligence for Everyone" by using Web 2.0 technologies coupled with

advanced metadata functionality. It includes an interactive Web 2.0

interface based on an AJAX framework, dynamic HTML, and other

technologies that deliver the ability to mash-up business intelligence

features to provide a seamless, cross-application, browser-based

experience. Jaspersoft makes it easy for anyone to build and update

dashboards in real-time, drag and drop information from multiple

sources, and build queries and reports with the click of a mouse.

Netbook Features Bootable OS on USB

Netbook Features Bootable OS on USB

EMTEC has announced its Gdium netbook computer which boasts a compact

size and light weight with 512 MB of RAM, a 10-inch screen size and a

full keyboard.

Among the open source applications included with Gdium are:

FireFox browser, Thunderbird e-mail client, Instant Messaging, VoIP,

Blog editor, audio/video players, security utilities, as well as the

Open Office suite of word processing, spreadsheets, and

presentations.

What makes Gdium unique is the G-Key, an 8 or 16 GB bootable USB key

on which the Mandriva Linux operating system, applications, and

personal data are stored. The G-Key allows each user to store their

personal info and preference securely, without leaving a trace on the

computer.

The Gdium will retail for under $400 and comes in 3 colors - White,

Black, and Pink. For more info, go to: http://www.gdium.com

Gdium.Com is also hosting the One Laptop Per Hacker (OLPH) program.

The OLPH Project provides a free infrastructure to individuals who

want to develop software for the Gdium platform. Gdium software can be

freely modified, adapted, optimized, or replaced; the user can

download and install new solution packs and freely modify most of it.

All interested developers can purchase an early release Gdium but must

take into account that these may have some some bugs and known issues.

OLPH Project members get one extra Gkey.

Openmoko Demos Distros on Open Mobile Phone

Openmoko Demos Distros on Open Mobile Phone

At the CES show in early January, Openmoko Inc, maker of fully open

mobile phone products, demonstrated several Linux distributions

running on the FreeRunner mobile hardware platform. These included the

Debian distro, community-driven FDOM, QT by Trolltech (recently

acquired by Nokia), and Google's Android.

In a video shot at CES 2009, William Lai of Openmoko discusses the

power of Open Source community-driven development for new mobile

applications and devices. Click here to watch:

http://www.youtube.com/watch?v=8R4KvJv6xSE

In addition to its role as a mobile phone, developers are embracing

the open hardware, open software, and open CAD of the Openmoko platform

to create new embedded consumer products.

Openmoko is both a commercial and community driven effort to create

open mobile products that consumers can personalize, much like a

computer. Openmoko is dedicated to bringing freedom and flexibility to

consumer electronics and vertical market devices.

www.openmoko.com.

Next Gen Cfengine 3 released

Next Gen Cfengine 3 released

Cfengine is an automated suite of programs for configuring and

maintaining Unix and Linux computers. It has been used on computing

arrays of up to 20,000 computers since 1993 by a wide range of

organizations. Cfengine is supported by active research and was the

first autonomic, hands-free management system for Unix-like operating

systems.

Cfengine 3.0.0 is a new and substantial rewrite of cfengine's

technology, building on core principles used over the past 15 years,

and extending them with technology inspired by Promise Theory.

(Promise theory describes policy-governed services in a framework of

autonomous agents. It is a framework for analyzing models of modern

networking and was developed at the University of Oslo.)

The new cfengine enhances its support of configuration management

with:

- A brand new language with a simple uniform syntax and powerful

templating.

- Integrated self-diagnostics and automated self-healing

documentation.

- Generic file editing with multiple models.

- Pattern matching and design features to match any environment,

large and small.

- Integrated knowledge management using topic maps.

- Support available from cfengine AS at http://www.cfengine.com.

- Upcoming Enterprise version in 2009 with extended features.

- GPLv3 code.

Cfengine 3 also offers full integration with existing cfengine 2

systems and auto-encapsulation of cfengine 2 for incremental upgrades.

Mark Burgess, author of Cfengine, recently gave a presentation on

Promise Theory and Cfengine 3 at Google on his way to LISA 08. It is

now available at YouTube: http://www.youtube.com/watch?v=4CCXs4Om5pY

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/dokopnik.jpg)

Deividson was born in União da Vitória, PR, Brazil, on

14/04/1984. He became interested in computing when he was still a kid,

and started to code when he was 12 years old. He is a graduate in

Information Systems and is finishing his specialization in Networks and

Web Development. He codes in several languages, including C/C++/C#, PHP,

Visual Basic, Object Pascal and others.

Deividson works in Porto União's Town Hall as a Computer

Technician, and specializes in Web and Desktop system development, and

Database/Network Maintenance.

Howard Dyckoff is a long term IT professional with primary experience at

Fortune 100 and 200 firms. Before his IT career, he worked for Aviation

Week and Space Technology magazine and before that used to edit SkyCom, a

newsletter for astronomers and rocketeers. He hails from the Republic of

Brooklyn [and Polytechnic Institute] and now, after several trips to

Himalayan mountain tops, resides in the SF Bay Area with a large book

collection and several pet rocks.

Howard maintains the Technology-Events blog at

blogspot.com from which he contributes the Events listing for Linux

Gazette. Visit the blog to preview some of the next month's NewsBytes

Events.

rI18N or The Real Internationalization Project

By Anonymous

My article "Keymap Blues in Ubuntu's Text Console" in LG#157 left a poster in LG#158

a bit annoyed.

He is saying that I didn't do this or didn't do that. And he

is right, I did not.

Specifically, I don't feel capable of proposing "[...] a good

consistent solution to all the woes of the Linux console."

Please address that challenge to Linus Torvalds.

I am, however, willing to take up a smaller challenge posited by that

poster: namely "[...] a sample keymap which is 'sized down' and [fits]

the author's needs".

Fine - let's go for it. As noted before, we are discussing the text console, no X

involved.

1 What has to be included?

The text console keymap covers, inter alia, the self-insertion

keys that we need to enter text. These keys vary a lot from country to

country, so I'm going to leave them out. I'm not even going to try defining

them for the US default keymap. The real concern, when considering text

mode applications, are the 'functional keys'.

2 What are functional keys?

This is a term I'm using for lack of anything better.

Alternative suggestions are welcome.

Functional keys are defined here by enumeration. The names

for the keys come from the physical keyboard I'm typing this

article on. They are quite common, actually:

F1 F2 F3 F4 F5 F6 F7 F8 F9 F10 F11 F12

Tab Backspace PrintScreen/Sys Rq Pause/Break

Insert Home PageUp Delete End PageDown

Up

Left Right

Down

3 Is anything missing?

You could argue that other keys should also be in the set 'functional

keys'. For instance, Escape or Enter, or the modifier keys Shift, Ctrl,

Alt. The reason they are not in the set is that they are not troublesome. I

have checked the default keymaps offered by the kbd project for US,

Germany, France, Italy, Spain, and Russia, and I would say these extra keys

are safe. They are already consistent, and the differences are practically

irrelevant.

4 Terminology

Again, a note on terminology: keymap normally refers to a

file where the key assignments are defined. The assignments can

refer to plain keys, but they can also refer to modified keys, e.g.

<ctrl><left>. In the keymap (the file!), a table of assignments

for given modifiers is also called a keymap, so we get a keymap for

<ctrl>, a keymap for <alt> and so on.

Additionally, you'll need to keep in mind the difference between key names

and assigned keymap variables. Examples:

| variable 'Delete' | is distinct from the key Delete |

| variable 'BackSpace' | is distinct from the key Backspace |

| variable 'F14' | does not need a physical key F14 |

5 The approach via multiple strings

What you see, especially in Ubuntu (implying Debian, although

I have not checked), is that the modifier keymaps rely on

multiple strings. Examples:

| Keys | Assignments |

| <f4> | F4 |

| <shift><f4> | F14 |

| <ctrl><f4> | F24 |

| <shift><ctrl><f4> | F34 |

| <altgr><f4> | F44 |

| <shift><altgr><f4> | F54 |

| <altgr><ctrl><f4> | F64 |

| <shift><altgr<ctrl><f4> | F74 |

Variables F4 to F74 would deliver strings to the application

expecting keyboard input and the application could then take

action. The funny thing is that Ubuntu only has strings for

F4 and F14, while F24 to F74 are empty, and no action can be

taken on receiving the empty string.

This is, however, not the point here. The point is: is it a

good idea to define all those keys via strings?

All those variables up to F256 are inherited from Unix. They were meant to

make the keyboard flexible - i.e. customizable - on a case-by-case basis

without assuming consensus. Unix and consensus don't mix. Everybody was

welcome to do with those variables whatever they wanted, and there is old

software that relies on such flexibility: define F74 in the keymap, and

you are going to touch somebody.

6 The approach via modifier status

There is a way to recognize, for example, <ctrl><f4> even if it has no

unique string attached to it. It must have a string, of course - otherwise

it would be ignored when the keyboard is in translation mode (either ASCII

or UTF-8), which is the normal case. The approach relies on just reading

the status of the modifiers - pressed down or not. All the modified keys

get the same string as the plain key and then you find out about the

modifier status. Example:

| Keys | Assignments |

| <f4> | F4 |

| <shift><f4> | F4 |

| <ctrl><f4> | F4 |

| <shift><ctrl><f4> | F4 |

| <altgr><f4> | F4 |

| <shift><altgr><f4> | F4 |

| <altgr><ctrl><f4> | F4 |

| <shift><altgr><ctrl><f4> | F4 |

You want to know if <ctrl><f4> was received? Check the input

for the F4 string, then read the status of <ctrl>. If <ctrl> is

pressed you got <ctrl><f4>; if not, you got <f4>.

Nice, isn't it? Not among the Unixsaurs. You see, reading

the modifier status is a Linux specialty. Even the Linux

manpage for ioctl_codes, where the trick is explained, gives

a strong warning against their use and recommends POSIX

functions. The catch is there are no such POSIX functions - so you

either use the Linux IOCTLs or you're out of luck.

Ah, I hear, but that's not platform neutral. So what? Go through the source

code of any text console editor and count the pre-processor directives that

are there to accommodate peculiarities of Unix variants 1-999. There are

also pre-processor directives to accommodate Linux, modifier status and

all. If Midnight Commander can do it, why not others?

There are text console editors that use the modifiers for

their Windows version but not for their Linux version. Why

not? Because Windows delivers the key and the modifier at

once, while Linux needs distinct commands, one to read the

key, one to read the modifier. Therefore there is a slight

time difference between the results - and theoretically, a risk

of incurring an error. A lame excuse: when the two commands are

next to each other in the source code, that error will never

materialize. We are talking about micro-seconds.

My choice is to use plain keys everywhere in the set of functional keys

whatever the modifiers may be, except the <ctrl><alt> combo

which will be reserved for system operations like switching consoles.

7 Which modifiers do we reasonably need?

How many keymaps do you need in the keymap? (If you're confused, please

review the terminology warning above.) Ubuntu has 64 keymaps in

the keymap, a mighty overkill. Fedora and OpenSUSE are a lot

more reasonable. I'll stick close to their version:

| plain | 0 |

| <shift> | 1 |

| <altgr> | 2 |

| <ctrl> | 4 |

| <shift><ctrl> | 5 |

| <altgr><ctrl> | 6 |

| <alt> | 8 |

| <ctrl><alt> | 12 |

This choice gives the entry keymaps 0-2,4-6,8,12 in the keymap (the file)

with a total of 8 keymaps (the assignment tables). As already mentioned,

Ubuntu has keymaps 0-63.

Note that defining 8 keymaps does not preclude defining

more. But those 8 keymaps should be defined as we dare

to propose here.

Note also to the users of the US keyboard: <altgr> is

nothing more than the Alt key on the right side, which must be

kept distinct since it plays a role on non-US keyboards.

8 Control characters 28-31

The characters 28-31, which are control codes, are

desperately difficult to find on non-US keyboards. All the

mnemonics implied by their name get lost. Besides, they also

get shifted and are awkward to generate.

These are Control_backslash, Control_bracketright,

Control_underscore, Control_asciicircum. A language and

keyboard neutral solution could be as follows:

| Name | Code | Assignment |

| Control_backslash | char. 28 | <ctrl><8> on numeric keypad |

| Control_bracketright | char. 29 | <ctrl><9> on numeric keypad |

| Control_underscore | char. 30 | <ctrl><0> on numeric keypad |

| Control_asciicircum | char. 31 | <ctrl><1> on numeric keypad |

9 Immediate and likely effects

The immediate effects of the proposed partial keymap for

functional keys concern system operations:

-

changing console

This would work with <ctr><alt><f1>, etc. - the same

way it works under X - instead of <alt><f1> etc.

-

previous/next console

This would become <ctrl><alt><left> and <ctrl><alt><right>

instead of <alt><left> and <alt><right>.

-

scrolling text in console

No more <shift><pageup> and <shift><pagedown>;

this would be <ctrl><alt><pageup> and

<ctrl><alt><pagedown>.

This would seem to conflict with DOSEMU - but it doesn't, because DOSEMU

uses raw keyboard mode.

The non-immediate effects depend on text mode applications

following Midnight Commander's example and using the Linux

ioctls to read the modifiers status.

If it spreads then it would be normal to move to the start of a

buffer with <ctrl><home> while <home> takes you to the

start of the line. To move to the next word <ctrl><right> would

be available. And you could highlight a selection pushing <shift> and

moving the cursor. Last but not least, a large number of keybindings based

on F1-F12 would become available and they would be language and country

independent!

To anybody who only has experience with the US keyboard running the US

default keymap, please try Nano on a Spanish or French keyboard. When you

are done, please come back and agree with me that this little partial

keymap should be called rI18N or the Real

Internationalization Project.

10 The goodies

So, after all those clarifications, here is the partial keymap for

the functional keys.

Talkback: Discuss this article with The Answer Gang

A. N. Onymous has been writing for LG since the early days - generally by

sneaking in at night and leaving a variety of articles on the Editor's

desk. A man (woman?) of mystery, claiming no credit and hiding in

darkness... probably something to do with large amounts of treasure in an

ancient Mayan temple and a beautiful dark-eyed woman with a snake tattoo

winding down from her left hip. Or maybe he just treasures his privacy. In

any case, we're grateful for his contributions.

A. N. Onymous has been writing for LG since the early days - generally by

sneaking in at night and leaving a variety of articles on the Editor's

desk. A man (woman?) of mystery, claiming no credit and hiding in

darkness... probably something to do with large amounts of treasure in an

ancient Mayan temple and a beautiful dark-eyed woman with a snake tattoo

winding down from her left hip. Or maybe he just treasures his privacy. In

any case, we're grateful for his contributions.

-- Editor, Linux Gazette

Copyright © 2009, Anonymous. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 159 of Linux Gazette, February 2009

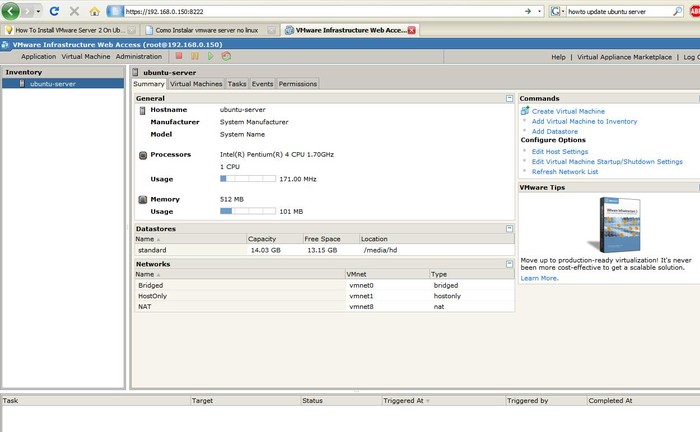

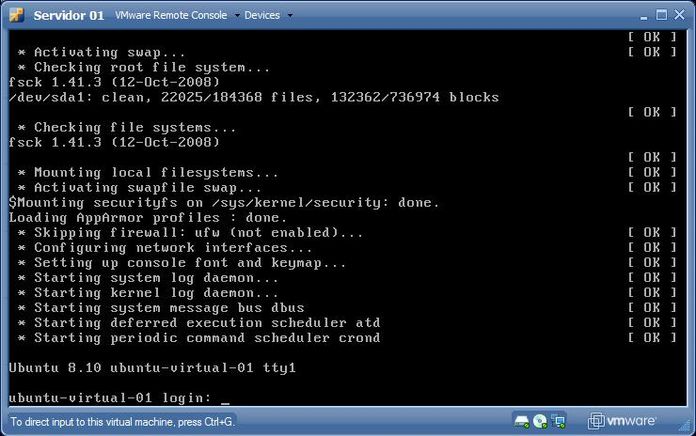

Installing VMWare Server 2 on Ubuntu Server 8.10 (Intrepid Ibex)

By Deividson Luiz Okopnik

1. Introduction

Finding reliable information about turning an Ubuntu Server

installation into a Virtualization Server is not an easy task, and if

you - like me - are going for a command-line only server, you will find

this guide extremely useful.

VMWare Server 2 is a very good, free alternative to virtualization

from VMWare - a company that has always been a leading provider

in the virtualization arena. VMWare Server 2 requires a license

number for installation, but this license can be freely obtained after

registering at the VMWare page.

This product offers a solution that allows, among other things, the

creation of headless servers. These are completely administrable via a

browser, including creating virtual machines, powering up or down, and even

command-line access.

Please note that while this article is aimed at a clean Ubuntu

Server installation, most of the information contained within can be

used on any modern distribution, whether command-line only or GUI.

2. Obtaining VMWare Server 2

To obtain the VMWare Server 2, you need to register at the VMWare

Web page, http://www.vmware.com/products/server/, by

clicking on the "Download" link.

After you submit your data, you will receive an e-mail with the serial

numbers needed to activate your account - both on Windows and on a Linux

host - and the download links. In this article, we will install using the

VMWare Server 2 tar package, so go ahead and download it - get the one that

fits your computer architecture (32 or 64 bit) - and save it on the

computer where you want to install it. I will use "/home/deivid/" as the

file location in the next few steps - change it to reflect the actual

location where you saved the file.

3. Installing VMWare Server 2

First things first. To install VMWare Server 2, you need to install

three packages: build-essential, linux-headers-server, and xinetd. If

linux-headers-server does not point to the headers of the kernel you are

using, install the correct ones. I had to install

"linux-headers-2.6.27-7-server". You can check what kernel version you

are currently running with "uname -r".

You can install these packages by using:

sudo apt-get install build-essential xinetd linux-headers-$(uname -r)

After you install the required packages, go to the folder where VMWare

Server's tar package was saved, unpack it, and execute the install

script as follows:

tar xvzf VMware-server-*.tar.gz

cd vmware-server-distrib

sudo ./vmware-install.pl

The install script will ask you some questions - where to install the

files and docs, the current location of some files on your system, etc. On

all of those questions, you can accept the default option by pressing

"Enter". On the EULA screen, you can quit reading it by pressing "q", but

you'll need to type "yes" then press "Enter" to accept it.

The next questions will be about the location of the current kernel

header include files, so the installer can use them to compile some modules

for you. The usual location is "/usr/src/linux-headers-<kernel

version>/include" - for example,

"/usr/src/linux-headers-2.6.27-7-server/include". After that, some files

will be compiled, and the installer will ask several more questions - but

again, the defaults all work fine.

After that, the service will be installed and running and you can access

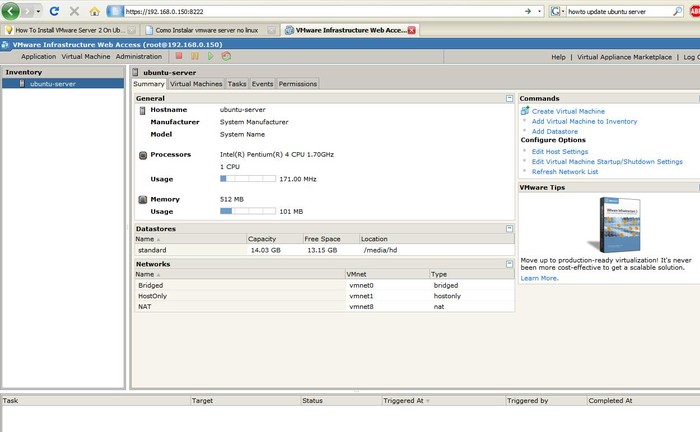

the control interface via any Web browser, accessing <server

ip>:8222 - for example, 192.168.0.150:8222. Please note that on

Firefox, there will be a warning about this site's certificate, but it's

safe to add an exception to it for this particular use.

To log in, by default you use the "root" account and

password of the machine it's running on. With Ubuntu, you need to set a root

password first - easily be done via the command "sudo passwd root". You

can give permissions to other users in the "Permissions" link of the Web

interface.

All the virtual machine administration can be done via this Web

interface, including virtual machine creation, boot-up, access, and

shut-down.

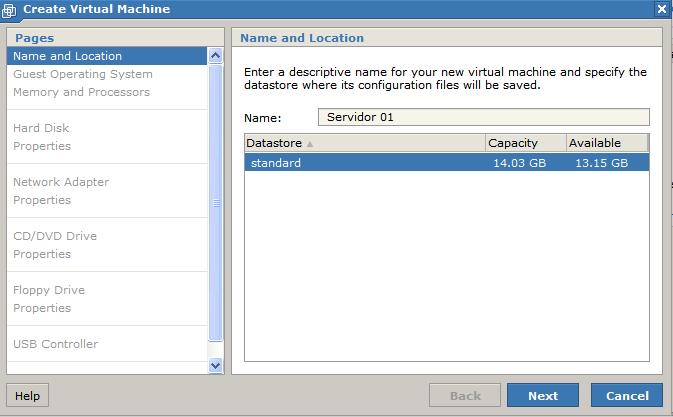

4. Creating a Virtual Machine

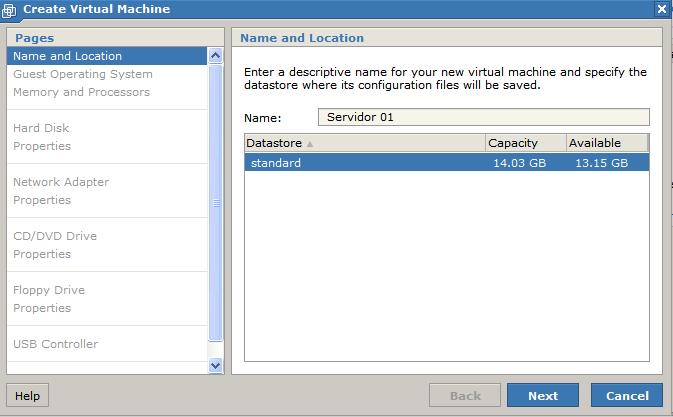

The process to create a virtual machine is pretty simple. Just click

the "Create Virtual Machine" link in the web interface, and follow the

on-screen instructions.

Here's a description of some of the data the system asks for during the

installation:

- Name

- Virtual machine name

- Datastore

- Location where the virtual machine data will be stored inside the computer

(configured during the VMWare Server 2 installation)

- Guest Operating System

- the OS that will run inside the virtual machine

In the next few steps, you configure the specifications of the virtual

machine, including the amount of RAM and number of processors, capacity of

the HD and the location where the data will be stored, details about the Network

adapter, CD-ROM, floppy drives, USB controllers, etc. Configure

accordingly with what you will need in the virtual machine.

In the Networking configuration dialog, you have three options for a network

connection:

- Host Only

- Direct connection to the host machine (host X VM only)

- Bridged

- Gives the virtual machine a real IP in the external network

via Bridging

- NAT

- The virtual machine can access the external network via a NAT

table without having its own external IP

After you've completed all of the above configuration, the Virtual

Machine will be created.

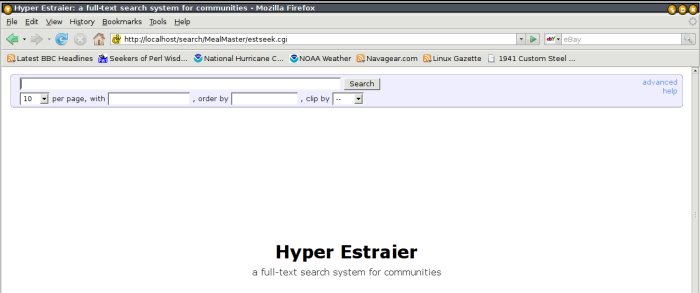

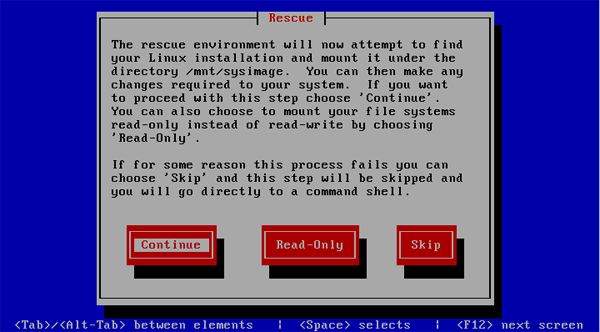

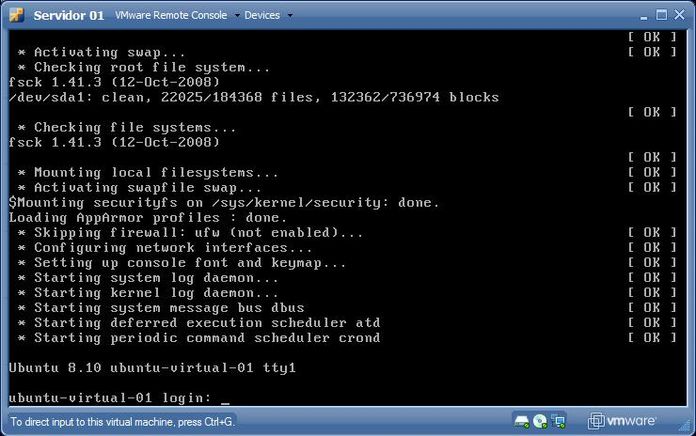

5. Accessing a Virtual Machine

All the access to the virtual machine, as previously mentioned, is done

via the Web interface. To power up the machine, you select it in the menu

and press the "Play" button at the top of the window - other buttons

are used to power-down and reboot it.

To gain access to the virtual machine console (e.g., to install an

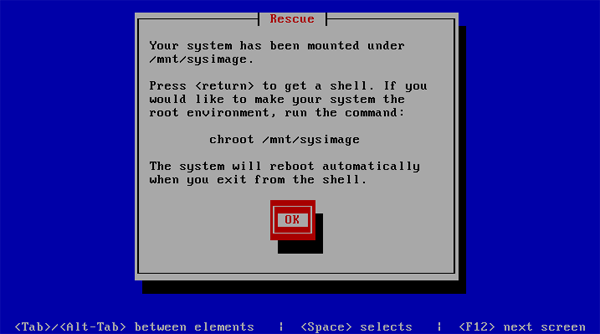

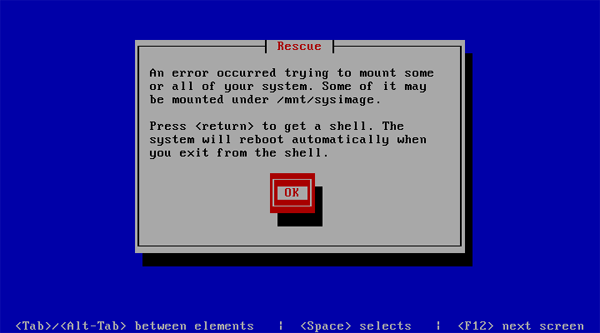

Operational System), after the machine is powered on, click on the

"Console" link. Note that you will have to install a browser plug-in the

first time you do so, but the installation is pretty straight-forward

(click "Install Plug-in" and follow your browser's instructions - it's also

needed to reboot the browser after the plug-in installation).

After that you can use that "Console" link to have access to the

computer. Operating System installation on a virtual machine goes as if

you were using a normal computer, so if needed, you can use any article

about installing the operating system of your choice.

6. Summary

Virtualization is an important topic in computing, and is getting

more and more popular lately. However, finding specific information -

like how to make use of virtualization on a command-line only server, is a

bit tricky. That is the gap this article has tried to fill - and I hope you

(readers) can make a good use of it.

Here are links for some pages that might be useful:

Ubuntu Server Installation Guide: https://help.ubuntu.com/8.10/serverguide/C/installing-from-cd.html

VMWare Server 2: http://www.vmware.com/products/server/

"Any intelligent fool can make things bigger, more complex, and more violent. It takes a touch of genius - and a lot of courage - to move in the opposite direction."

-- Albert Einstein

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/dokopnik.jpg)

Deividson was born in União da Vitória, PR, Brazil, on

14/04/1984. He became interested in computing when he was still a kid,

and started to code when he was 12 years old. He is a graduate in

Information Systems and is finishing his specialization in Networks and

Web Development. He codes in several languages, including C/C++/C#, PHP,

Visual Basic, Object Pascal and others.

Deividson works in Porto União's Town Hall as a Computer

Technician, and specializes in Web and Desktop system development, and

Database/Network Maintenance.

Copyright © 2009, Deividson Luiz Okopnik. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 159 of Linux Gazette, February 2009

Away Mission: 2008 in Review - part 1

By Howard Dyckoff

The past year saw a number of conference trends. Many formerly large

conferences have layered related topics and sub-conferences in order to

hold on to their audiences. LinuxWorld, for example, added mobility tracks

and the "Next Generation Data Center" conference for the last two years and

now has changed its name to OpenSource World for 2009. USENIX events also

had many overlapping related conferences occurring alongside its major

events.

But 2008 generally saw established conferences shrinking due to tighter

budgets, increasing travel costs, and the deepening recession. Balancing

this trend, there were many more tightly focused events springing out of

the myriad of Open Source Communities. There were also many more on-line

"virtual" conferences that could satisfy keynote attendees (although, in

this reporter's opinion, the limited expo venues associated with these

virtual events just can't replace the sights, sounds, and swag of a real

expo.)

An example of such a virtual conference was the SOA and Virtualization

events by TechTarget, IDG and ZDnet. Here's a recent one:

http://datacenterdecisions.techtarget.com/seminars/AEV-virtualseminar.html

Along the same lines, certain conferences added or expanded video coverage,

often for a lower fee than full conference attendance, allowing people to

attend 'on-line'. Several USENIX events had live video feeds provided by Linux-Magazin.de, and these are

available as an archive for review by attendees. This approach means no

conference bag or networking opportunities, but definitely is easier on

both the budget and the body.

Many newer conferences such as QCon and Best SD Practices for SW developers

and project managers saw modest growth.

The Leviathan - Oracle OpenWorld

One of the largest events just keeps getting larger: Oracle OpenWorld (OOW). It

had over 42,000 attendees with multliple overlapping sub-conferences such

as Oracle Develop and the JDEdwards, Siebel, and BEA conferences. In fact

it shifted into the September timeframe that BEA used for its annual user

conference, abandoning Oracle's traditional early November timeframe.

New this year was a comprehensive video and session material archive. This

was a joint venture between Oracle and Altus Learning Systems, which

provides digital media production services to companies for internal and

external use.

There were 1900 technical and general sessions and these are all archived.

Additionally, all Open Oracle World (OOW) keynotes are fully archived, with

searchable text transcripts and all slides timed and reposted. This

allows a user to search for a phrase in the transcript, then view the

accompanying slide and hear the associated audio. This could be a good

way to jog one's memory months after attending the conference, as well as

exploring sessions not attended by quickly scanning for desired content.

Altus is hoping this makes access and repurposing of content easier for users

of their service.

I was given an opportunity to discuss the multimedia archive with some of

the principals before Oracle OpenWorld. One of the key points of the

discussion was that only a limited sampling of sessions would be available

as a teaser to the public; most of these would be the keynote sessions that

are usually available on-line after a conference. I had specifically asked

about having one or two of these sample sessions being about Linux or

Oracle Open Source collaborations and was assured at least one such session

would be available for preview. But, as fate is fickle, my contact spoke to

me shortly after Oracle OpenWorld (OOW) and said the chosen Linux session

would only be available for a short time, not past LG's next publication

date in October.

Here is the Aldus OOW preview page, which also hosts most of the OOW

keynotes: http://ondemandpreview.vportal.net/

Now the flip side of this: Apparently, if you did not attend the 2008

event, you can get all media at the Oracle OpenWorld OnDemand portal but

have to pay US$700. OOW-08 attendees can use the OOW 'course catalog' to

get only the slides for most presentations - or pay $400 to access the

portal. This is very different from previous years where slides were

made available to the public on the Oracle Technical Network (OTN).

However, in a publicly viewable thread, the following information is

available:

2008 OpenWorld Presentations: http://www28.cplan.com/cc208/login.jsp

(Login/password: cboracle/oraclec6)

Use this for login and for accessing each presentation.

Using that info, you can download a presentation on the Coherence

Application Server Grid:

http://www28.cplan.com/cbo_export/208/PS_S299531_299531_208-1_v1.pdf

To get a multi-media view of this material, this video covers Oracle

Coherence Data Grid - formerly the Tangorsol product line:

http://www.youtube.com/watch?v=4Sq45B8wAXc&feature=channel_page

To access the Linux-oriented presenatations from the OOW catalog, go to the

portal link, login, and then select "Linux and Virtualization" from only

the focus area pull-down, set the other pull-downs to "All".

Here is a link to the rather extensive OOW "Unconference" listed on the OOW

wiki: http://wiki.oracle.com/page/Oracle+OpenWorld+Unconference?t=anon

The big event at OOW was the announcement of the Oracle-HP eXaData database

machine. Jet black in its rack and wearing the new "X" logo, it was

pretty and powerful with an impressive set of specs. But it also sported a

huge price tag, over a million dollars for starters, which effectively meant it

competed with the high end SMP boxes like Convex and Teradata. It is very

fast on very, very big databases, just the kind of thing that services like

NASDAQ need and can afford.

The magic came from blending disks with Intel Xeon multi-core processors

and an Oracle secret sauce that ran a lite version of Oracle to produce

parallel query processing. That also meant the new hardware needed to talk

to an Oracle-like DB as the controller, and there were a lot of Oracle

licenses included for the disk-and-CPU array. I believe some of the newer

storage arrays, coupled with FOSS databases, can produce decent performance

at similar or lower costs per transaction. (Check out Sun's ZFS-based storage

devices, running with Postgres or MySQL.)

Another major announcement was the new Oracle Beehive collaboration suite.

The goal is to help organizations secure communications and add

collaboration into business processes. Oracle Beehive is an open-standards

based enterprise collaboration platform, with integrated workspaces,

calendar, instant messaging, and e-mail.

This is a link to the PDF for the session introducing Beehive:

http://gpjco.edgeboss.net/download/gpjco/oracle/course_html/2008_09_22/S298423.pdf

One great change is that the bigger Oracle Develop sub-conference was at